[ Resolution Studies ]

Refuse to let the syntaxes of (a) history direct our futures.

An introduction to Resolution

Abstract

This essay starts with the description of a pioneering work of video art by Jon Satrom: QTzrk (2011). With the help of this case study, Menkman illustrates how certain digital video settings, or resolutions, are not supported unilaterally, but could have changed our entire understanding of the medium of video. The case study serves as an introduction to “Resolution Studies”, a proposal for a theory that involves more than just the calculation of resolutions as described in physics. In essence, resolution studies is about literacy: literacy of the machines, the people, the people creating the machines, and the people being created by the machines. But resolution studies does not only involve the study of the effects of technological progress or the aestheticization of the scales of resolution. Resolution studies also involves research into alternative settings that could have been in place, but are not – and the technologies which are, as a result, rendered outside of the discourse of computation.

Jon Satrom QTzrk (2011). (video)

Technically, QTzrk (Jon Satrom, video installation, 2011) consists of two main video ‘elements’. The first element, a 16:9 video, is a desktop video – a video captured from the desktop perspective – and features ‘movie.mov’. Movie.mov is shown on top of the desktop, an environment which gets deconstructed during QTzrk. The second type of video element consists of the QTlets – smaller, looped videos that are not quadrilateral. The QTlets are constructed and opened via a now obsolete option in the QuickTime 7 software: Satrom used a mask to change the shape of the otherwise four-cornered videos and transformed them into ‘video shards’. The QTlet elements are featured in QTzrk but are also released as standalone downloadables, available on Satrom’s website; however, they no longer play properly on recent versions of Mac OS X (the masking option is now obsolete and playing these files significantly slows down the computer).

Story-wise, QTzrk starts when the movie.mov file is clicked. It opens inside a QuickTime 7 player, on top of what later turns out to be a clean desktop that is missing a menu bar. Movie.mov shows a slow-motion nature video of a Great White Shark jumping out of the ocean. Suddenly, a pointer clicks the pause button of the interface, and the Great White Shark turns into a fluid video, leaking out of the QuickTime 7 movie.mov interface buttons. The shark folds up in a kludgy pile of video, resting on the bottom of the desktop, still playing but now in a messily folded way. The QuickTime 7 movie.mov window changes into what looks like a ‘terminal’, which is then commanded to save itself as a QTlet named ‘shark_pile’. The shark_pile is picked up by a mouse pointer, which acts like an invisible hand, hovering the pile over the desktop and finally dropping it back into the QuickTime window, which now shows line after line of mojibake data. This action – dropping the shark_pile inside the QuickTime window – seems to be the trigger for the desktop environment to collapse.

The QuickTime player breaks apart, no longer adhering to its quadrilateral shape but taking the shape of a second, downloadable QTlet. On the desktop, 35 screenshots of the shark frame appear. A final, new QTlet is introduced; this one consists of groups of screenshots, which, when opened up, show glitched PNG files. These clusters themselves transform into new video sequences (a third downloadable QTlet), adding more layers to the collage. By now the original movie.mov seems to slowly disappear into the desktop background, which itself features a ‘datamoshed’ shark video (datamoshing is the colloquial term for the purposeful deconstruction of an .mpeg, or of any other video compressed with a standard that uses intraframes/keyframes). After a minute of noisy droning of the QTlets on top of a datamoshed shark, the desktop suddenly starts to zoom out, revealing one QuickTime 7 window inside the next. Finally the cursor clicks the closing button of the QuickTime 7 interface, ending the presentation and revealing a clean white desktop with just the one movie.mov file icon in the middle. Just when the pointer is about to reopen the movie.mov file, and start the loop all over again, QTzrk ends.

TITLE: QTzrk

DIMENSIONS: expandable/variable

MATERIALS: QuickTime 7

YEAR: 2011

PRICE: FREE; Instructions, TXT, & download available (here)

Wendy Chun: Updating to Remain the Same (2016)

Alex Galloway: The Interface Effect (2012)

Moving Beyond Resolution

I wish I could open Google image search, query ‘rainbow’ and just listen to any image of a rainbow Google has to offer me. I wish I could add textures to my fonts and that I could embed within this text videos starting at a particular moment, or a particular sequence in a video game. I wish I could render and present my videos as circles, pentagons, and other, more organic manifolds.

If I could do these things, I believe my use of the computer would be different: I would create modular relationships between my text files, and my videos would have uneven corners, multiple timelines and changing soundtracks. In short, I think my computational experience could be much more like an integrated collage, if my operating system would allow me to make it so.

In 2011, Chicago glitch artist Jon Satrom released his QTzrk installation. The installation, consisting of four different video loops, offers a narrative that introduced me both to the genre of desktop film and to non-quadrilateral video technology. As such, it left me both shocked and inspired: so much so that, in 2012, I set out to build Compress Process, an application that would make it possible to navigate video inside a 3D environment. I too wanted to stretch the limits of video, especially beyond its quadrilateral frame. However, upon the release of Compress Process, Wired magazine reviewed the video experiment as a ‘flopped video game’. Ironically, the Wired reporter could not imagine video existing outside the confines of its traditional two-dimensional, flat and standardized interface. From his point of view, this other resolution – 3D – meant an imposed re-categorization of the work; it became a gaming application and was reviewed (and burned) as ‘a flop’.(1)

This kind of imposition of the interface (or the interface effect) is extensively described by NYU new media professor Alexander Galloway in his book The Interface Effect.(2) Galloway writes that ‘an interface is not a thing, an interface is always an effect. It is always a process or a translation.’ The interface thus always becomes part of the process of reading, translating and understanding our mediated experiences. This reasoning can also explain the reaction of Klatt, the Wired reviewer of Compress Process, and it makes me wonder: is it at all possible to escape the normative or habitual interpretation of the interface?

As Wendy Hui Kyong Chun writes: ‘New media exist at the bleeding edge of obsolescence. […] We are forever trying to catch up, updating to remain (close to) the same.’(3) Today, the speed of the upgrade has become incommensurable; new upgrades arrive too fast and even seem to exploit this speed as a way to obscure their new options, interfaces and (im)possibilities. Because of the speed of the upgrade, remaining the same, or using technology in a continuous manner, has become a mere ‘goal’. Chun’s argument seems to echo what Deleuze already described in his Postscript on the Societies of Control:

Capitalism is no longer involved in production […] Thus it is essentially dispersive, and the factory has given way to the corporation. The family, the school, the army, the factory are no longer the distinct analogical spaces that converge towards an owner – state or private power – but coded figures – deformable and transformable – of a single corporation that now has only stockholders. […] The conquests of the market are made by grabbing control and no longer by disciplinary training, by fixing the exchange rate much more than by lowering costs, by transformation of the product more than by specialization of production.(4)

In other words, the continuous imposition of the upgrade demands a form of control over the user, leaving them with a sense of freedom while their practices actually become more and more restricted.

Today, the field of image processing forces almost all formats to follow quadrilateral, ecology-dependent, standard (re)solutions, which result in tradeoffs (compromises) between settings that manage speed and functionality (bandwidth, control, power, efficiency, fidelity), while at the same time considering claims in the realms of value vs. storage, processing and transmission. At a time when image-processing technologies function as black boxes, we desperately need to research, reflect on and re-evaluate our technologies of obfuscation. However, institutions – schools and publications alike – appear to consider the same old settings over and over, without critically analyzing or deconstructing the programs, problems and possibilities that come with our newer media. As a result, there exists no support for the study of (the setting of) alternative resolutions. Users just learn to copy the use of the interface, to paste data and replicate information, but they no longer question, or learn to question, their standard formats.

Mike Judge made some alarming forecasts in his 2006 science fiction comedy film Idiocracy, which, albeit indirectly, echo the words of science fiction writer Philip K. Dick in his dystopian short story ‘Pay for the Printer’ (1956): in an era in which printers print printers, slowly everything will resolve into useless mush and kipple. In a way, we have to realise that if we do not approach our resolutions analytically, the next generation of our species will likely be discombobulated by digital generation loss – a loss of fidelity in resolutions between subsequent copies and trans-copies of a source. As a result, daily life will turn into obeying the rules of institutionalized programs, while the user will end up only producing monotonous output.

In order to find new, alternative resolutions and to stay open to refreshing, stimulating content, I need to ask myself: do I, as a user, consumer and producer of data and information, depend only on my conditioning and the resolutions that are imposed on me, or is it possible for me to create new resolutions? Can I escape the interface, or does every decontextualized materiality immediately get re-contextualized inside another, already existing, paradigm or interface? How can these kinds of connections block or obscure intelligible reading, or actually offer me a new context to resolve the information? Together these questions set up a pressing research agenda but also a possible future away from monotonous data. In order to try to find an answer to any of these questions, I will need to start at the beginning: an exposition of the term ‘resolution’.

An Etymology of Resolution

The meaning of the word resolution depends greatly on the context in which it is used. The Oxford Dictionary, for instance, differentiates between an ordinary and a formal use of the word, while it also lists definitions from the discourses of music, medicine, chemistry, physics and optics. What is striking about these definitions is that they read not just diverse, but at times even contradictory. In order to come to terms with the many different definitions of the word resolution, and to avert a sense of inceptive ambiguity, I will start this chapter with a very short etymology and description of the term.

The word resolution finds its roots in the Latin word re-solutio and consists of two parts: re-, which is a prefix meaning again or back, and solution, which can be traced back to the Latin action noun solūtiō (“a loosening, solution”), or solvō (“I loosen”). Resolution thus suggests a separation or disentanglement of one thing from something it is tied up with, or “the process of reducing things into simpler forms.”(1) The Oxford Dictionary places the origin of resolution in late Middle English where it was first recorded in 1412, as resolucioun (“a breaking into parts”), but also references the Latin word resolvere.(2) Douglas Harper, historian and creator of the Online Etymology Dictionary, describes a kinship with the fourteenth-century French word solucion, which translates to division, dissolving, or explanation.(3) Harper also writes that around the 1520s the term resolution was used to refer to the act of determining or deciding upon something by “breaking (something) into parts to arrive at a truth or to make a final determination.” Following Harper, resolving, in terms of “a solving” (of a mathematical problem) was only first recorded in the 1540s, as was its usage when meaning “the power of holding firmly” (resolute).(4) This is where to “pass a resolution” stems from (1580s).(5) Resolution in terms of a “decision or expression of a meeting” is dated at around 1600, while a resolution made around New Year, generally referring to the specific wish to better oneself, was first recorded around the 1780s.

When a resolution is used in the context of a formal, legislative, or deliberative assembly, it refers to a proposal that requires a vote. In this case, resolution is often used in conjunction with the term motion, and refers to a proposal (also connected to “dispute resolution”).(6) So while in chemistry resolution may mean the breaking down of a complex issue or material into smaller pieces, resolution can also mean the finding of an answer to a problem (in mathematics) or even the deciding of a firm, formal solution.(7) This use of the term resolution – a final solution – seems to oppose the older definitions of resolution, which generally signify an act of breaking down. Etymologically, however, these different meanings of the term all still originate from the same root.

Douglas Harper dates the first recording of resolution referring to the “effect of an optical instrument” back to the 1860s.(8) The Oxford Dictionary does not date any of the different uses of the term, but it does end its list of definitions with: “5) The smallest interval measurable by a telescope or scientific instrument; the resolving power. 5.1) The degree of detail visible in a photographic or television image.”(9)

(1) "Resolution." Douglas Harper: Online Etymology Dictionary. Accessed: January 30, 2018. <http://dictionary.reference.com/browse/resolution>.

(2) "Resolution." Dictionaries, Oxford. Oxford University Press. Accessed: January 30, 2018. <http://www.oxforddictionaries.com/definition/english/resolution>.

(3) "Solution." Douglas Harper: Online Etymology Dictionary. Accessed: January 30, 2018. <http://www.etymonline.com/index.php?term=solution>.

(4) "Resolution." Douglas Harper: Online Etymology Dictionary. Accessed: January 30, 2018. <http://dictionary.reference.com/browse/resolution>.

(5) "Resolve." Douglas Harper: Online Etymology Dictionary. Accessed: January 30, 2018. <http://dictionary.reference.com/browse/resolve>.

(6) Shaw, Harry. Dictionary of problem words and expressions. McGraw-Hill Companies: 1987. Accessed: January 30, 2018. <http://problem_words.enacademic.com/1435/resolution,_motion>.

(7) 1) A firm decision to do or not do something, 1.1) A formal expression of opinion or intention agreed on by a legislative body or other formal meeting, typically after taking a vote 2) The quality of being determined or resolute 3) The action of solving a problem in a contentious matter 3.1) Music: The passing of a discord into a concord during the course of changing harmony: 3.2) Medicine: The disappearance of a symptom or condition 4) Chemistry: The process of reducing or separating something into constituent parts or components 4.1) Physics: The replacing of a single force or other vector quantity by two or more jointly equivalent to it. 5) The smallest interval measurable by a telescope or scientific instrument; the resolving power. 5.1) The degree of detail visible in a photographic or television image.

Angus Stevenson (ed.): Oxford Dictionary of English. Third edition, Oxford 2010: p. 1512.

(8) "Resolution." Douglas Harper: Online Etymology Dictionary. October 30, 2015. <http://dictionary.reference.com/browse/resolution>.

(9) Oxford Dictionary of English. Edited by Angus Stevenson. Third edition, Oxford University Press. 2010. p. 1512.

Rayleigh phasing

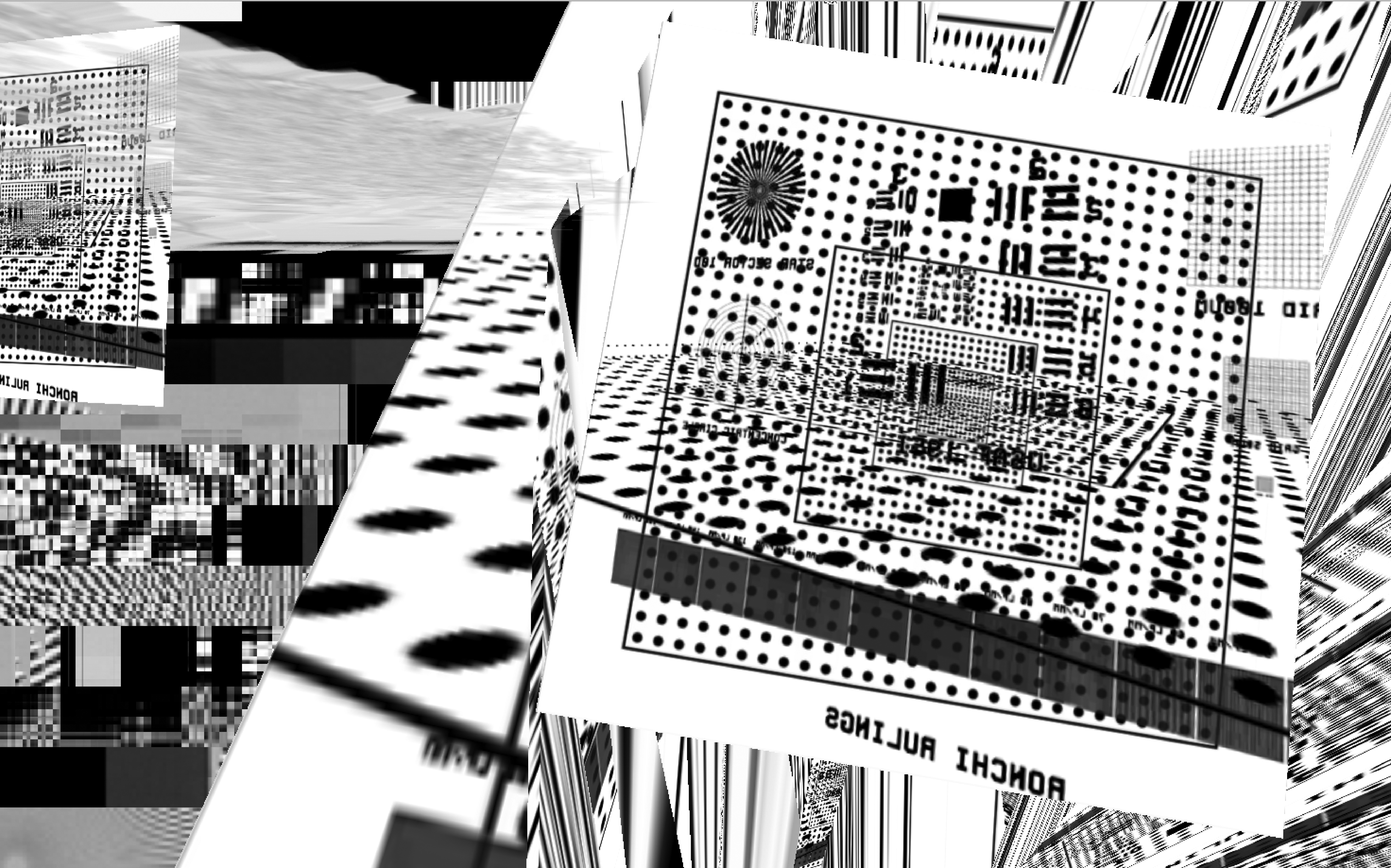

Decalibated Ronchi Rulings

Optical Resolution

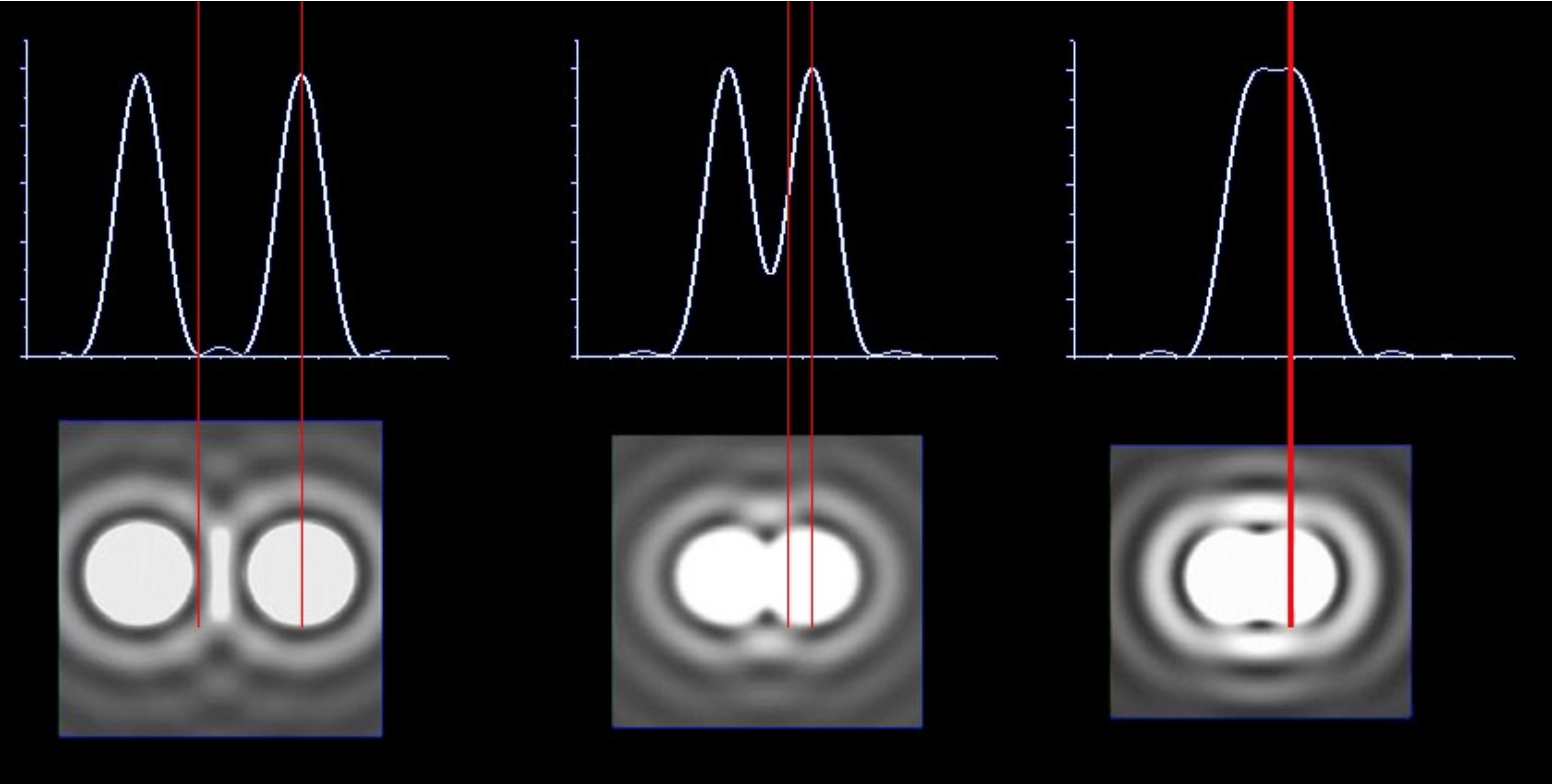

In 1873, the English physicist John William Strutt succeeded his father to become the third Baron Rayleigh. While Rayleigh’s most notable accomplishment was the discovery of the inert (not chemically reactive) gas argon in 1895, for which he earned a Nobel Prize in 1904, Rayleigh also worked in the field of optics. Here he wrote a criterion that is still used today in the process of quantifying angular resolution: the minimum angle at which a point of view still resolves two points, or the minimum angle at which two points become visible independently from each other.(5) In an 1879 paragraph titled ‘Resolving, or Separating, Power of Optical Instruments’, Lord Rayleigh writes: ‘According to the principles of common optics, there is no limit to the resolving power of an instrument.’ But in a paper written between 1881 and 1887, Rayleigh asks: ‘How is it […] that the power of the microscope is subject to an absolute limit […]? The answer requires us to go behind the approximate doctrine of rays, on which common optics is built, and to take into consideration the finite character of the wave-length of light.’(6)

When it comes to straightforward optical systems that consider only light rays from a limited spectrum, Rayleigh was right: in order to quantify resolution of these optical systems, contrast, the amount of difference between the maximum and minimum intensity of light visible within the space between two objects, is indispensable. Just like a white line on a white sheet of paper needs contrast to be visible (to be resolved), it will not be possible to distinguish between two objects when there is no contrast between these two objects. Contrast between details defines the degree of visibility, and thus resolution: no contrast will result in no resolution.

But the contrast between two points, and thus the minimum resolution, is contingent on the wavelength of the light and any possible diffraction patterns between those two points in the image. This ring-shaped diffraction pattern of a point (light source), known as an Airy Pattern, named after George Biddell Airy, is the result of diffraction and is characterized by the wavelength of light illuminating a circular aperture. When two point lights are moved into close proximity, so close that the first Airy disk’s zero crossing falls inside the second Airy disk’s zero crossing, the oscillation within the Airy Patterns will cancel most contrast of light between them. As a result, the two points will optically be blurred together, no matter the lens’s resolving power. The diffraction of light thus results in the fact that even the biggest imaginable telescope has limited resolving power.

Rayleigh described this effect in his Rayleigh criterion, which states that two points can be resolved when the centre of the diffraction pattern of one point falls on or just outside the first minimum of the diffraction pattern of the other. For light passing through a circular aperture, he states that it is possible to calculate a minimum angular resolution as:

θ = 1.22 λ / D

In this formula, θ stands for angular resolution (which is measured in radians), λ stands for the wavelength of the light used in the system (blue light has a shorter wavelength, which will result in a better resolution), and D stands for the diameter of the lens’s aperture (the hole with a diameter through which the light travels). Aperture is a measure of a lens’s ability to gather light and resolve fine specimen detail at a fixed object distance.
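The relation between wavelength, aperture and angle can be made tangible with a small calculation. What follows is a minimal sketch in Python, not a model of any real instrument: the function name `rayleigh_angle`, the 10 cm aperture and the wavelengths chosen for ‘blue’ (450 nm) and ‘red’ (700 nm) light are assumptions made for illustration only.

```python
import math


def rayleigh_angle(wavelength_m: float, aperture_m: float) -> float:
    """Return the minimum resolvable angle in radians: theta = 1.22 * lambda / D."""
    if wavelength_m <= 0 or aperture_m <= 0:
        raise ValueError("wavelength and aperture diameter must be positive")
    return 1.22 * wavelength_m / aperture_m


def to_arcseconds(angle_rad: float) -> float:
    """Convert an angle from radians to arcseconds."""
    return math.degrees(angle_rad) * 3600


# Assumed, illustrative values: a 10 cm aperture under blue and red light.
APERTURE_M = 0.10
blue = rayleigh_angle(450e-9, APERTURE_M)
red = rayleigh_angle(700e-9, APERTURE_M)

print(f"blue: {blue:.3e} rad ({to_arcseconds(blue):.2f} arcsec)")
print(f"red:  {red:.3e} rad ({to_arcseconds(red):.2f} arcsec)")
```

Under these assumptions the blue light yields the smaller angle, and doubling the aperture diameter halves the angle for either colour: the limit is a property of the whole arrangement, not of the light or the lens alone.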

As stated before, angular resolution is the minimum angular separation two points (light sources) need in order to remain individually distinguishable from each other. Here, a smaller resolution means that a smaller angle (and thus less space) is necessary between the resolved dots. However, real optical systems are complex and suffer from aberrations, flaws in the optical system and practical difficulties such as specimen quality. Besides this, in reality two dots most often radiate or reflect light at different levels of intensity. This means that in practice the resolution of an optical system is always higher (worse) than its calculable minimum.

All technologies have a limited optical resolution, which depends on, for instance, aperture, wavelength, contrast and angular resolution. When the optical technology is more complex, the actors that are involved in determining the minimal resolution of the technology become more diverse and the setting of resolution changes into a more elaborate process. In microscopy, just like in any other optical technology, angular and lateral resolution refer to the minimum distance (measured in radians or in metres) needed between two objects, such as dots, to still just tell them apart. However, a rewritten mathematical formula defines the theoretical resolving power in microscopy as:

dmin = 1.22 λ / NA

In this formula, dmin stands for the minimal distance two dots need from each other to be resolved, or minimal resolution. λ stands again for the wavelength of light. In the formula for microscopy, however, the diameter of the lens’s aperture (D) is swapped with NA, or Numerical Aperture, which consists of a mathematical calculation of the light-gathering capabilities of a lens. In microscopy, this is the sum of the aperture of an objective and the diaphragm of the condenser, which have set values per microscope. Resolution in microscopy is thus determined by certain physical parameters that not only include the wavelength of light, but also the light-gathering power of objective and lenses.
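How a single added agent changes the outcome can be sketched in a few lines of Python. The sketch assumes, following the description above, that NA is the sum of the objective’s aperture and the condenser’s aperture; the function name `min_distance`, the wavelength of 550 nm and the three condenser stops are hypothetical values chosen for illustration, not the settings of an actual microscope.

```python
def min_distance(wavelength_m: float, na_objective: float, na_condenser: float) -> float:
    """Return d_min = 1.22 * lambda / NA, with NA taken as the sum of
    the objective and condenser apertures (an assumption based on the text)."""
    total_na = na_objective + na_condenser
    if wavelength_m <= 0 or total_na <= 0:
        raise ValueError("wavelength and total numerical aperture must be positive")
    return 1.22 * wavelength_m / total_na


# Hypothetical setup: green light, a fixed objective, and a condenser
# diaphragm that only offers three discrete stops.
WAVELENGTH_M = 550e-9
NA_OBJECTIVE = 0.95
CONDENSER_STOPS = (0.30, 0.60, 0.90)

distances = [min_distance(WAVELENGTH_M, NA_OBJECTIVE, stop) for stop in CONDENSER_STOPS]

for stop, d in zip(CONDENSER_STOPS, distances):
    print(f"condenser stop {stop:.2f}: d_min = {d * 1e9:.0f} nm")
```

Because the diaphragm in this sketch offers only three stops, only three minimal distances are available to the user; any value in between is not a physical impossibility, but simply not afforded.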

The definition of resolution in this second formula is expanded to also include the attributed settings of strength, accuracy or power of the material agents that are involved in resolving the image, such as the objective, condenser and lenses. At first sight, this might seem like a minimal expansion, and lead one to dismiss it as a simple rephrasing or rewriting of the earlier formula for angular resolution. However, the expansion of the formula with just one specific material agent, the diaphragm, and the attribution of certain values to this material agent (which are often determined in increments rather than a fluid spectrum of values) is actually an important step that illustrates how technology gains complexity. Every time a new agent is added to the equation, the agent introduces complexity by adding its own rules or possible settings, involving or influencing the behaviour of all other material agents. Moreover, the affordances of these technologies, or the clues inherent to how the technology is built that tell a user how it can or should be used, also play a role that intensifies the complexity of the resolution of the final output. As James J. Gibson writes: ‘[…] affordances are properties of things taken with reference to an observer but not properties of the experiences of the observer.’(7)

In photography, for instance, the wider the aperture, the shallower the depth of field, the closer the lens needs to come to the object. This also introduces new possibilities for failure: if the diaphragm does not afford an appropriate setting for a particular equation, it might not be possible to resolve the image at all. The imaging technology might state an ‘unsupported setting’ error message, in which case the technological assemblage refuses to resolve an image entirely – the foreclosure of an abnormal option rather than an impossibility. Thus the properties of the technological assemblage that the user handles, its affordances, add complexity to the setting of a resolution.

Aspect Ratio, Resolution and Resolving power

In optical systems, the quality of the rendered image depends on the resolving power and acutance of the technological assemblage that renders the image; the (reflected) light of the source or subject that is captured, the context and conditions in which the image is recorded. Consider, for instance, how different objects (lens, film, image sensor, compression algorithm) have to weigh (or dispute) between standard settings (frame rate, aperture, ISO, number of pixels and pixel aspect ratio, color encoding scheme or weight in mbps), while having to evaluate the technologies’ possible affordances; the possible settings the mediating technological architecture offers when connecting or composing these objects and settings. Finally, the resolving power is an objective measure of resolution, which can, for instance, be measured in horizontal lines (horizontal resolution) and vertical lines (vertical resolution), line pairs or cycles per millimetre. The image acutance refers to a measure of sharpness of the edge contrast of the image and is measured following a gradient. A high acutance means a cleaner edge between two details while a low acutance means a soft or blurry edge.
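The difference between contrast and acutance can be illustrated with two one-dimensional edge profiles. The sketch below is in Python and rests on an assumption: it takes the mean squared difference between neighbouring intensity samples as a stand-in for acutance, which is one common proxy rather than a standardized measure. The function name `acutance` and both profiles are hypothetical.

```python
def acutance(profile: list[float]) -> float:
    """Mean squared difference between neighbouring intensity samples.

    This is an assumed proxy for acutance, not a standardized definition.
    """
    if len(profile) < 2:
        raise ValueError("at least two samples are needed to form a gradient")
    gradients = [b - a for a, b in zip(profile, profile[1:])]
    return sum(g * g for g in gradients) / len(gradients)


# Two hypothetical edges with identical contrast (black = 0.0, white = 1.0).
sharp_edge = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0]  # abrupt transition
soft_edge = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]   # gradual transition

print(f"sharp edge: {acutance(sharp_edge):.2f}")
print(f"soft edge:  {acutance(soft_edge):.2f}")
```

Both profiles run from the same black to the same white, so their contrast is equal; only the steepness of the transition, and with it the measured acutance, differs.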

Following this definition of optical resolution, digital resolution should – in theory – also refer to the pixel density of the image on display, written as the number of pixels per area (in PPI or PPCM) and maybe extended to consider the apparatus, its affordances and settings (such as pixel aspect ratio or color encoding schemes). However, in an everyday use of the term, the meaning of digital resolution is constantly confused or conflated to simply refer to a display’s standardized output or graphics display resolution: the number of distinct pixels the display features in each dimension (width and height). As a result, resolution has become an ambiguous term that no longer reflects the quality of the content that is on display. The use of the word ‘resolution’ in this context is a misnomer, since the number of pixels in each dimension of the display (e.g. 1920 × 1080) says absolutely nothing about the actual pixel density, the pixels per unit or the quality of the content on display, which may in fact be shown zoomed, stretched or letter-boxed and wrongly color encoded, to fit the display’s standard display resolution.(8)
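That the pixel dimensions of a display say nothing about its pixel density can be shown with a short calculation. The following Python sketch assumes square pixels and derives pixels per inch from the pixel dimensions and the physical diagonal; the function name `pixels_per_inch` and the two screen sizes (a 24-inch monitor and a 5.5-inch phone) are illustrative assumptions.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density along the diagonal, assuming square pixels."""
    if width_px <= 0 or height_px <= 0 or diagonal_in <= 0:
        raise ValueError("dimensions and diagonal must be positive")
    return math.hypot(width_px, height_px) / diagonal_in


# The same 'resolution' of 1920 x 1080 on two hypothetical screens.
monitor_ppi = pixels_per_inch(1920, 1080, 24.0)
phone_ppi = pixels_per_inch(1920, 1080, 5.5)

print(f"24-inch monitor: {monitor_ppi:.1f} PPI")
print(f"5.5-inch phone:  {phone_ppi:.1f} PPI")
```

Both screens would be sold under the same ‘resolution’, while the assumed phone packs more than four times as many pixels into each inch as the assumed monitor.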

Generally, these settings either ossify as requirements or de facto norms, or are notated as de jure – legally binding – standards by organizations such as the International Organization for Standardization (ISO). While this makes the process of resolving an image less complex, since it systematizes parts of the process, ultimately it also makes the process less transparent and more black-boxed. And it is not only institutions such as ISO that program, encode and regulate (standardize) the flows of data in and between our technologies, or that arrange the data in our machines following systems that underline efficiency or functionality. In fact, data is organized following either protocol or proprietary standards developed by technological oligarchs to include all kinds of inefficiencies that the user is not conditioned or even supposed to see, think about or question. These proprietary standards function as a type of controlling logic that re-encapsulates information inside various wrappers in which our data is (re-)encoded, edited and even deformed by nepotistic, (sometimes) covertly operating cartels for reasons like insidious data collection or locking the user into their proprietary software.

Just like in the realm of optics, a resolution does not just mean a final rendition of the data on the screen, but also involves the processes and affordances involved during its rendition – the tradeoffs inside the technological assemblage which record, produce and display the image (or other media, such as video, sound or 3D data). The current conflation of the meaning of resolution within the digital – as a result of which resolution only refers to the final dimensions the image is displayed at or in – obscures the complexities and politics at stake in the process of resolving, and, as a result, presents a limit to the understanding, using, compiling and reading of (imaging) data. Further theoretical refinements that elaborate on the usage and development of the term ‘resolution’ have been missing from debates on resolutions since it was ported from the field of optics, where it has been in use for two centuries. To garner a better understanding of our imaging technologies, the word ‘resolution’ itself needs to be resolved; or rather, it needs to be disentangled to refer not just to a final output, but to a more procedural construct.

Untie&&Dis/Solve: Digital Resolutions

Resolutions are made, and they involve procedural trade-offs. The more complex an image-processing technology is, the more actors its rendering entails, each following their own rules or standards to resolve an image, influencing the image’s final resolution. However, these actors and their inherent complexities are increasingly positioned beyond the fold of everyday settings, outside the afforded options of the interface. This is how resolutions do not just function as an interface effect but also as a hyperopic lens, obfuscating some of the most immediate stakes and possible alternative resolutions of media.

Unknowingly, the user and audience suffer from technological hyperopia; a condition of ‘farsightedness’ that does not allow the user to properly see what processes are taking place right under their nose. Rather, they just focus on a final end product. This is due to a shift from creating a resolution, to the setting of a resolution and, finally, to the imposition of resolutions as standard settings. Every time we press print, run, record, publish, render, we also press a button we could consider as ‘compromise’. Unfortunately, however, what we compromise – settings between or beyond standards, and which deliver other, maybe unwanted, but maybe also desirable outcomes – is often obfuscated. We need to shift our understanding of resolutions and see them as disputable norms, habitual compromises and expandable limits. Through challenging the actors involved in the setting of resolutions, the user can scale actively between increments of hyperopia and myopia. The question is: has the user become unable to construct their own settings, or has the user become oblivious to resolutions and their inherent compromises? And how has the user become this blind?

One answer to this question can be found in a redefinition of the screen. For a long time the screen was just a straightforward, typically passive object that acted as a veil: it would emit or reflect light. Today, the term ‘screen’ may still refer to its old configuration: a two-dimensional material surface or threshold that partitions one space from the next, or functions as a shield. As curator and theorist Christiane Paul writes: the screen acts as a mediator of (digital) light. However, over the past decades, technological innovations have transformed the notion of the screen into a wide variety of complex amalgamations.(9)

But over time, the screen has transformed into a navigational plane, rendering it similar to an interface or GUI. Media archaeologist Erkki Huhtamo deliberates on the possibility of describing the contemporary screen as a framed surface or container for displaying visual information that is controlled by the user and therefore not permanently part of the frame. He finally argues that the screen exists as a constantly changing, temporally constructed interface between the user and information.(10) As Galloway explains in The Interface Effect (2012), the interface is part of the processes of understanding, reading and translating our mediated experiences: it operates as an effect. In his book The Interface Envelope (2015), James Ash writes: ‘within digital interfaces, the specific mode of resolution of an object is carefully designed and tested in order to be as optimal as possible […]. In a digital interface, resolution is the outcome of transductions between a variety of objects including screened images, software, hardware and physical input devices, all of which are centrally involved in the design of particular objects within an interface.’(11)

Not only has the screen morphed from a flat surface to an interactive plane of navigation or interface, its content and the technologies that shape its content have developed into extremely complex systems. As Lev Manovich wrote back in 1995: ‘Rather than being a neutral medium of presenting information, the screen is aggressive. It functions to filter, to screen out, to take over, rendering nonexistent whatever is outside its frame’ – a degree of filtering that varies between different screen technologies. The screen is thus not simply a boundary plane. It has become an autonomous object that affects what is being displayed; a threshold mediating different systems or a process oscillating between states. The mobile screen itself is located in-between different applications and uses.(12)

In the computer, most of the interactions with our interfaces are mediated by software applications that act like platforms. These platforms do not take up the full screen, but instead exist within a window. While they all exist in the same screen, these windows follow their own sets of rules and standards; they exist next to and on top of each other like walled gardens. In a sense, these platforms are a modern version of frameworks; they offer a simulacrum of freedom and possibility. In the case of the platform Facebook, for example, content is reformatted and deformed: Facebook recompresses and reformats any posted data, text, sound or images, while it has rules for the number of characters, and what characters and compressions can be used or uploaded. Facebook has even designed its own emojis for the platform. In short, the platform Facebook enforces its own resolutions. It is important to realize that the screen is in a constant state of assemblage: delimiting and demarcating our ways of seeing and instead expanding the axial and lateral resolution to layers that are usually obfuscated or uncharted.

It is imperative to rethink the definition of ‘resolution’ and expand it from a simple measure of acutance. Because what is resolved on the screen and what is not depends not just on the material qualities of the screen or display, or the signal it receives, but also on the processes, platforms, standards and interfaces involved in setting these measures, behind or in the depths beyond the screen or display.

So while in the digital realm, the term ‘resolution’ is often simplified to just mean a number – signifying, say, the width and height of a screen – the critical understanding of the term ‘resolution’ I propose also considers a depth (beyond its outcome). In this ‘depth’, beyond a screen (or when including audible technologies: membrane), protocols and other (proprietary) standards, together with the technological interfaces and the objects’ materialities and affordances, form a final resolution.

The cost of all of these media resolutions – standards encapsulated inside standard encapsulations – is that we have gradually become unaware of the choices and compromises they represent. We need to realize that a resolution is never a neutral settlement, but an outcome that carries historical, economic and political ideologies which once were implemented by choice. While resolutions compromise, obfuscate and obscure particular visual outcomes, the processes of standardization and upgrade culture as a whole also compromise particular technological affordances – creating new ways of seeing or perceiving – altogether. And it is these alternative technologies of seeing, or obscured and deleted settings, that also need to be considered as part of resolution studies.


Over time, the screen has transformed into a navigational plane, rendering it similar to an interface or GUI. Media archaeologist Erkki Huhtamo deliberates on the possibility of describing the contemporary screen as a framed surface or container for displaying visual information that is controlled by the user and therefore not permanently part of the frame. He finally argues that the screen exists as a constantly changing, temporally constructed interface between the user and information.(10) As Galloway explains in The Interface Effect (2012), the interface is part of the processes of understanding, reading and translating our mediated experiences: it operates as an effect. In his book The Interface Envelope (2015), James Ash writes: ‘within digital interfaces, the specific mode of resolution of an object is carefully designed and tested in order to be as optimal as possible […]. In a digital interface, resolution is the outcome of transductions between a variety of objects including screened images, software, hardware and physical input devices, all of which are centrally involved in the design of particular objects within an interface.’(11)

Not only has the screen morphed from a flat surface to an interactive plane of navigation or interface, its content and the technologies that shape its content have developed into extremely complex systems. As Lev Manovich wrote back in 1995: ‘Rather than being a neutral medium of presenting information, the screen is aggressive. It functions to filter, to screen out, to take over, rendering nonexistent whatever is outside its frame’ – a degree of filtering that varies between different screen technologies. The screen is thus not simply a boundary plane. It has become an autonomous object that affects what is being displayed; a threshold mediating different systems or a process oscillating between states. The mobile screen itself is located in-between different applications and uses.(12)

In the computer, most of the interactions with our interfaces are mediated by software applications that act like platforms. These platforms do not take up the full screen, but instead exist within a window. While they all exist in the same screen, these windows follow their own sets of rules and standards; they exist next to and on top of each other like walled gardens. In a sense, these platforms are a modern version of frameworks; they offer a simulacrum of freedom and possibility. In the case of the platform Facebook, for example, content is reformatted and deformed: Facebook recompresses and reformats any posted data, text, sound or images, while it has rules for the number of characters, and what characters and compressions can be used or uploaded. Facebook has even designed its own emojis for the platform. In short, the platform Facebook enforces its own resolutions. It is important to realize that the screen is in a constant state of assemblage, delimiting and demarcating our ways of seeing, and to expand instead the axial and lateral resolution to layers that are usually obfuscated or uncharted.

It is imperative to rethink the definition of ‘resolution’ and expand it from a simple measure of acutance. Because what is resolved on the screen and what is not depends not just on the material qualities of the screen or display, or the signal it receives, but also on the processes, platforms, standards and interfaces involved in setting these measures, behind or in the depths beyond the screen or display.

So while in the digital realm, the term ‘resolution’ is often simplified to just mean a number – signifying, say, the width and height of a screen – the critical understanding of the term ‘resolution’ I propose also considers a depth (beyond its outcome). In this ‘depth’, beyond a screen (or when including audible technologies: membrane), protocols and other (proprietary) standards, together with the technological interfaces and the objects’ materialities and affordances, form a final resolution.

The cost of all of these media resolutions – standards encapsulated inside standard encapsulations – is that we have gradually become unaware of the choices and compromises they represent. We need to realize that a resolution is never a neutral settlement, but an outcome that carries historical, economic and political ideologies which once were implemented by choice. While resolutions compromise, obfuscate and obscure particular visual outcomes, the processes of standardization and upgrade culture as a whole also compromise particular technological affordances – creating new ways of seeing or perceiving – altogether. And it is these alternative technologies of seeing, or obscured and deleted settings, that also need to be considered as part of resolution studies.

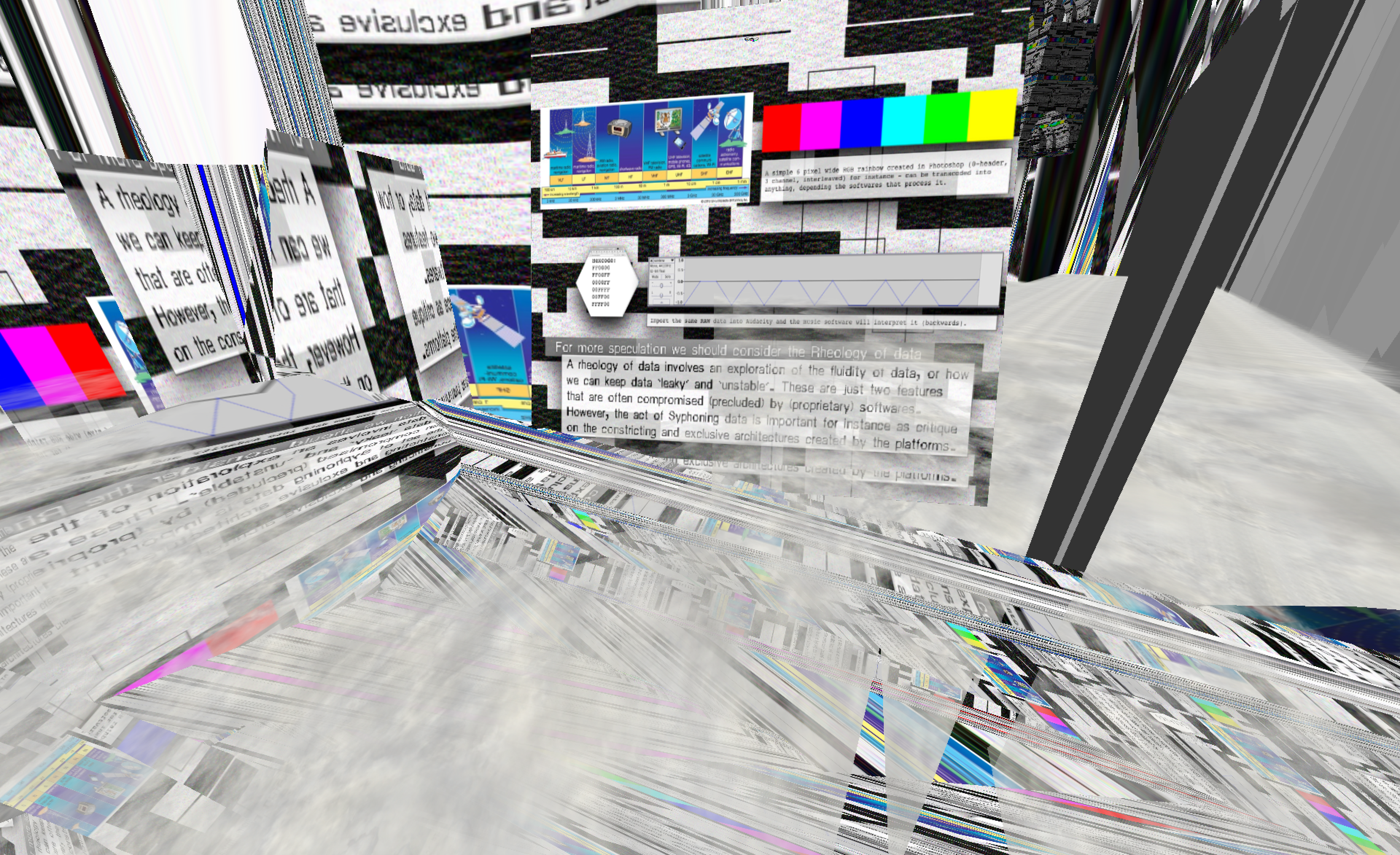

A Rheology of Data

In 1941, the Argentinian writer Jorge Luis Borges published El Jardín de senderos que se bifurcan (The Garden of Forking Paths), containing the short story ‘The Library of Babel’. In this story, Borges describes a universe in the form of a vast library, containing all possible books following a few simple rules: every book consists of 410 pages, each page displays 40 lines and each line contains approximately 80 letters. Each book features any combination of 25 orthographic symbols: 22 letters, a period (full stop), a comma and a space. While the exact number of books in the Library of Babel can be calculated, Borges says the library is ‘indefinite and perhaps infinite’.
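The calculation alluded to here can be sketched in a few lines. The following is a minimal illustration, assuming that every line holds exactly 80 characters (Borges only says ‘approximately’) and that every position can take any of the 25 symbols; the function names are mine and purely illustrative. Rather than writing the number out, the sketch only counts how many decimal digits it would take to do so.

```python
import math

# Rules of the library as given in the story.
PAGES_PER_BOOK = 410
LINES_PER_PAGE = 40
LETTERS_PER_LINE = 80  # assumption: "approximately 80" is treated as exactly 80
SYMBOLS = 25           # 22 letters, a period, a comma and a space


def characters_per_book() -> int:
    """Number of character positions in a single book."""
    return PAGES_PER_BOOK * LINES_PER_PAGE * LETTERS_PER_LINE


def decimal_digits_in_book_count() -> int:
    """Digits needed to write SYMBOLS ** characters_per_book() in base 10.

    A positive integer N has floor(log10(N)) + 1 decimal digits, and
    log10(SYMBOLS ** n) equals n * log10(SYMBOLS).
    """
    return math.floor(characters_per_book() * math.log10(SYMBOLS)) + 1


if __name__ == "__main__":
    print("characters per book:", characters_per_book())
    print("digits in the number of books:", decimal_digits_in_book_count())
```

Under these assumptions the library is finite, yet the number of its books runs to well over a million digits, which may be why ‘indefinite’ feels like the more honest word.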

The fascinating part of this story starts when Borges describes the behaviour of the visitors to the library. In particular, the Purifiers, who arbitrarily destroy books that do not follow the Purifiers’ rules of language or decoding. The word ‘arbitrarily’ is important here, because it references the fluidity of the library; the openness to different languages and other systems of interpretation. One book may, for instance, offer an index to the next book, or a system of decoding – a ‘bridge’ – to read the next. This provokes the question: how do the Purifiers know they did not just read the books in the wrong order? How can they be certain that they were not just lacking an index or a codex that would help them access the books to be purified (burned)?

When I learned about NASA’s use of sonification – the process of displaying any type of data or measurement as sound, or as it says on the NASA website: ‘the transmission of information via sound’, which the space agency uses to learn more about the universe – I realized that with the ‘right’ listening device, anything can be heard. Even a rainbow. This does not always mean it makes sense to the listener; rather, it signals the willingness of contemporary space scientists to build bridges between different domains – something I later understood as a ‘rheology of data’.
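To make the idea of sonification a little more tangible, here is a minimal sketch of one possible mapping: each measurement in a list becomes a short sine tone whose pitch rises with the value. This is not NASA’s method; the frequency range, the tone length and the names `value_to_frequency` and `sonify` are assumptions chosen only for illustration, and the code relies on nothing but Python’s standard library.

```python
import io
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second (assumed, CD-quality rate)


def value_to_frequency(value, lo, hi, f_min=220.0, f_max=880.0):
    """Linearly map a value in [lo, hi] to a pitch in [f_min, f_max] Hz."""
    if hi == lo:
        return f_min
    t = (value - lo) / (hi - lo)
    return f_min + t * (f_max - f_min)


def sonify(values, seconds_per_value=0.25):
    """Return the bytes of a mono 16-bit WAV file, one tone per value."""
    lo, hi = min(values), max(values)
    samples_per_value = int(SAMPLE_RATE * seconds_per_value)
    frames = bytearray()
    for v in values:
        freq = value_to_frequency(v, lo, hi)
        for n in range(samples_per_value):
            sample = math.sin(2 * math.pi * freq * n / SAMPLE_RATE)
            # Scale to half of the 16-bit range to keep the tone quiet.
            frames += struct.pack("<h", int(sample * 32767 * 0.5))
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(bytes(frames))
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical readings, e.g. temperatures over a day.
    readings = [12.0, 14.5, 19.0, 23.5, 21.0, 16.0]
    with open("readings.wav", "wb") as f:
        f.write(sonify(readings))
```

The choice of a linear mapping is itself a resolution in the sense used here: a logarithmic scale, or a mapping to loudness rather than pitch, would resolve the same readings into a different listening experience.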

Rheology is a term from the realm of physics, or, to be more precise, from mechanics, where it is used to describe the flow of matter – primarily in a liquid state, but also ‘soft solids’, or solids that respond in a plastic way (rather than deforming elastically in response to an applied force). With a ‘rheology of data’ I thus mean a study of how data can be read and displayed in completely different forms, depending on the context – software or interface. A rheology of data facilitates a deformation and flow of the matter of data. It shows that there is a possibility to ‘push’ data into different applications, and to show data in different forms. Thinking in terms of a ‘rheology of data’ became meaningful to me when I first ran the open source Mac OS X plugin technology Syphon (first released in 2010 as open video tap, but later developed by Anton Marini in collaboration with Tom Butterworth as Syphon). With the help of Syphon, I could suddenly make certain applications (Adobe After Effects, Modul8, Processing or openFrameworks) share information, such as frames – full frame rate video or stills – with one another, in real time. Syphon allowed me to project my slides and video as textures on top of 3D objects (from Modul8 to Unity). The plugin taught me that my thinking in software environments or ‘walled gardens’ was flawed, or at least limiting. Software is much more interesting when I can leak and push my content through the walls of software, which normally work as closed architectures. Syphon showed me that data is more fluid than the ways in which I am conditioned to perceive and use it; data can be resolved differently, if I make it so.

And this is where I want to come back to Jon Satrom’s QTzrk. QTzrk is a name that is not supposed to be spoken; it is a name referencing computer language, leaking back into human spoken language. In QTzrk, Satrom already prefigures what Syphon facilitates: the video shows a video software (QuickTime 7) leaking frames, data, from its container into a fluid puddle of data.

In the realm of computation, though, there is still very little fluidity. And the Library of Babel still remains an asynchronous metaphor for our contemporary approach to computation. Computer programs only function when they are fed certain forms of formatted data; data for which the machine has codecs installed. (The value of) RAW, non-formatted or unprocessed data is easily dismissed because it is often hard to open or read. There seems to be hardly any freedom in transgressing this. The insights I gained from reading about NASA opened a new approach in my computational thinking: I started teaching my students not just about the electromagnetic spectrum, but also about how NASA, through sonification and other transcoding techniques, could listen to the weather, hoping they would understand the freedom they can take in the processes of perceiving and interpreting data.

Only the contemporary Purifiers – software, its users and the computer system in general – enforce the rule that when data is illegible, it must be invalid. Take, for instance, Satrom’s QTlets, which have, after a life span of a little over five years, become completely obsolete and unplayable, at least in my OS. In reality, this simply means that I do not have the right decoder, which is no longer available or supported. In general, it means that the string of data is not run through the right program or read in the right language, which would translate its data into a legible form of information. Data is not solid; it can flow from one context or environment to the next, changing both its resolution and its meaning – which can be both a danger and a blessing in disguise.
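A small sketch may illustrate the difference between illegible and invalid. The same six bytes are rejected by one text decoder, read without complaint by another, and can just as well be laid out as a tiny grid of grayscale pixel values. The payload and the helper names `try_decode` and `as_grayscale_rows` are hypothetical, chosen only for this illustration.

```python
from typing import List, Optional

# A word from Borges's title, stored with the Latin-1 encoding (assumed payload).
PAYLOAD = "Jardín".encode("latin-1")


def try_decode(data: bytes, encoding: str) -> Optional[str]:
    """Return the decoded text, or None if this decoder rejects the bytes."""
    try:
        return data.decode(encoding)
    except UnicodeDecodeError:
        return None


def as_grayscale_rows(data: bytes, width: int) -> List[List[int]]:
    """Read the same bytes as rows of 8-bit brightness values (0 to 255)."""
    return [list(data[i:i + width]) for i in range(0, len(data), width)]


if __name__ == "__main__":
    # The UTF-8 decoder acts as a Purifier: it declares the bytes invalid.
    print("utf-8:  ", try_decode(PAYLOAD, "utf-8"))
    # With the matching 'bridge', the same bytes are perfectly legible.
    print("latin-1:", try_decode(PAYLOAD, "latin-1"))
    # Pushed into another context, they resolve as a 3 x 2 image.
    print("pixels: ", as_grayscale_rows(PAYLOAD, 3))
```

Nothing in the bytes themselves changes between the three readings; only the decoder does.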
