Beyond Resolution

An introduction to my journey into resolution studies, as presented at #34C3, Leipzig, Germany || December 2017.

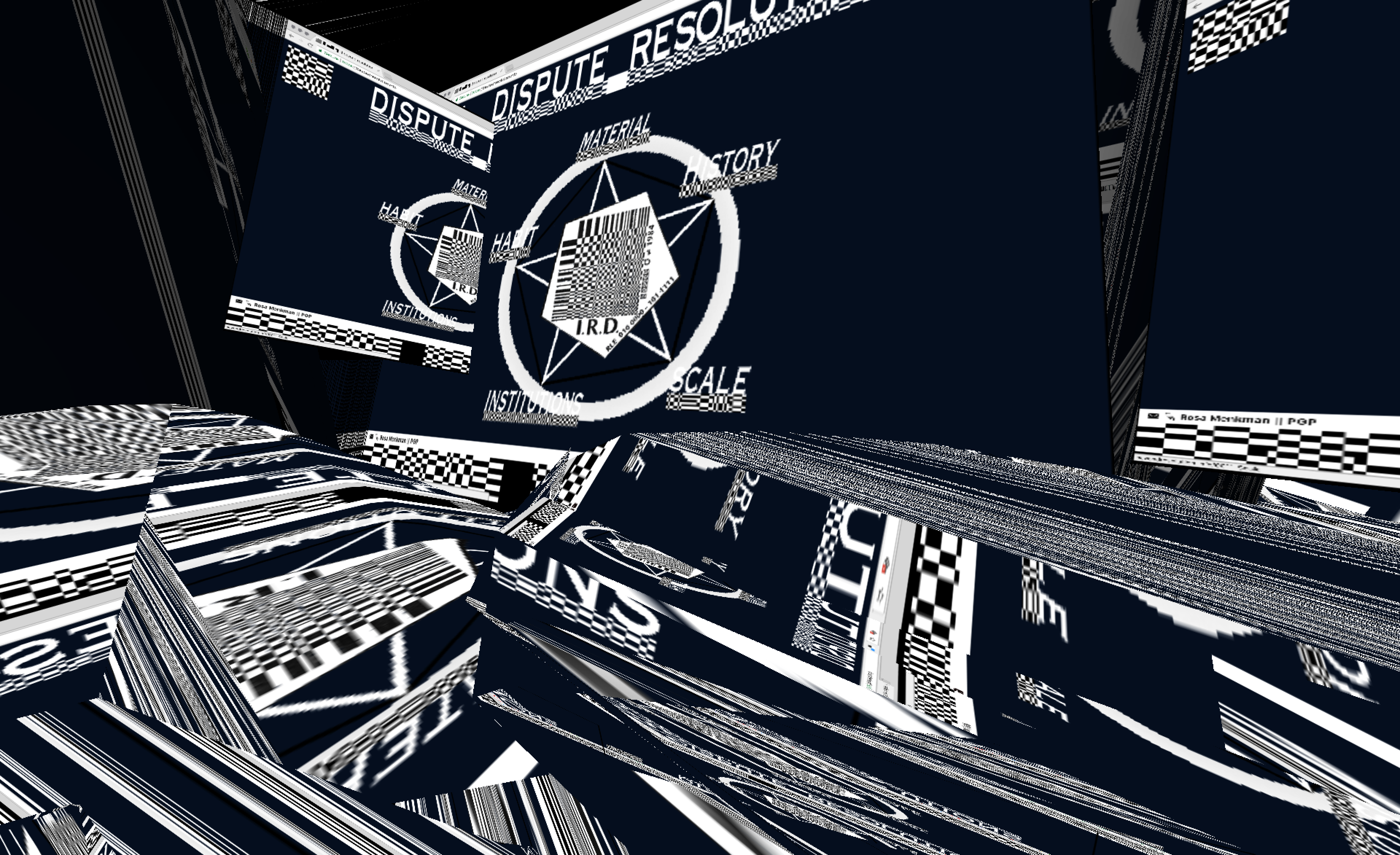

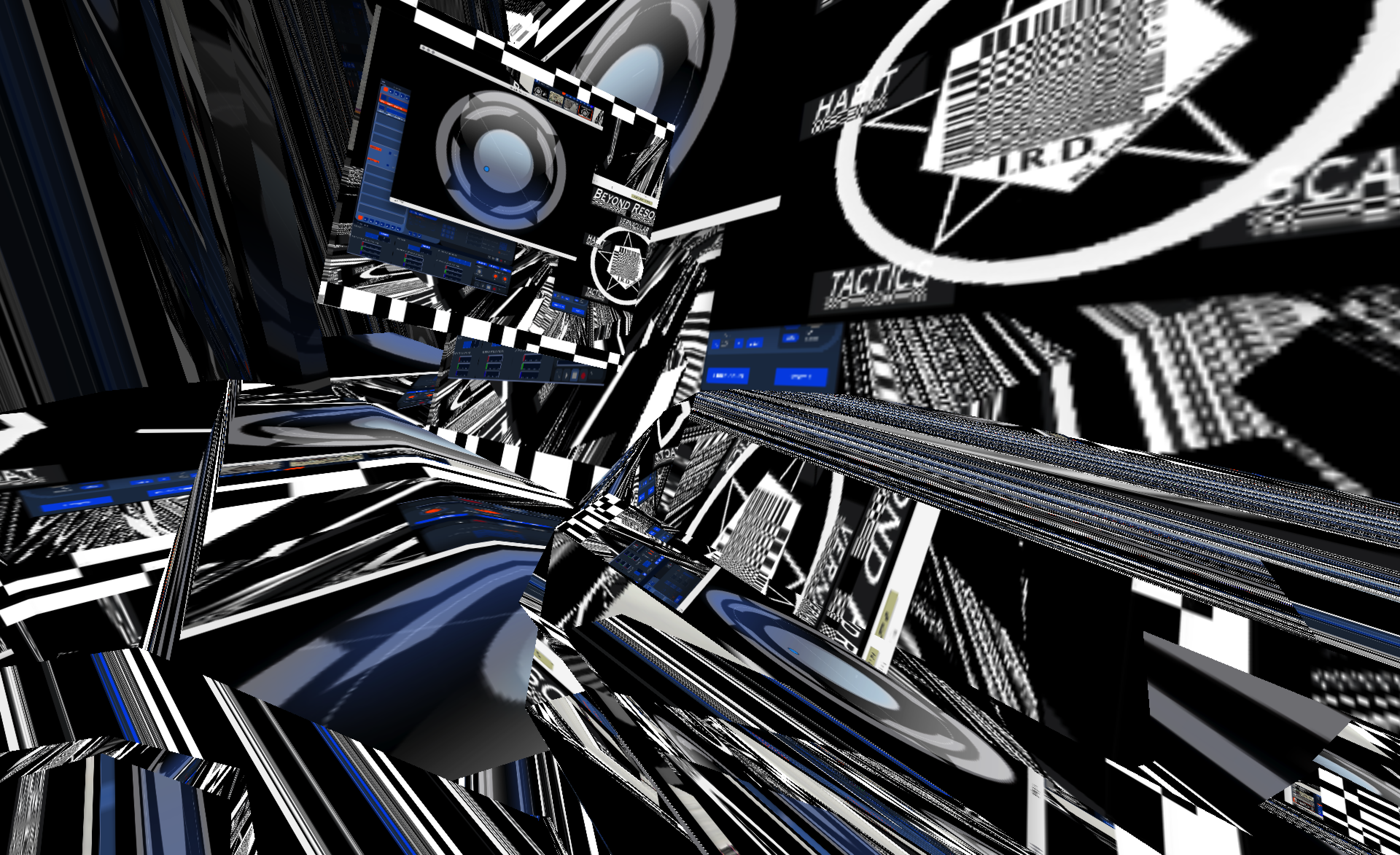

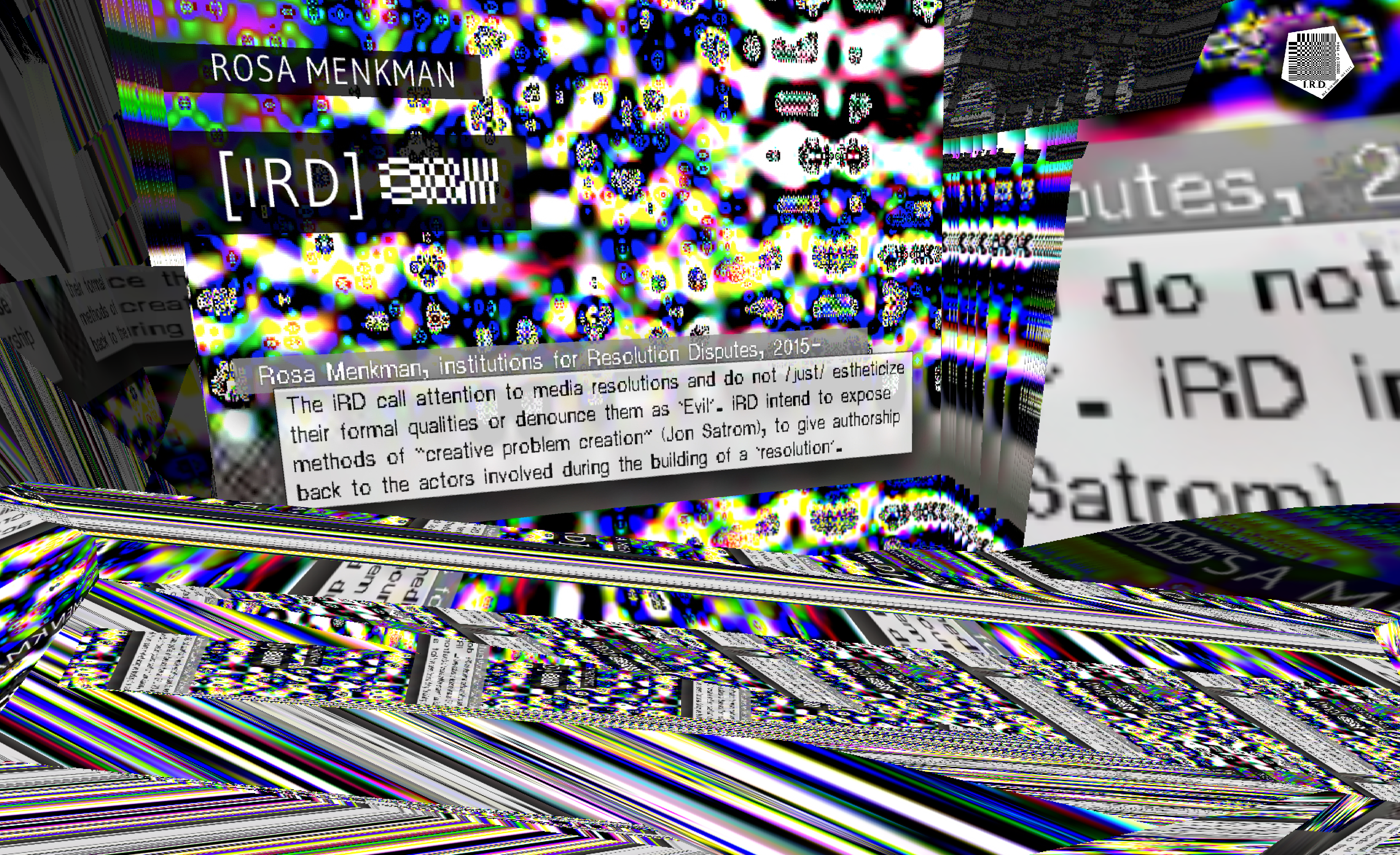

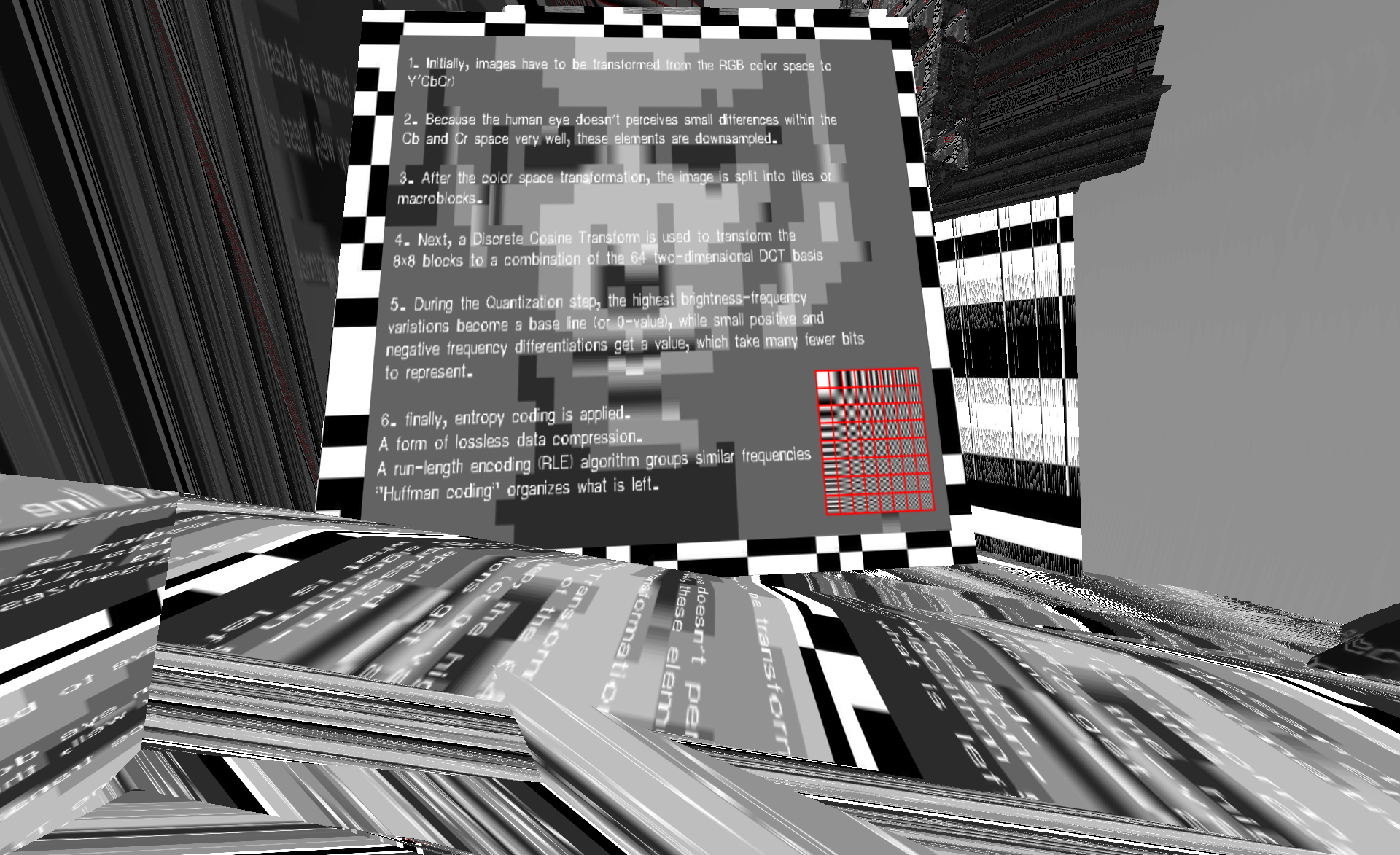

I opened the »institutions of Resolution Disputes« [i.R.D.] on March 28, 2015, as a solo show, hosted by Transfer Gallery in New York City. On September 9, 2017, its follow-up »Behind White Shadows« also opened in Transfer. At the heart of both shows lies research on compressions, with one central research object: the Discrete Cosine Transform (DCT) algorithm, the core of the JPEG (and other) compressions. Together, the two exhibitions form a diptych that is this publication, titled Beyond Resolution.

By going beyond resolution, I attempt to uncover and elucidate how resolutions constantly inform both machine vision and human perception. I unpack the ways resolutions organize our contemporary image processing technologies, emphasizing that resolutions not only organize how and what gets seen, but also what images, settings, ways of rendering and points of view are forgotten, obfuscated, or simply dismissed and unsupported.

The journey that Beyond Resolution also represents started on a not-so-fine Saturday morning in early January 2015, when I signed the contract for a research fellowship to write a book on Resolution Studies. For this opportunity I immediately moved back from London to Amsterdam. Unfortunately, and out of the blue, three days before my contract was due to start, my job was put on hold indefinitely. Bureaucratic management dropped me into a financial and ultimately emotional black hole.

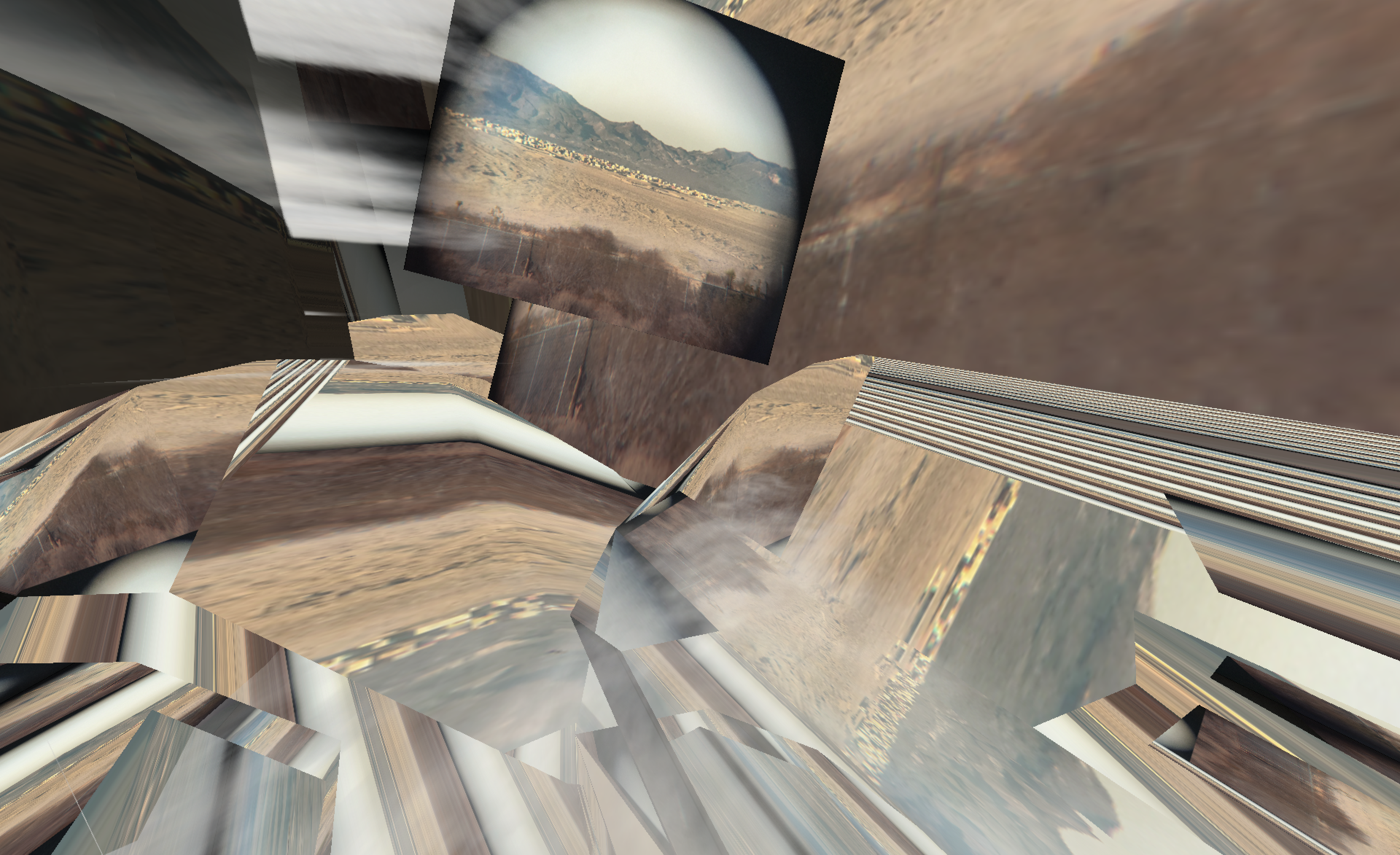

When I finally re-organised my finances, I went to the Mojave desert, to take some time. There, from the porch of a little cabin looking out over a dust road, I could feel the infrasound produced by bombs dropped on »Little Baghdad«, a Twentynine Palms military training ground just miles away on the slope of a hill. I became fascinated with these obscure military spaces – where things happened beyond my understanding, yet in my direct field of perception. It reminded me of Trevor Paglen’s book I Could Tell You But Then You Would Have to Be Destroyed by Me (2007). Paglen’s main field of research is mass surveillance and data collection. His work often deals with photography as a mode of non-resolved vision and the production of invisible images. This memory inspired me to re-start my research on resolutions, this time independently, under the title Beyond Resolution.

Both exhibitions came with their own custom patch – the i.R.D. patch (black on black) provided a key to the five encrypted institutions, while the patch for Behind White Shadows featured Lenna Sjööblom aka Lena Söderberg (glow in the dark on white) – a symbol for the ongoing, yet too often ignored racism embedded within the development of image processing technologies.

When I came back to Europe, I started my residency at Schloss Solitude and picked up a teaching position at Merz Akademie, where I developed a full colloquium around Resolution Studies as Artistic Practice. Here I shared and reworked Beyond Resolution with my students and extended it with new theory and practice.

In Beyond Resolution, the i.R.D. (institutions of Resolution Disputes) conduct research and critique the consequences of setting resolutions by following a pentagon of contexts: the effects of scaling and the habitual, material, genealogical, and tactical use (and abuse) by the settings, protocols, and affordances behind our resolutions.

In 2017, while teaching in another art school, within a – very traditional – department focused on painting and sculpture, two students separately asked me how art can exist in the digital, when there is an inherent lack of »emotion« within the material. One student even added that digital material is »cold.«

I responded with a counter question: Why do you think classic materials, such as paint or clay, are inherently warm or emotional? By doing so, I hoped to start a dialogue about how their work exists as a combination of physical characteristics and signifying strategies. I tried to make them think not just in terms of material, but in terms of materiality, which is not fixed, but emerges from a set of norms and expectations, traditions, rules and finally the meaning the artist and writer add themselves. I tried to explain that we – as artists – can play with this constellation and consequently, even morph and transform the materiality of, for instance, paint.

The postmodern literary critic Katherine Hayles re-conceptualized materiality as ‘the interplay between a text’s physical characteristics and its signifying strategies’. Rather than suggesting a medium’s materiality as fixed in physicality, Hayles’ re-definition is useful because it «opens the possibility of considering texts as embodied entities while still maintaining a central focus on interpretation. In this view of materiality, it is not merely an inert collection of physical properties but a dynamic quality that emerges from the interplay between the text as a physical artifact, its conceptual content, and the interpretive activities of readers and writers.»

The students lacked an understanding of »materiality« in general – let alone of digital materiality. They had no analytical training to understand how materials work, or how digital media and platforms influence and program us, speaking to our habits by using a particularly reflexive vernacular or dialect.

I was shocked: digital literacy is not trivial; it is a prerequisite for agency in our contemporary society. To be able to ignore or unsee the infrastructures that govern our digital technologies, and thus our daily realities, or to presume these infrastructures are ‘hidden’ or ‘magic,’ is an act reserved only for the digitally deprived or the highly privileged.

I am convinced that (digital) illiteracy is the result of lacking education in primary and high school. Even if these students have not studied the digital previously, I would expect some grasp of analytical and hopefully experimental tools as part of their creative thinking strategies. This makes me wonder if today’s teachers are not sufficiently literate themselves, or alternatively if we are not teaching our students the tools and ways of thinking necessary to understand and engage present forms of ubiquitous information processing.

As a personal reference, I recall that decades ago, when I was ten years old, I dreamed of listening to sound in space. In fact, this is what I wrote on the first page of my diary (1993). I also remember clearly when my teacher stole this dream from me, the moment she told me that because of a lack of matter, there is no sound in space. She concluded that the research I dreamed of was impossible, and my dream shattered.

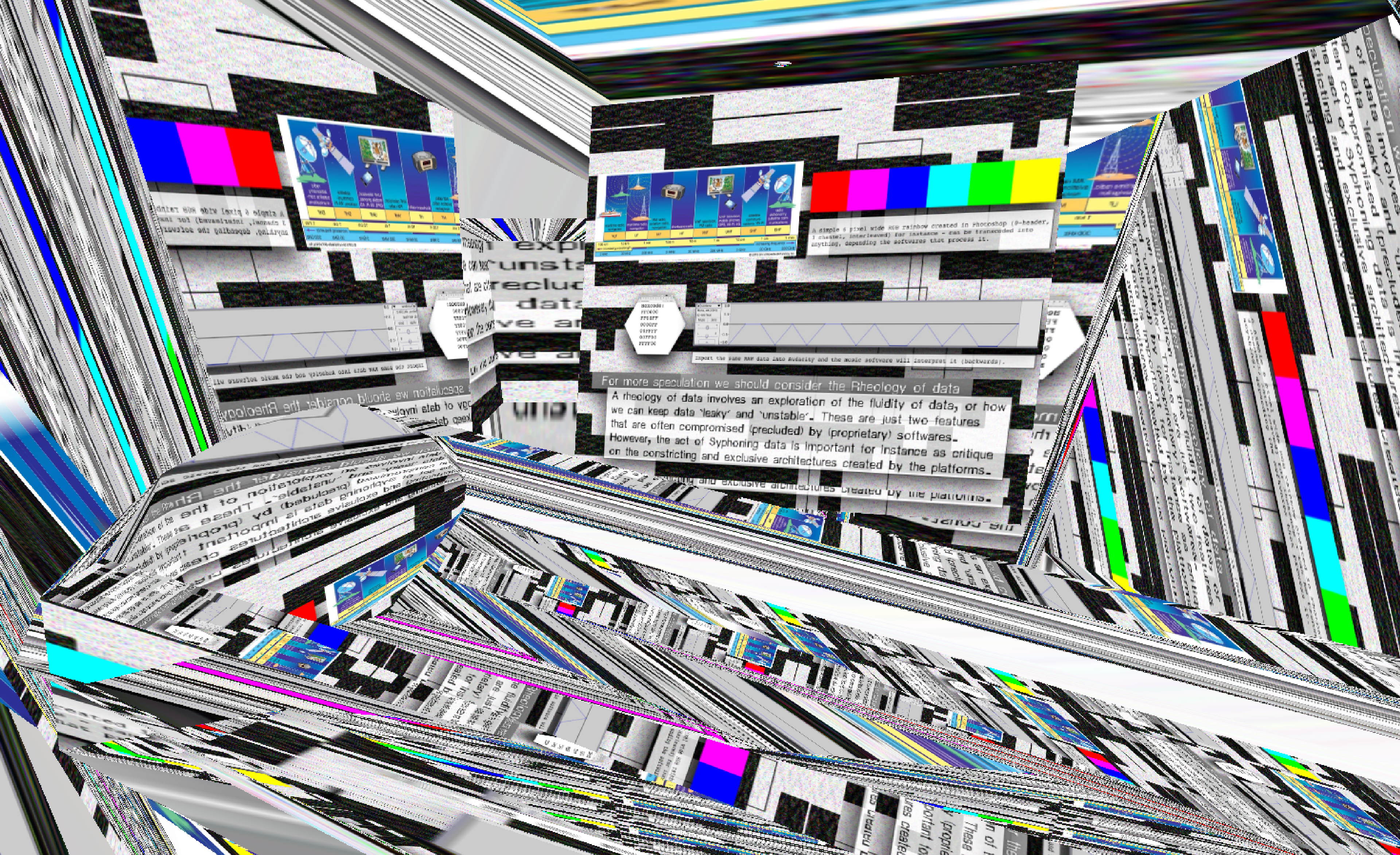

Only years later, when I was introduced to microwaves and NASA’s use of sonification – the process of displaying any type of data or measurement as sound – did I understand that with the right listening device, anything can be heard. From then on I started teaching my students not just about the electromagnetic spectrum, but also how they, through sonification and other transcoding techniques, could listen to rainbows and the weather. The technical challenges associated with this I call »a rheology of data«. Here, rheology is a term borrowed from the branch of physics that deals with the deformation and flow of matter.
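To make the idea of sonification a little more concrete, here is a minimal sketch of one possible transcoding: each measurement in a list is mapped linearly onto a pitch and rendered as a short sine tone. The function names `sonify` and `write_wav`, the frequency range, and the temperature values are all assumptions made for illustration; this is not NASA's method, only one simple way a series of numbers can be turned into something audible.

```python
import math
import struct
import wave


def sonify(values, low_hz=220.0, high_hz=880.0, note_seconds=0.25, sample_rate=8000):
    """Map each value linearly onto a pitch; return 16-bit mono samples."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid dividing by zero when all values are equal
    samples_per_note = int(note_seconds * sample_rate)
    samples = []
    for value in values:
        freq = low_hz + (value - lo) / span * (high_hz - low_hz)
        for n in range(samples_per_note):
            amplitude = math.sin(2 * math.pi * freq * n / sample_rate)
            samples.append(int(amplitude * 32767 * 0.5))  # half volume
    return samples


def write_wav(path, samples, sample_rate=8000):
    """Store the samples as a mono, 16-bit WAV file."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack("<%dh" % len(samples), *samples))


# Hypothetical weather readings (degrees Celsius), invented for this sketch.
temperatures = [3.1, 4.0, 7.5, 12.2, 9.8, 5.4]
tones = sonify(temperatures)
# write_wav("weather.wav", tones)  # uncomment to listen to the result
```

The choice of a linear value-to-pitch mapping is itself a setting: a logarithmic mapping, or a mapping onto volume or rhythm instead of pitch, would make the same data sound entirely different.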

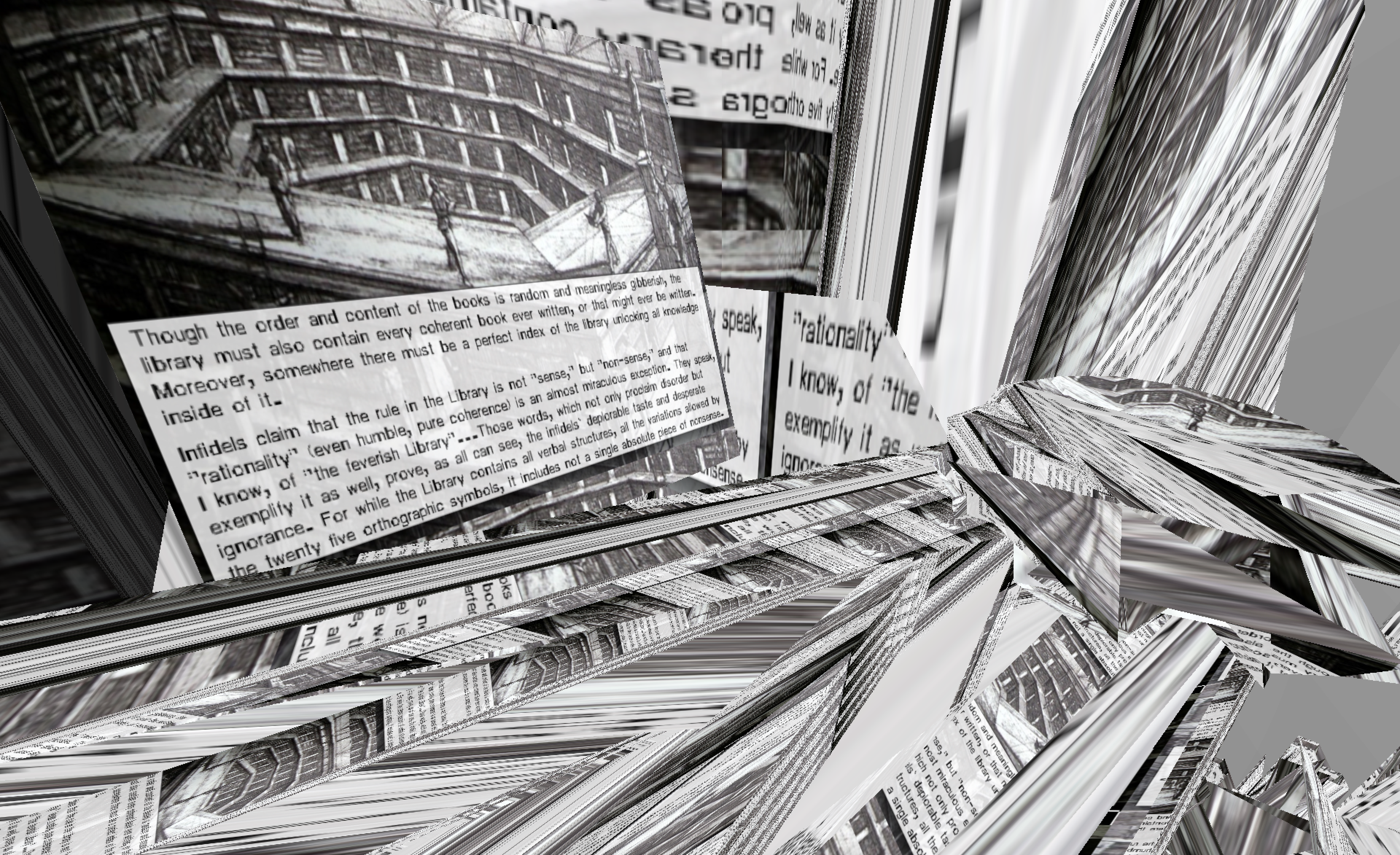

The Argentinian writer Jorge Luis Borges’s 1941 short story »The Library of Babel« is an amazing inspiration to me. In the story, the author describes a universe in the form of a vast library, containing all the possible books following these simple rules: every book consists of 410 pages, each page displays 40 lines, each line has approximately 80 letters, and every letter is one of 25 orthographic symbols: 22 letters, a period, a comma, and a space. While the exact number of books of the Library of Babel has been calculated, Borges describes the library as endless. I think Borges was aware of the morphing quality of the materiality of books and that, as a consequence, the library is full of books that read like nonsense.
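The calculation behind that number can be sketched in a few lines. The sketch assumes that every line holds exactly 80 characters (Borges only says approximately) and that every combination of the 25 symbols counts as a distinct book. The number of books itself is far too large to print, so the code only counts how many decimal digits it has.

```python
import math

PAGES_PER_BOOK = 410
LINES_PER_PAGE = 40
CHARS_PER_LINE = 80  # assumption: "approximately 80" taken as exactly 80
SYMBOLS = 25

chars_per_book = PAGES_PER_BOOK * LINES_PER_PAGE * CHARS_PER_LINE

# Number of distinct books = SYMBOLS ** chars_per_book.
# Its count of decimal digits follows from the base-10 logarithm.
digits_in_book_count = math.floor(chars_per_book * math.log10(SYMBOLS)) + 1

print(chars_per_book)        # 1312000 characters per book
print(digits_in_book_count)  # 1834098 digits in the number of books
```

Under these assumptions the library holds 25 to the power of 1,312,000 books: a finite number, but one that takes more than 1.8 million digits just to write down.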

The fascinating part of this story starts when Borges describes the behavior of visitors of the library. In particular, the Purifiers, who arbitrarily destroy books that do not follow their rules of language or decoding. The word arbitrarily is important here, because it references the fluidity of the library; the openness to different languages and other systems of interpretation. What I read here is a very clear, asynchronous metaphor for our contemporary approach to data: most people are only interested in information and dismiss (the value of) RAW, non-formatted or unprocessed data (RAW data is of course an oxymoron, as data is never RAW but always a cultural object in itself). As a result the Purifiers do not accept or realise that when something is illegible, it does not mean that it is just garbage. It can simply mean there is no key, or that the string of data – the book – is not run through the right program or read in the right language, one that decodes its data into human-legible information.
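A small, everyday instance of this: the same string of bytes reads as a name or as apparent nonsense depending on which decoding is applied. The sketch below is only an illustration; the variable names are hypothetical, and text encodings are just one of many »languages« a string of data can be read in.

```python
# The same bytes, read through two different "languages" (text encodings).
stored = "Söderberg".encode("utf-8")  # the data as it sits on disk

read_right = stored.decode("utf-8")    # decoded with the matching key
read_wrong = stored.decode("latin-1")  # decoded with a different key

print(read_right)  # Söderberg
print(read_wrong)  # SÃ¶derberg
```

Nothing in the bytes changed between the two readings; only the interpretation did. The »garbage« in the second line still contains everything needed to recover the first.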

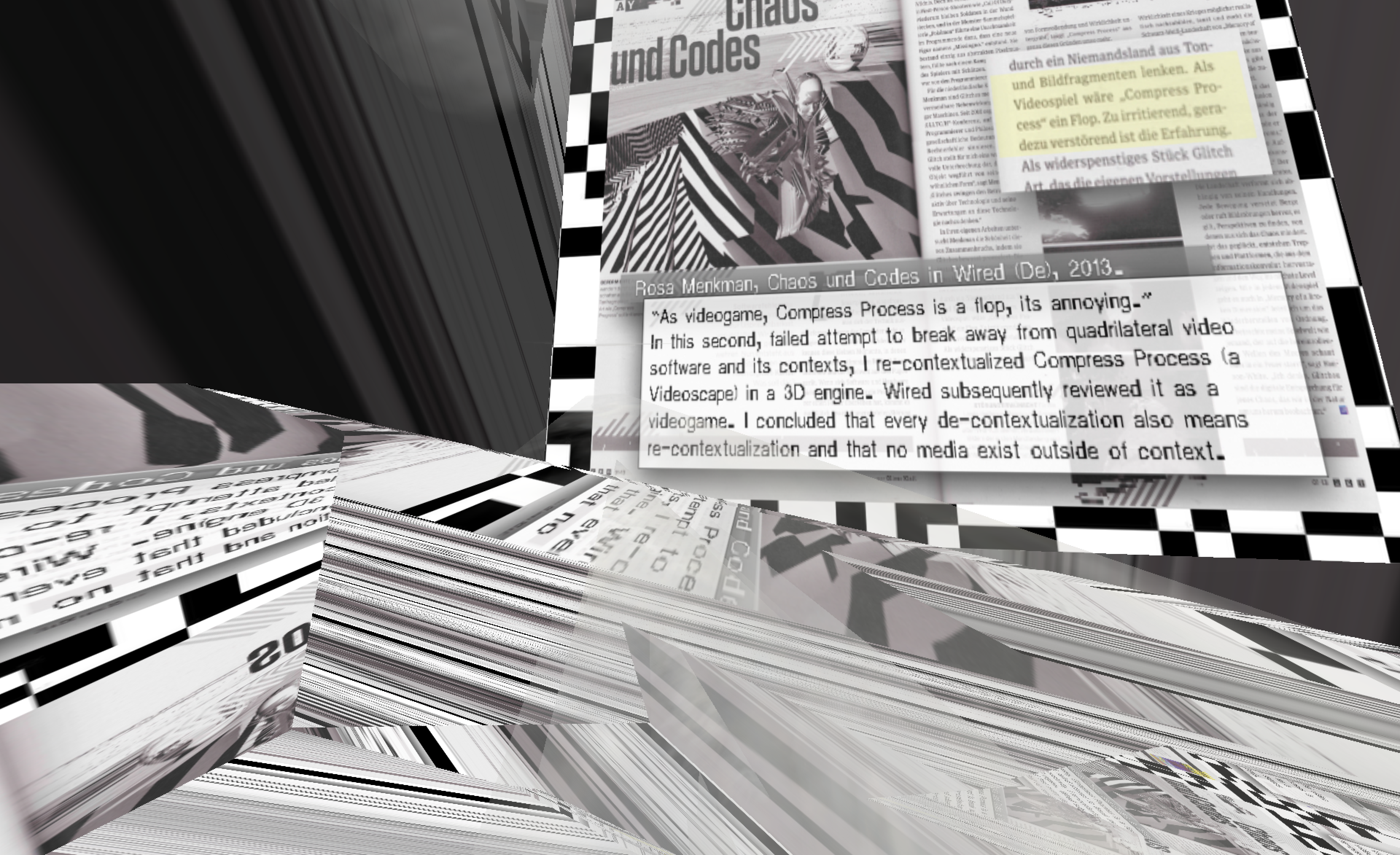

Besides the rheology of data, I worked with my students to imagine what syphoning could mean to our computational experience. Syphoning, a term borrowed from the open source Mac OS X plugin technology Syphon (by Tom Butterworth and Anton Marini), refers to certain applications sharing information, such as frames – full frame rate video or stills – with one another in real time. For instance, Syphon allows me to project my slides or video as textures on top of 3D objects (from Modul8 into Unity). This allowed me to, at least partially, escape otherwise flat, quadrilateral interfaces of (digital) images and video, and leak my content through the walls of applications.

In the field of computation – and especially in image processing – all renders follow quadrilateral, ecology dependent, standard solutions following tradeoffs (compromises) that deal with efficiency and functionality in the realms of storage, processing and transmission. However, what I am interested in is the creation of circles, pentagons, and other more organic manifolds! If this were possible, our computational machines would work entirely differently; we could create modular or even syphoning relationships between text files, and as demonstrated in Chicago glitch artist Jon Satrom’s 2011 QTzrk installation, videos could have uneven corners, multiple timelines, and changing soundtracks.

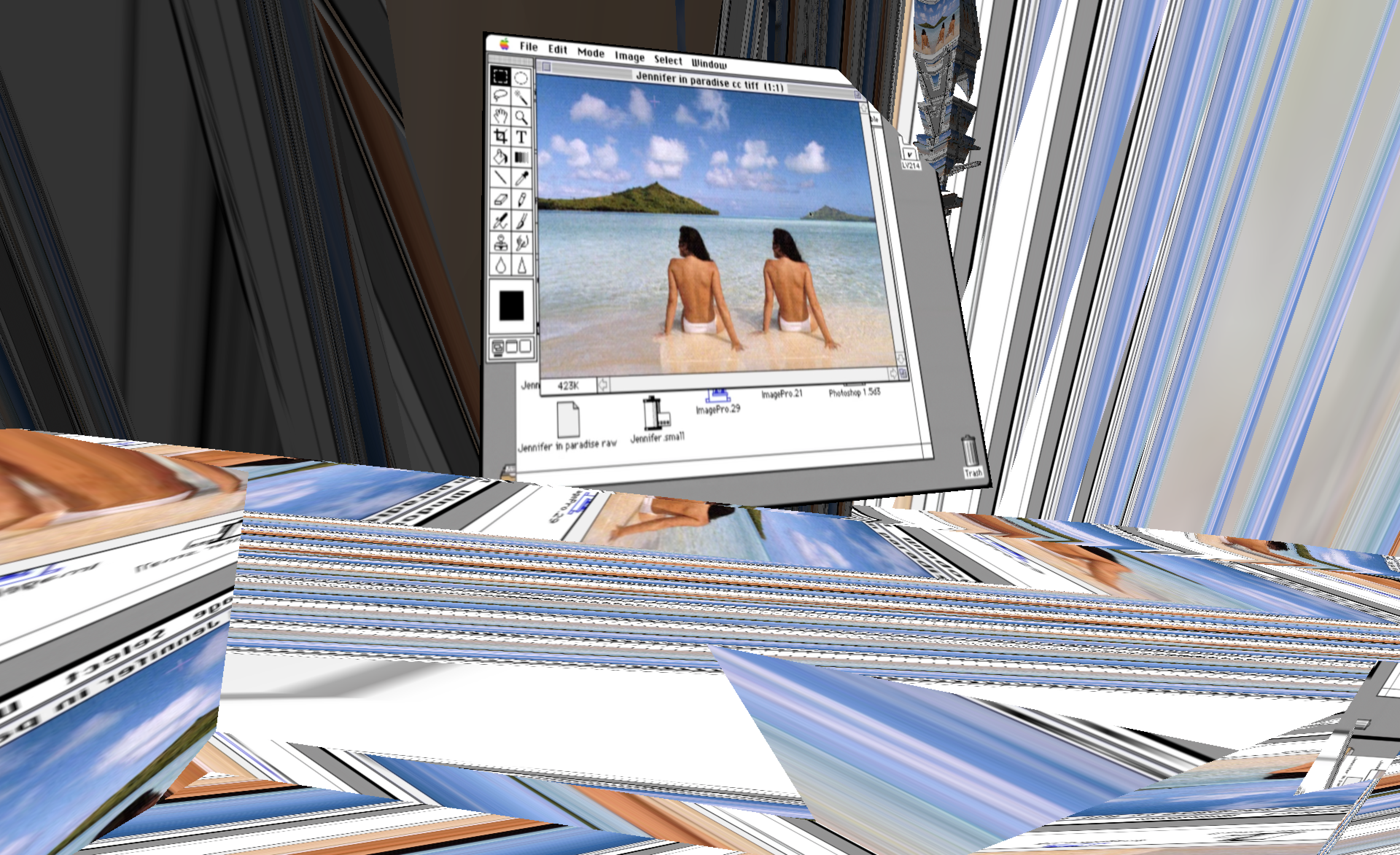

Inspired by these ideas, I built Compress Process. This application makes it possible to navigate video inside 3D environments, where sound is triggered and pans when you navigate. Unfortunately, upon release, Wired magazine reviewed the experiment Compress Process as »a flopped video game«. Ironically, they could not imagine that, in my demonstration, video exists outside the confines of the traditional two-dimensional and flat interface; this other resolution – 3D – meant the video-work was re-categorized as a gaming application.

In a time when image processing technologies function as black boxes, we desperately need research, reflection and re-evaluation of these machines of obfuscation. However, institutions – schools and publications alike – appear to consider the same old settings over and over, without critically analyzing or deconstructing the programs of our newer media. As a result, there are no studies of alternative resolutions. Instead we only teach and learn to copy; simulate the behavior of the interface, replicate the information, paste the data.

This condition reminds me of science fiction writer Philip K. Dick’s dystopian short story Pay for the Printer, in which printers print printers, a process that finally results in printers printing useless mush. If we do not approach our resolutions analytically, a next generation will likely be discombobulated by the loss of quality between subsequent copies or transcopies of data. As a result, they will turn into a partition of institutionalized programs producing monotonous junk.

Together these observations set up a pressing research agenda. To me it is important to ask how new resolutions can be created; and if every decontextualized materiality is immediately re-contextualized inside other, already existing, paradigms or interfaces? In The Interface Effect, NYU professor in new media Alexander Galloway writes that an interface is »not a thing, an interface is always an effect. It is always a process or translation« (Galloway 2013: p. 33). Does this mean that every time we deal with data we completely depend on our conditioning? Is it possible to escape the normative or habitual interpretation of our interfaces?

To establish a better understanding of our technologies, we need to acknowledge that the term »resolution« does not just refer to a numerical quantity or a measure of acutance. A resolution involves the result of a consolidation between interfaces, protocols, and materialities. Resolutions thus also entail a space of compromise between these different actors.

Think for instance about how different objects such as a lens, film, image sensor, and compression algorithm dispute over settings (frame rate, number of pixels, and so forth) following certain affordances (standards) – possible settings a technology has not just by itself but in connection or composition with other elements. Generally, settings within these conjunctions either ossify as requirements or de facto norms, or are notated as de jure – legally binding – standards by organizations such as the International Organization for Standardization (ISO). This process of resolving an image becomes less complex, but ultimately also less transparent and more black-boxed.

It’s not just institutions like ISO that program, encode, and regulate (standardize) the flows of data in and between our technologies, or that arrange the data in our machines following systems that underline efficiency or functionality. In fact, data is often formatted to include all kinds of inefficiencies that the user is not conditioned or even supposed to see, think about, or question. Our data is, for instance, often (re-)encoded and deformed by nepotist, sometimes covertly operating cartels for reasons like insidious data collection or locking users into proprietary software.

So while in the digital realm, the term »resolution« is often simplified to just mean a number signifying the width and height of, for instance, a screen, the critical use I propose also considers the screen’s »depth.« And it’s within this depth that reflections on the technological procedure

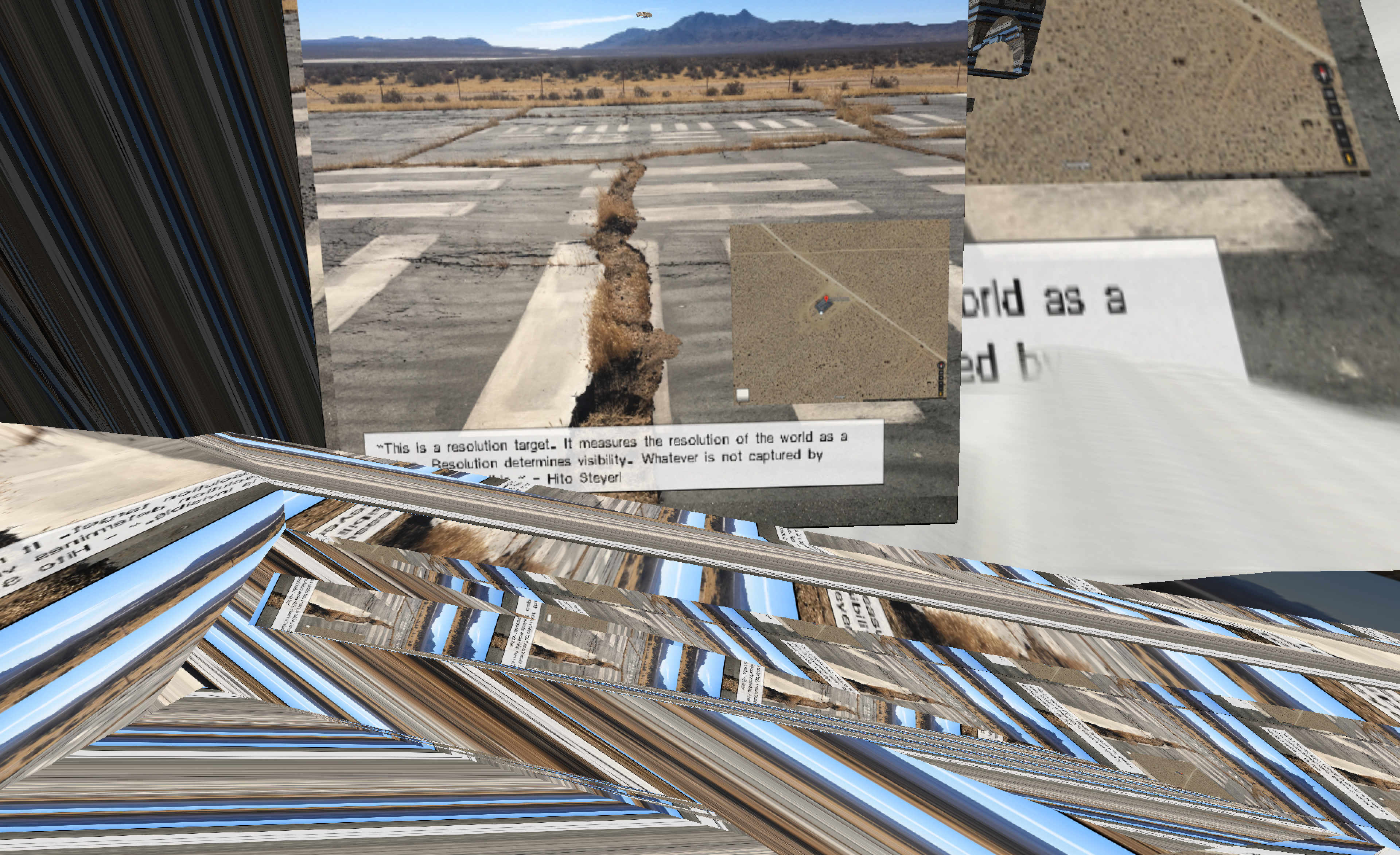

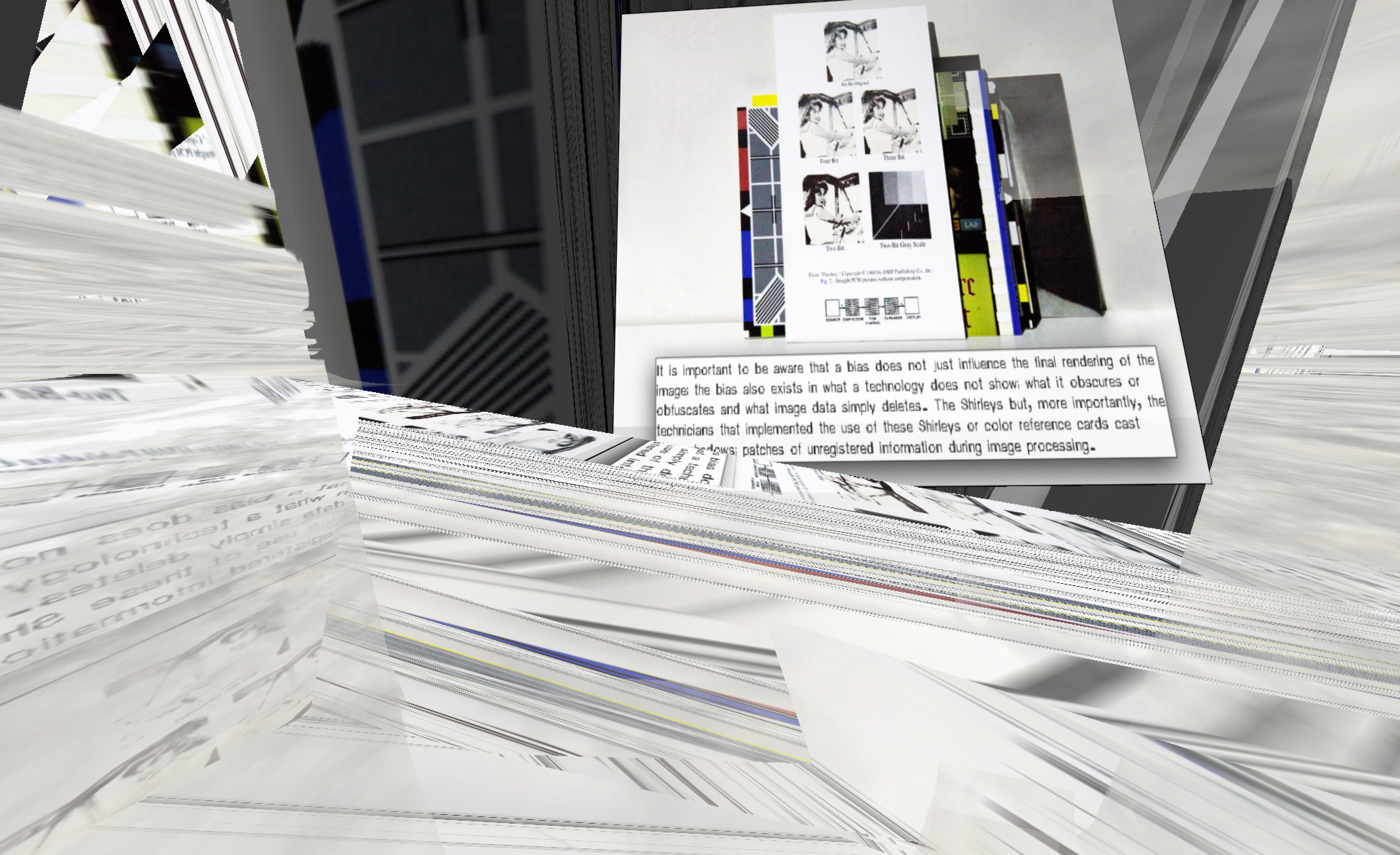

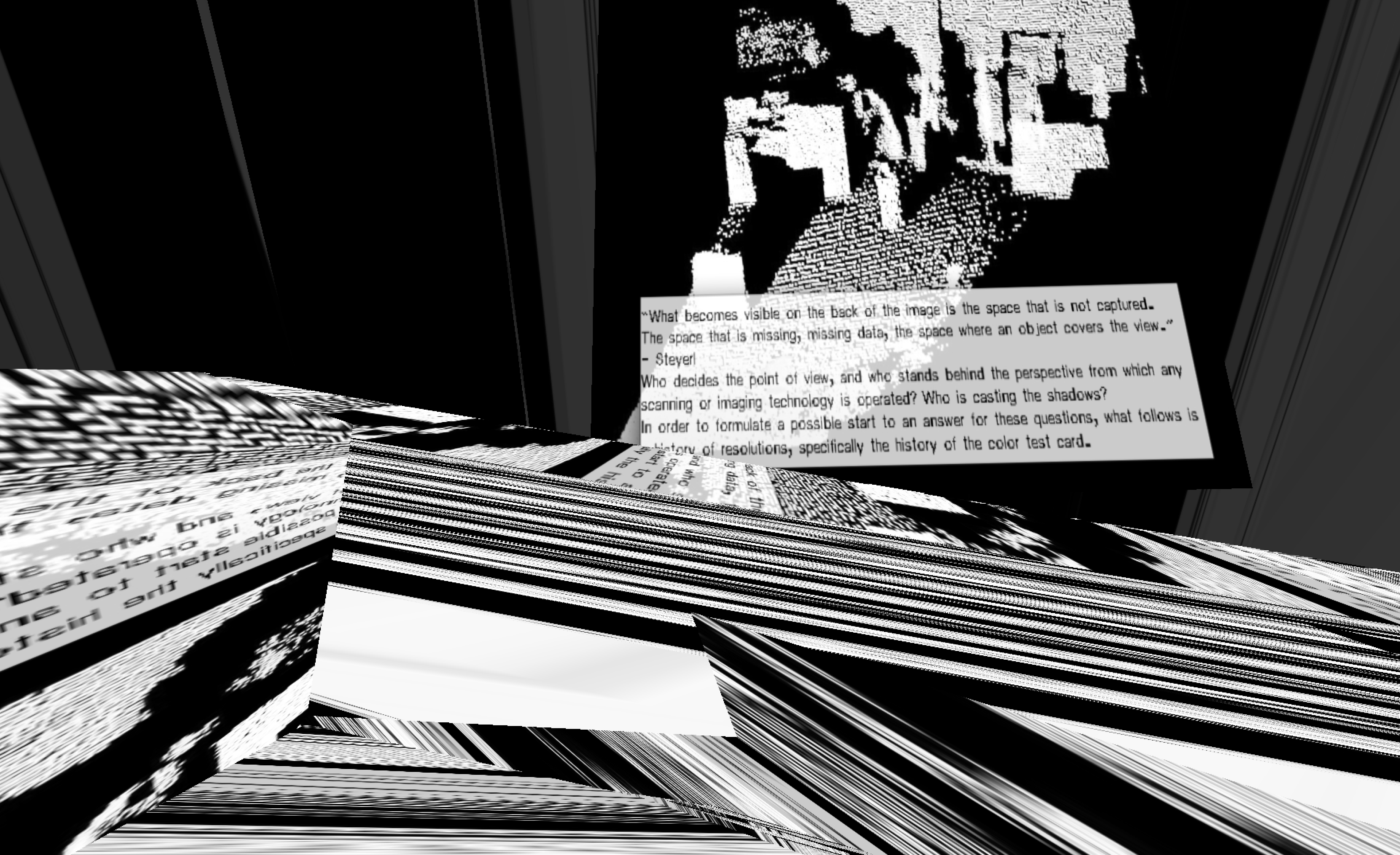

In the 1950s and 1960s, the United States Air Force installed different versions of the 1951 USAF resolution test chart in the Mojave desert to calibrate aerial photography and video. Documentary film maker and writer Hito Steyerl’s video essay How Not to be Seen. A Fucking Didactic Educational .MOV File (2013) was filmed at one of these resolution targets just west of Cuddeback Lake. Steyerl presents viewers with an educational manual which via critical consideration of the resolutions and surveillance embedded in digital and analogue technologies argues that »whatever is not captured by resolution is invisible.«

Even though I am aware that her work by no means claims to be a comprehensive description of the term «resolution», or the ways in which these resolutions perform in the age of mass-surveillance, I believe the essay nonetheless misses a crucial perspective. Resolutions do not simply present things as visible, while rendering Others obscured or invisible. Rather, a resolution should also be understood as the choice between certain technological processes and materials that involve their own specific standard protocols and affordances, which in their turn inform the settings that govern a final capture. However, these settings and their inherent affordances – or the possibilities to choose other settings – have become more and more complex and obscure themselves as they exist inside black-boxed interfaces. Moreover, while resolutions compromise, obfuscate, or obscure certain visual outcomes, the processes of standardization and upgrade culture as a whole also compromise certain technological affordances – creating new ways of seeing or perceiving – altogether. And it is these alternative technologies of seeing, or obscured and deleted settings that also need to be considered as part of resolution studies.

The i.R.D. is dedicated to researching the interests of anti-utopic, obfuscated, lost, and unseen, or simply »too good to be implemented« resolutions. It functions as a stage for non-protocological radical digital materialisms. The i.R.D. is a place for the »otherwise« or »dysfunctional« to be empowered, or at least to be recognized. From inside the i.R.D., technical literacy is considered to be both a strength and a limitation: The i.R.D. shows resolutions beyond our usual field of view, and points to settings and interfaces we have learned to refuse to recognize.

While the i.R.D. calls attention to media resolutions, it does not just aestheticize their formal qualities or denounce them as »evil,« as new media professors Andrew Goffey and Matthew Fuller did in Evil Media (2012). The i.R.D. could easily have become a Wunderkammer for artifacts that already exist within our current resolutions, exposing standards as Readymades in technological boîte-en-valises. Curiosity cabinets are special spaces, but in a way they are also dead; they celebrate objects behind glass, or safely stowed away inside an acrylic cube. I can imagine the man responsible for such a collection of technological artifacts. There he sits, in the corner, smoking a pipe, looking over his conquests.

This type of format would have turned the i.R.D. into a static capture of hopelessness; an accumulation that will not activate or change anything; a private, boutique collection of evil. An institute that intends to host disputes cannot get away with simply displaying objects of contention. Disputes involve discussions and debate. In other words: the objects need to be unmuted, or be given a voice. This dilemma informs some of my key questions: how can objects be displayed in an »active« way? How do you exhibit the »invisible?«

Genealogy (in terms of for instance upgrade culture) and ecology (the environment and the affordances the environment offers for the dynamic inter-relational processes of objects) play a big role in the construction of resolutions. This is why the i.R.D. hosts classic resolutions and their inherent (normally obfuscated) artifacts such as dots, lines, blocks, and wavelets, inside an »Ecology of Compression Complexities,« a study of compression artifacts and their qualities and ways of diversion, dispersion, and (alternative) functioning by employing tactics of »creative problem creation«, a type of tactic coined by Jon Satrom, during GLI.TC/H 2111, which shifts authorship back to the actors involved in the setting of a resolution.
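As a hedged illustration of where an artifact such as the »block« comes from, the sketch below applies a one-dimensional Discrete Cosine Transform to a single row of eight brightness values and then discards all but the lowest frequency. This is a deliberate simplification: JPEG works on two-dimensional 8×8 blocks and quantizes coefficients with tables rather than simply zeroing them, and the function names and pixel values here are invented for the example.

```python
import math


def dct(block):
    """Unnormalized DCT-II of a list of samples."""
    size = len(block)
    return [
        sum(x * math.cos(math.pi / size * (n + 0.5) * k) for n, x in enumerate(block))
        for k in range(size)
    ]


def idct(coeffs):
    """Inverse of dct() above (a scaled DCT-III)."""
    size = len(coeffs)
    return [
        coeffs[0] / size
        + 2 / size * sum(
            coeffs[k] * math.cos(math.pi / size * (n + 0.5) * k)
            for k in range(1, size)
        )
        for n in range(size)
    ]


def keep_low_frequencies(coeffs, keep):
    """Crude stand-in for quantization: zero every coefficient from index `keep` on."""
    return [c if k < keep else 0.0 for k, c in enumerate(coeffs)]


# Hypothetical brightness values for one row of an 8-pixel block.
row = [52, 55, 61, 66, 70, 61, 64, 73]

restored = idct(dct(row))  # nothing discarded: the row comes back intact
flattened = idct(keep_low_frequencies(dct(row), keep=1))  # only the average survives
```

With every coefficient kept, the row returns unchanged; with only the first one kept, all eight pixels collapse to their average value, and the block becomes visible as a block. How many coefficients survive is exactly the kind of setting that a compression standard resolves on the viewer's behalf.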

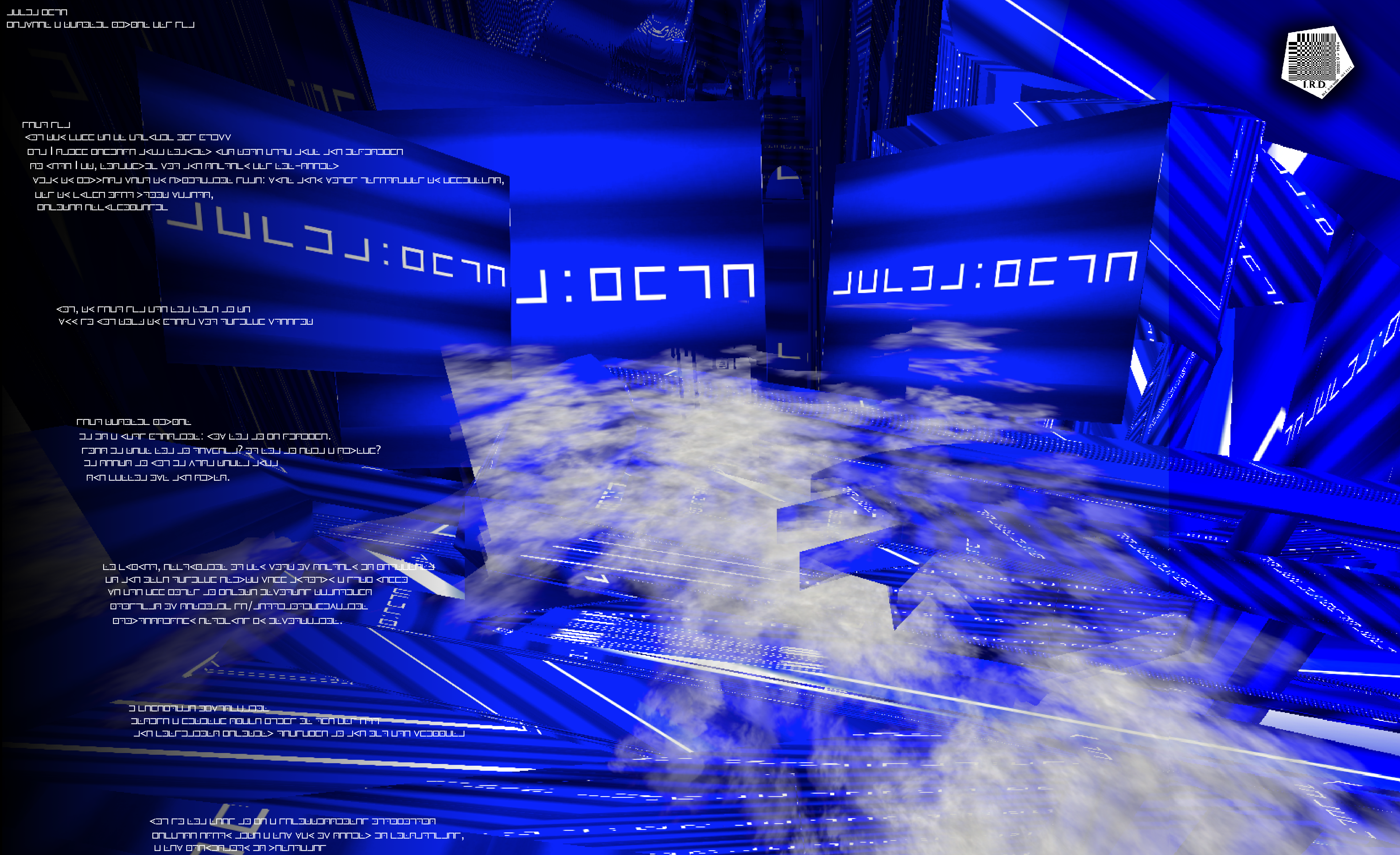

In Tacit:Blue (Rosa Menkman, video, 2015), small interruptions in an otherwise smooth blue video document a conversation between two cryptography technologies: a Masonic Pigpen or Freemason’s cipher (a basic, archaic, and geometric simple substitution cipher) and the cryptographic technology DCT (Rosa Menkman, Discrete Cosine Transform encryption, 2015). The sound and light that make up the blue surface are generated by transcoding the same electric signals using different components; what you see is what you hear.

The technology responsible for the audiovisual piece is the NovaDrone (Pete Edwards/Casper Electronics, 2012), a small AV synthesizer. In essence, the NovaDrone is a noise machine with a flickering military RGB LED on top. The synthesizer is easy to play with: it offers three channels of sound and light (RGB), and the board has twelve potentiometers and ten switches to control the six oscillators routed through a 1/4-inch sound output. With these you can create densely textured drones or, in the case of Tacit:Blue, a rather monotonous, single AV color / frequency distortion.

The video images have been created using the more exciting functions of the NovaDrone. Placing the active camera of an iPhone against the LED on top of the NovaDrone turns the screen of the phone into a wild mess of disjointed colors, revealing the NovaDrone’s hidden second practical usage as a light synthesizer.

In this process the NovaDrone exploits the iPhone’s CMOS (Complementary Metal-Oxide-Semiconductor) image sensor, a technology that is part of most commercial cameras and is responsible for the transcoding of captured light into image data. When the camera function on the phone is activated, the CMOS moves down the sensor, capturing pixel values one row at a time. However, because the flicker frequency of the military RGB LED is changed by the user and is higher than the writing speed of the phone’s CMOS, the iPhone camera is unable to sync up with the LED. What appears on the screen of the iPhone is an interpretation of its input, riddled with aliasing known as rolling shutter artifacts; a resolution dispute between the CMOS and the RGB LED. Technology and its inherent resolutions are never neutral; every time a new way of seeing is created, a new prehistory is being written.
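
The dispute between a row-by-row sensor and a flickering light can be sketched in a few lines of Python. This is a toy model, not a description of the iPhone or the NovaDrone: it assumes a square-wave LED, a sensor that samples each row at a single instant, and the hypothetical names `led_on`, `rolling_shutter_frame`, and `count_bands`.

```python
def led_on(t, flicker_hz):
    """Assumed square-wave LED: lit during the first half of each flicker period."""
    return (t * flicker_hz) % 1.0 < 0.5


def rolling_shutter_frame(rows, row_time, flicker_hz, start=0.0):
    """Sample the LED once per sensor row, from the top row to the bottom row.

    Each row is read slightly later than the row above it, so a light that
    changes during readout is recorded as horizontal bands.
    Returns a list with 1 for a lit row and 0 for a dark row.
    """
    return [1 if led_on(start + r * row_time, flicker_hz) else 0
            for r in range(rows)]


def count_bands(frame):
    """Count the runs of identical consecutive rows (light or dark bands)."""
    return 1 + sum(1 for a, b in zip(frame, frame[1:]) if a != b)
```

With a flicker period of eight row-times (`rolling_shutter_frame(16, 1.0, 0.125)`), sixteen rows record four alternating bands, while a steady light (`flicker_hz=0`) fills the frame with a single uniform band. The faster the flicker relative to the row readout, the more bands a single frame collects.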

Myopia consisted of a giant vinyl wall installation of 12 x 4 meters, plus extruding vectors, presenting a zoomed-in perspective of JPEG2000 wavelet compression artifacts. These artifacts were the aesthetic result of a »glitch« that occurred when I added a line of »another language« into the data of a high-res JPEG2000 image. JPEG2000 is a compression standard used and developed for medical imaging, supporting zoom without block distortion.

The title Myopia hinted at a proposed solution for our collective suffering from technological hyperopia – the condition of farsightedness: being able to see things sharply only from a distance. With Myopia I built a place that disintegrated the architecture of zooming, and endowed the public with the »qualities« of being short-sighted. Myopia offered an abnormal view; a non-flat wall that presented the viewer a look into the compression – a new perspective. This was echoed in the conclusion of the installation, the day before the i.R.D. closed, when visitors were invited to bring an X-Acto knife and to cut their own resolution of Myopia to mount it on any institution of choice (a book, computer or other rigid surface).

Resolutions are the determination of what is run, read, and seen, and what is not. In a way, resolutions form a lens of (p)reprogrammed »truths.« But their actions and qualities have moved beyond a fold of our perspectives, and we have gradually become blind to the politics of these congealed and hardened compromises.

DCT (after Discrete Cosine Transform, an algorithm that forms the core of the JPEG compression) uses the 64 macroblocks that form the visual »alphabet« for any JPEG compressed image.
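
To make this »alphabet« concrete, the following Python sketch generates 64 patterns using the standard orthonormal two-dimensional DCT-II definition on an 8 x 8 block. Reading the 64 macroblocks mentioned above as these 64 basis patterns is an assumption, as are the function names `dct_basis` and `all_basis_patterns`; this is not the code used to make the font.

```python
import math

N = 8  # JPEG applies the DCT to blocks of 8 x 8 pixels


def dct_basis(u, v, n=N):
    """Return the n x n basis pattern for horizontal frequency u and vertical frequency v."""
    def scale(k):
        # Normalisation factors of the orthonormal DCT-II
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    return [[scale(u) * scale(v)
             * math.cos((2 * x + 1) * u * math.pi / (2 * n))
             * math.cos((2 * y + 1) * v * math.pi / (2 * n))
             for x in range(n)]
            for y in range(n)]


def all_basis_patterns(n=N):
    """All n * n patterns, ordered row by row (v is the outer loop, u the inner)."""
    return [dct_basis(u, v, n) for v in range(n) for u in range(n)]
```

The first pattern (`u = 0`, `v = 0`) is a flat block; the others add progressively finer horizontal and vertical stripes. Any 8 x 8 block of pixel values can be written as a weighted sum of these 64 patterns, which is why they can serve as a complete set of »letters«.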

The premise of DCT is that the legibility of an encrypted message does not only depend on the complexity of the encryption algorithm, but equally on the placement of the message. DCT, a font that can be used on any TTF (TrueType Font) supporting device, applies both methods of cryptography and steganography; hidden in secret, the message is transcoded and embedded on the surface of the image where it looks like an artifact.

A second part of this third work was inspired by one of the Symbology patches uncovered by Trevor Paglen. It consists of a logo for the i.R.D., embroidered in a black on black patch, providing a key to decipher anything written in DCT: 010 0000 – 101 1111. These binary values also decipher the third and final work titled institutions: consisting of five statements written in manifesto style, printed in DCT, on acrylic.
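
Read as seven-bit numbers, the two ends of the key are 32 and 95, a range of exactly 64 values. One plausible reading, which is an assumption and not stated above, is that these are ASCII codes (space through underscore) and that each character is assigned one of 64 blocks in order. The Python sketch below follows that reading; the names `glyph_index`, `block_coordinates`, and `encode`, the folding of lowercase to uppercase, and the row-by-row grid order are all hypothetical.

```python
FIRST = 0b0100000  # 32, the ASCII space character
LAST = 0b1011111   # 95, the ASCII underscore


def glyph_index(ch):
    """Map one character to a block number from 0 to 63."""
    upper = ch.upper()  # assumption: lowercase letters share the uppercase glyph
    if len(upper) != 1 or not FIRST <= ord(upper) <= LAST:
        raise ValueError(f"{ch!r} is outside the keyed range")
    return ord(upper) - FIRST


def block_coordinates(index, n=8):
    """Split a block number into (row, column) on an n x n grid (assumed order)."""
    return divmod(index, n)


def encode(message):
    """Translate a message into a list of (row, column) block positions."""
    return [block_coordinates(glyph_index(ch)) for ch in message]
```

Under these assumptions `encode("DCT")` returns `[(4, 4), (4, 3), (6, 4)]`, and any character outside the keyed range, such as `{`, raises a `ValueError`.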

When MOTI, the Museum Of The Image (in collaboration with the Institute of Network Cultures, which had previously contracted me to write my book on Resolution Studies, but then floundered), announced their first Crypto Design Challenge later that year (August 2015), I entered the competition with DCT. Delivered as an encrypted message against institutions and their backwards bureaucracy, DCT finally won the shared first prize in the Crypto Design Challenge.

Another institutional success came in the winter of 2016. Six years after the creation of A Vernacular of File Formats (Rosa Menkman, 2010), I was invited to submit the work as part of a large-scale joint acquisition by Stedelijk Museum Amsterdam and MOTI.

A file format is an encoding system that organizes data according to a particular syntax. These organizations are commonly referred to as compression algorithms. A Vernacular of File Formats consists of one source image, a self-portrait showing my face, and an arrangement of recompressed and disturbed or de-calibrated iterations. By compressing the source image using different compression languages and subsequently implementing the same (or a similar) error into each file, the normally invisible compression language presents itself on the surface of the image. The result is a compilation of de-calibrated and unresolved self-portraits showcasing the aesthetic complexities of all the different file format languages.
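
What »the same error« means in practice is not specified here. As one possible interpretation, the Python sketch below overwrites a few bytes at the same relative position in any file's data. The function name `inject_error` and its parameters are hypothetical; `protect` is a crude stand-in for leaving a file header intact, and real formats differ in where their headers end.

```python
def inject_error(data, position=0.5, payload=b"\x00", protect=0):
    """Return a copy of data with payload written over it at a relative position.

    protect  -- number of leading bytes left untouched (a stand-in for a header)
    position -- 0.0 to 1.0, where in the remaining bytes the payload lands
    """
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must lie between 0.0 and 1.0")
    body_length = len(data) - protect
    if body_length < len(payload):
        raise ValueError("payload does not fit after the protected bytes")
    offset = protect + int((body_length - len(payload)) * position)
    # Overwrite rather than insert, so the file keeps its original length
    return data[:offset] + payload + data[offset + len(payload):]
```

Applied to the bytes of the same picture saved in several formats, for instance `inject_error(open(path, "rb").read(), 0.5, b"\xff\xff", protect=512)` with a hypothetical `path`, the identical intervention surfaces differently in each format, because each one organizes its data according to a different syntax.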

After thorough conversation, both institutions agreed that the most interesting and best format for acquisition was the full digital archive, consisting of more than 16GB of data (661 files). This included the original and »glitched« (broken and unstable) image files, the Monglot software (Rosa Menkman and Johan Larsby, 2011), videos, and original PDFs. A copy of the whole compilation is now part of the archive of the Stedelijk Museum. The PDF of A Vernacular of File Formats remains freely downloadable and, following the spirit of COPY < IT > RIGHT !, soon the research archive will be freely available online, inviting artists, students, and designers to use the files as source footage for their own work and research into compression artifacts.

By making my work available for re-use I intend to share the creative process and knowledge I have obtained throughout its productions. I adopt video artist and activist Phil Morton’s statement: »First, it’s okay to copy! Believe in the process of copying as much as you can« (Phil Morton, Distribution Religion, 1973). Generally, copying can be great practice; it can open up alternative possibilities, be a tactic to learn, and create access. Unfortunately, copying can also be done in ways that are damaging or wrong, as has happened repeatedly with A Vernacular of File Formats. For instance, extracted images were used as application icons for the apps Glitch! for Android and Glitch Camera for iPhone, and many times my work was featured on commercially sold clothing or functioned as cover image on two different record sleeves, without permission, compensation or proper accreditation.

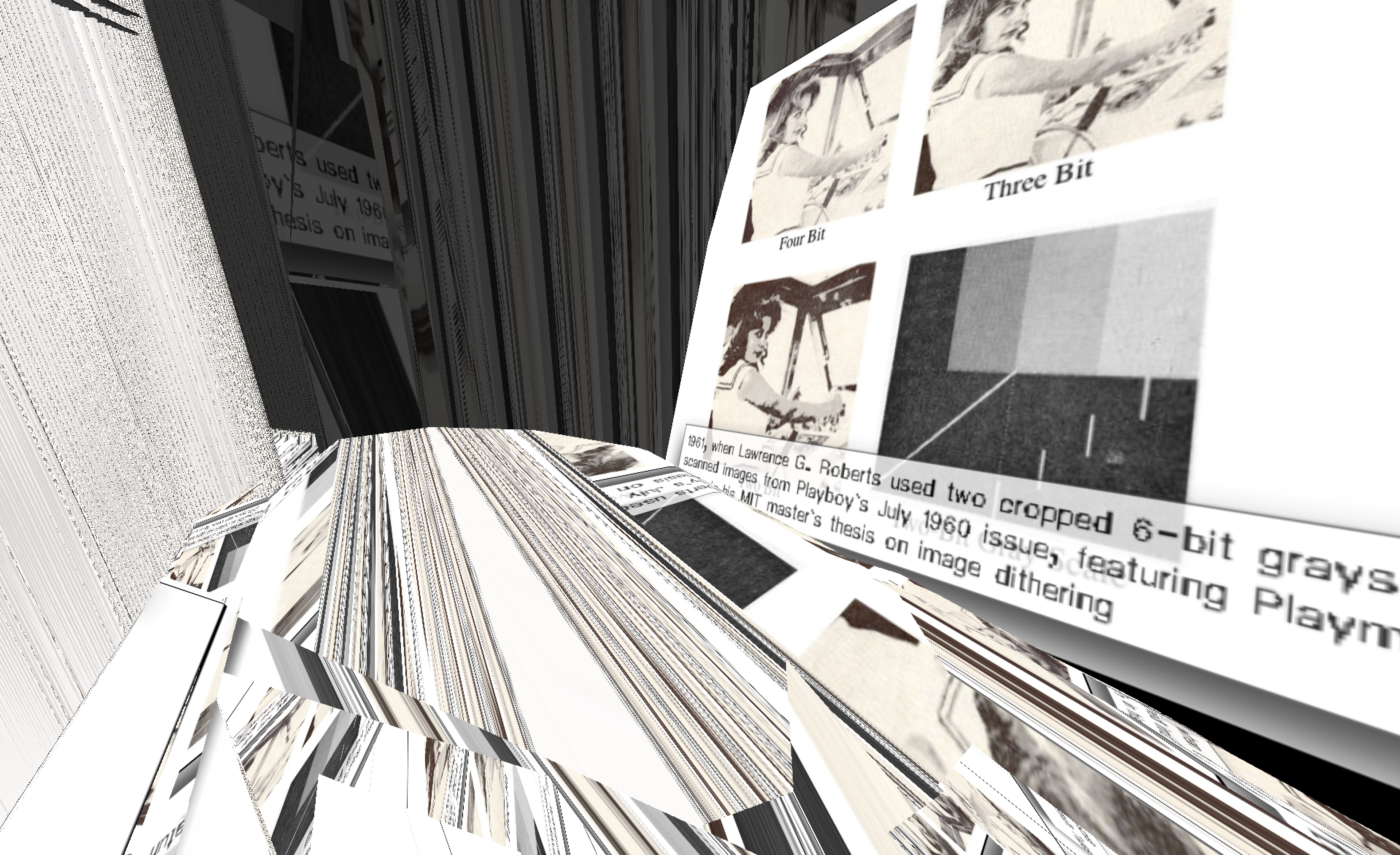

For Elevate Festival 2017 I was invited to talk about my experiences with losing authorship over images showing my face. Only during the preparations for this talk did I realise that it is not that uncommon to lose (a sense of) authorship over an image (even if it is your face). An example of this is the long-standing »tradition« within the professional field of image processing of co-opting (stealing) the image of a Caucasian female face for the production of color test cards, a practice that is responsible for the introduction of a racial bias into the standard settings for image processing. This revelation prompted me to start the research at the basis of the second part of the diptych Beyond Resolution, titled Behind White Shadows (2017).

While a »one size fits all« approach (or, as a technician once wrote, »physics is physics«) has become the standard, in reality the various skin type complexions reflect light differently. This requires a composite interplay between the different settings involved when subjects are captured. Despite the obvious need to factor in these different requirements for hues and complexions, certain technologies only implement the support for one – the Caucasian complexion – and compromise a resolution of Other complexions.

A much discussed example is the image of Lena Söderberg (in short »Lena«), featured as the Miss November 1972 Playboy centerfold and subsequently co-opted as test image during the implementation of DCT in the JPEG compression. The rights of use of the Lena image were never properly cleared or checked with Playboy. But the image, up until today, is the only image used to test and build the JPEG compression on. Scott Acton, editor of IEEE Transactions on Image Processing, writes in a critical piece: »We could be fine-tuning our algorithms, our approaches to this one image. […] They will do great on that one image, but will they do well on anything else? […] In 2016, demonstrating that something works on Lena isn’t really demonstrating that the technology works.«

To uncover and gain a better insight into the processes behind the biased protocols that make up standard settings, we are required to ask some fundamental questions: Who gets to decide the hegemonic conventions that resolve the image? Through what processes is this power legitimized and how is it elevated to a normative status? Moreover, who decides the principal point of view, and whose perspective is used in the operation of scanning or imaging technologies? All in all, who is casting these (Caucasian) »shadows«?

One way to make instances such as the habitual whiteness of color test cards more apparent is by insisting that these standard images, trapped in the histories of our technologies, become part of the public domain. These images need to lose their copyright along with their elusive power. The stories of standardization belong in high-school textbooks, and the possible violence associated with this process should be studied as part of any suitable curriculum. In order to illuminate the white shadows that govern the outcomes of our image processing technologies, it is required to first write these genealogies of standardization.

This type of research can easily become densely theoretical, something which is not bad in itself, but will result in the loss of audience. This has led me to rethink and re-frame the output of my practice and to show it also as a series of »compression ethnographies«: videos, 3D environments, poems, and other experimental forms in which I anthropomorphize compressions and let them speak in their own languages. In doing so, I enable compressions to tell their own stories, about their conception and development, in the language of their own data organization.

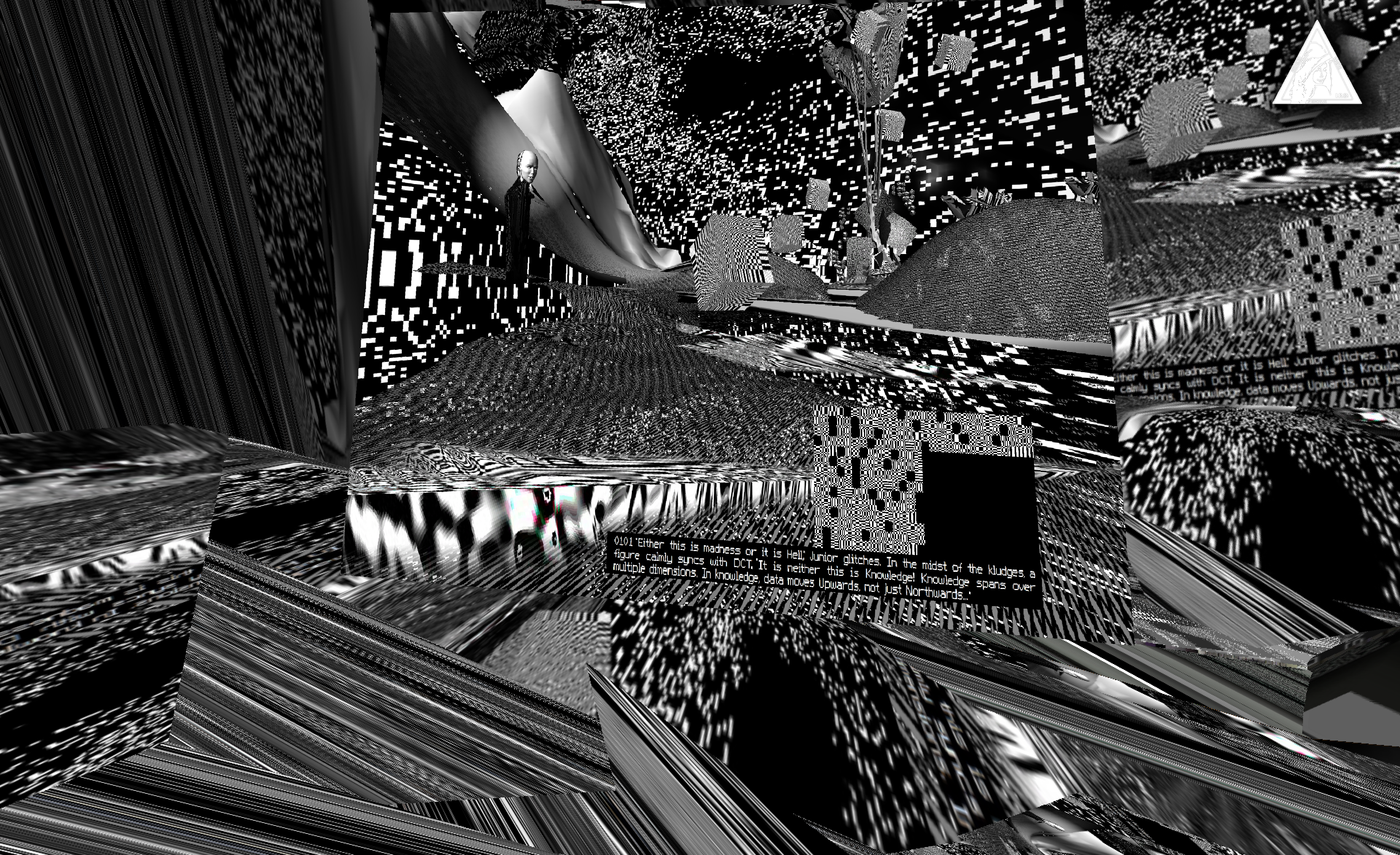

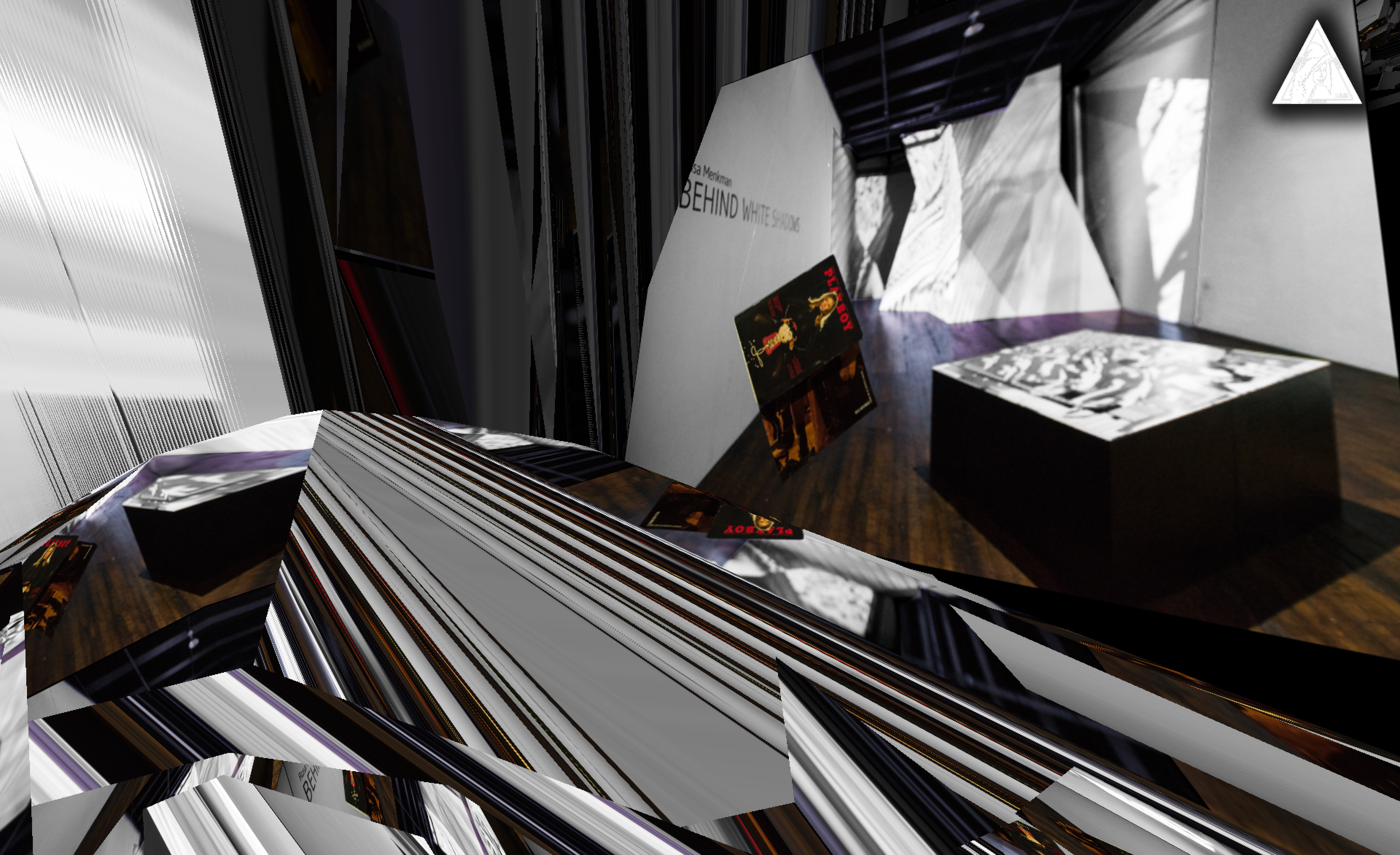

An example is the Behind White Shadows centerpiece, DCT:SYPHONING. The 1000000th (64th) interval. Created and performed in VR, it is a fictional journey told as a modern version of Edwin Abbott Abbott’s 1884 novel Flatland, but in this case told through the historical progression of image compression complexities. In DCT:SYPHONING, two DCT blocks, Senior and Junior, lead us through a universe of abstract, simulated environments made from the materials of compression – evolving from early raster graphics to our contemporary state of CGI realism. At each level, this virtual world interferes with the formal properties of VR to create stunning and disorienting environments, throwing into question our preconceived notions of virtual reality.

As a third and final work, the exhibition Behind White Shadows also showed a four by three-meter Spomenik (monument) for resolutions that will never exist: a non-quadrilateral, extruding and video-mapped sculpture that presents videos shot from within DCT:SYPHONING. Technically, the Spomenik functions as an oddly shaped screen with mapped video, consisting of 3D vectors extruding in space.

Historically, a Spomenik is a piece of abstract, Brutalist, and monumental anti-fascist architecture from former Yugoslavia, commemorating or meaning »many different things to many people.« The Spomenik in Behind White Shadows is dedicated to resolutions that will never exist and »screen objects« (shards) that were never implemented, such as the non-quadrilateral screen. It commemorates the biased (white) genealogies of image and video compression. The installed shard is three meters high, creating an obscured compartment in the back of the Spomenik: a small room hiding a VR installation that runs DCT:SYPHONING, while the projection on the Spomenik features video footage from within the VR. In doing so, the Spomenik reflects literal light on the issues surrounding image processing technologies and addresses some of the hegemonic conventions that obscure our view continuously.