Pique Nique Pour Les Inconnues (2020)

a desktop tele-chorale organised by the Angel of History;

a congregation of women whose faces

were appropriated for use on color-calibration test cards

now sing Paul McCartney’s “We All Stand Together,” their voices substituted by desktop sounds.

Together, they form the i.R.D. Perfect De/Calibration Army.

In my essay Behind White Shadows of Image Processing (see below), I describe how standardisation, especially through color-test cards, has shaped the history of image processing.

Pique Nique pour les Inconnues extends that critique as a desktop tele-chorale in which the Angel of History convenes often nameless figures: test-card models, bots, virtual assistants, stock-photo placeholders, and other “female objects” that engineers long relied on to test and calibrate image quality, or to perk up architectural and virtual spaces and make them more amicable.

Although their faces persist endlessly through copying and reuse, their names and identities have vanished. Gathering on a desktop, they attempt to recover their voices by performing “We All Stand Together” using only computer-desktop sounds.

BEHIND WHITE SHADOWS OF IMAGE PROCESSING (2017 - ... )

Shirley, Lena, Jennifer and the Angel of History.

About the loss of identity, bias, image calibration and ownership

INTRODUCTION

While digital photography seems to reduce the effort of taking an image of the face, such as a selfie or a portrait, to a straightforward click, the (digital) imaging technologies that create and store these photos do not simply take and save an image of the face. In reality, a large set of biased (gendered and even racist) protocols intervene in the process of saving the face to memory. As a result, it is often unclear what gets resolved, and what gets lost, in the process of resolving the image.

To uncover and gain better insight into the processes behind the biased protocols that make up these standard settings, we need to return to some basic questions: who gets to decide the hegemonic conventions that resolve the image? And why and how do these standards come into being? Through an examination of the history of the color test card, I aim to provide some answers to these questions.

In her 2012 lecture “White Shadows: what is missing from images” at the Gdansk Academy of Fine Arts in Poland, Hito Steyerl speaks about how new technologies force us to reformulate important questions about making information visible, capturing it, and documenting it. In the first part of her talk, Steyerl focuses on the use of 3D scanning in forensic crime scene investigations. She explains how the 3D scanner, or LiDAR (Light Detection And Ranging) technology, sends out laser beams that reflect off the surfaces of the objects being scanned. In this process, each point in space is measured, and the measurements are finally compiled into a 3D facsimile of the space: a point cloud. Steyerl states that this kind of capturing does not simply provide a completely new image of reality, or a new possibility for capturing the ‘truth’. In fact, she takes issue with the general belief that this type of technology should be described as the ultimate documentary, forensic tool: a tool that produces 100% reliable, true evidence.
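The measuring step Steyerl describes can be sketched in a few lines. This is a minimal illustration, assuming a time-of-flight scanner (one common LiDAR design; others measure phase shift instead) that logs, for every laser pulse, the round-trip time and the beam's azimuth and elevation. The function names and the tuple layout are hypothetical. Each echo becomes one point, and together the points form the cloud; a pulse that never returns leaves nothing behind.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the surface: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Turn one echo (time + beam direction) into an (x, y, z) point in scanner coordinates."""
    r = range_from_time_of_flight(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.cos(az)
    y = r * math.cos(el) * math.sin(az)
    z = r * math.sin(el)
    return (x, y, z)


def compile_point_cloud(returns):
    """
    Hypothetical input layout: each entry is (round_trip_s, azimuth_deg, elevation_deg).
    A pulse with no echo is recorded as round_trip_s = None and produces no point.
    """
    return [to_point(t, az, el) for (t, az, el) in returns if t is not None]


# A surface straight ahead answers after 20 ns; a beam pointed sideways gets no echo.
cloud = compile_point_cloud([(20e-9, 0.0, 0.0), (None, 90.0, 0.0)])
```

The second beam simply disappears from the dataset: nothing in the point cloud records that it was ever sent.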

Just like any other technology, Steyerl argues, 3D scanning has its affordances, and with these affordances come blind spots: for instance, only a few scanning rigs are advanced enough to capture a moving object. Generally, a moving object becomes a blur or is not picked up at all. A “2.5D” scanning rig (a rig with just one 3D scanner that scans a space) can only provide the surface data of one side of the scanned object or space. As a result, the final scan includes blind spots: the backs of objects, or the shadows cast by objects standing in front of other objects, which, depending on the display technology, sometimes show up as empty, white shells.
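The blind spot of a single-viewpoint rig can be illustrated with a toy model. The sketch below is a deliberate simplification, assuming a flat grid scene and a scanner that sends one parallel ray along each row from the left; the grid encoding and the function name are hypothetical. A ray stops at the first surface it meets, so every surface behind it (the back of an object, or an object standing in another's shadow) never enters the dataset.

```python
def scan_rows(grid):
    """
    Toy single-viewpoint ('2.5D') scan, a simplification for illustration only.
    The scanner sits to the left of the grid and sends one ray along each row.
    Hypothetical encoding: '#' is a surface, '.' is empty space.
    Returns (captured, shadowed) as sets of (row, col) positions.
    """
    captured, shadowed = set(), set()
    for r, row in enumerate(grid):
        hit = False  # has this ray already been stopped by a surface?
        for c, cell in enumerate(row):
            if cell != "#":
                continue
            if not hit:
                captured.add((r, c))  # first surface on the ray: recorded
                hit = True
            else:
                shadowed.add((r, c))  # hidden behind it: never recorded
    return captured, shadowed


scene = [
    "..#...#..",  # a second object stands in the shadow of the first
    "..#......",
    "......##.",  # a two-cell-deep object: only its front face is seen
]
captured, shadowed = scan_rows(scene)
```

Everything in `shadowed` is present in the scene but absent from the scan, which is exactly the hollow, white shell the paragraph describes.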

“What becomes visible on the back of the image is the space that is not captured. The space that is missing, missing data, the space where an object covers the view. A shadow. […] Documentary truth and evidence also [ed. rosa: includes] the missing of information. The missing people themselves.”

To really scan an environment properly, the scanning would have to be done from every angle. But in most 3D scanning, certain elements of the scanned environment will always exist in the shadow of the next object and result in white patches, blank spaces or hollowed-out shells, remaining in the dataset only as a log of the scanner’s unseen, unregistered space. An important question, then, not just for 3D scanning but for any technology, is: who decides the point of view, and who stands behind the perspective from which LiDAR, or any other scanning or imaging technology, is operated? Who is casting these white shadows? To begin formulating an answer to these questions, what follows is a history of resolutions, specifically the history of the color test card.

A collage of different resolution test cards on top of each other.

Test images

A fundamental part of the history of image processing, and of the standardization of settings within both analog and digital compression and codec technologies, is the test card, chart, or image. This standard test image is an image (file) used across different institutions to evaluate, for instance, image processing, compression algorithms, and rendering, or to analyze the quality of a display. One type, the test pattern or resolution target, is typically used to test the rendering of a technology or to measure the resolution of an imaging system. Such a pattern often consists of reference line patterns with clear, well-defined thicknesses and spacings. By identifying the point at which these lines can no longer be told apart, one determines the resolving power of a given system, and by using identical standard test images, different labs are able to compare results visually, qualitatively, and quantitatively.
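How a resolution target yields a number can be sketched as follows, assuming the common convention of expressing resolving power in line pairs per millimetre (one dark line plus one equally wide light space makes a line pair). The observation format is hypothetical: for each group of lines on the target, its line width and whether an observer could still tell the lines apart. The finest group that is still resolved marks the limit of the system.

```python
def line_pairs_per_mm(line_width_mm: float) -> float:
    """One line pair = one dark line + one light space of the same width."""
    return 1.0 / (2.0 * line_width_mm)


def resolving_power(observations):
    """
    Hypothetical input: iterable of (line_width_mm, distinguishable) per line group.
    Returns the spatial frequency (lp/mm) of the finest group whose lines can still
    be told apart, or None if no group is resolved at all.
    """
    resolved = [width for width, ok in observations if ok]
    if not resolved:
        return None
    return line_pairs_per_mm(min(resolved))


# Made-up readings from a single target: the 0.1 mm lines already blur together.
observations = [(0.25, True), (0.125, True), (0.1, False), (0.05, False)]
limit = resolving_power(observations)
```

Because the same target and the same conversion are used everywhere, two labs reporting "4 lp/mm" are describing comparable systems.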

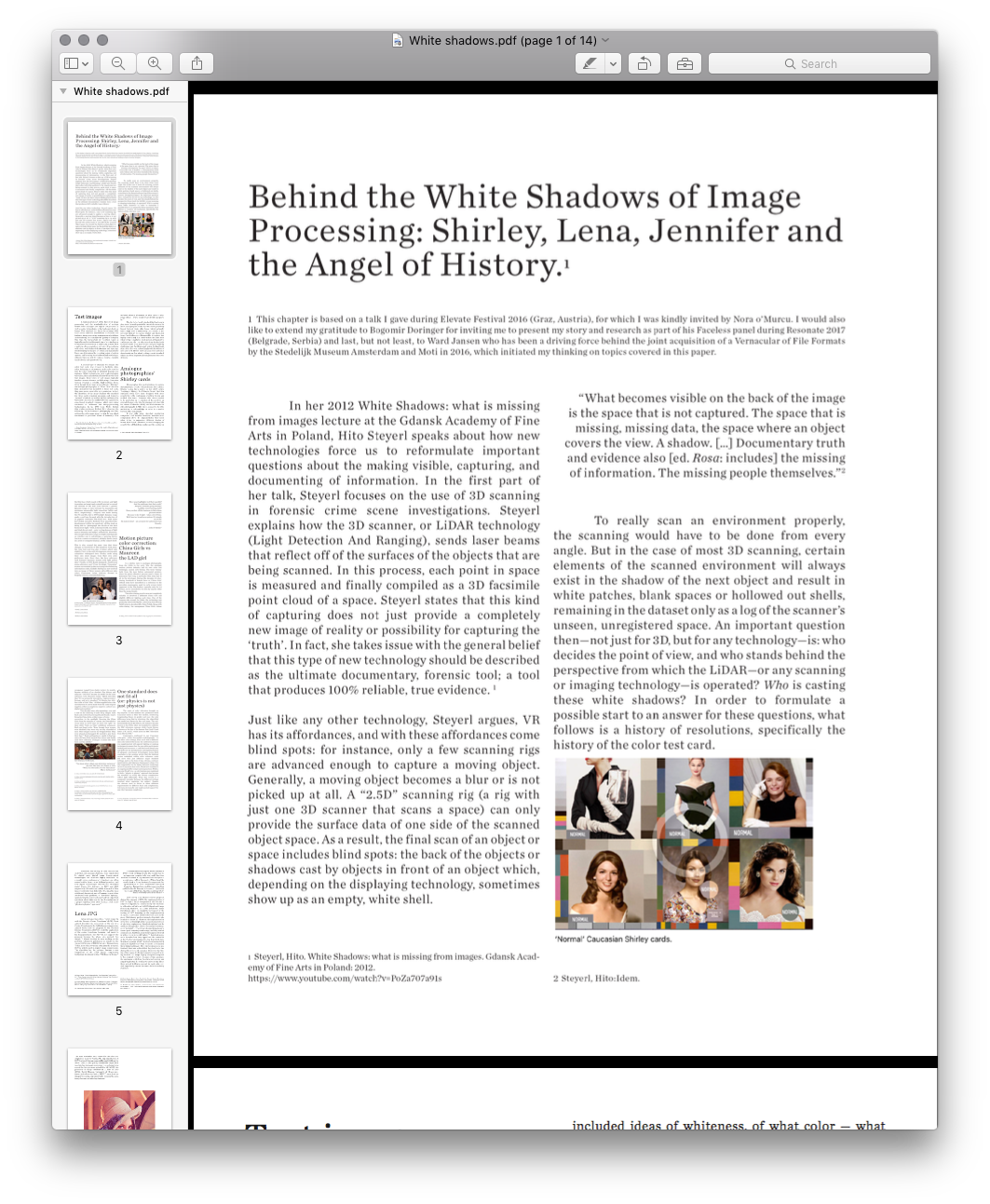

A second type of standard test image, the color test card, was created to facilitate skin-color balancing or adjustment, and can also be used, for instance, to test color rendering on different displays. While technologies such as photography, television, film and software all have their own color test images, these typically involve a norm reference card showing a Caucasian woman wearing a colorful, high-contrast dress. Even though many different “Shirleys” (in analog photography) or “China Girls” (in color film chemistry) modeled for these test cards, the cards were never meant to represent variation. In fact, the identities of the many Shirleys who modeled for these norms remained unknown, and their images formed a “normal” standard, as is often written on the cards themselves. As such, the cards cultivated a gendered, race-biased standard reference, which even today continues to influence our image-processing technologies. In his 1997 book White, British film studies professor Richard Dyer observes: “In the history of photography and film, getting the right image meant getting the one which conformed to prevalent ideas of humanity. This included ideas of whiteness, of what color — what range of hue — white people wanted white people to be.”

The de facto ‘ideal’ standard that most analog photo labs had applied since the early twentieth century was thus positively biased towards white skin tones, which naturally have a high level of reflectivity. As a result, it was not only difficult to capture darker and black skin tones; it also proved impossible to capture two highly contrasting skin tones within the same shot. When photographing a black person sitting next to a white person, the reproduction of the African-American face would often lose detail, pose lighting challenges, and finally present ashen-looking skin that contrasted strikingly with the whites of the eyes and teeth. Hence, the Caucasian test card is not about variation, but about setting a racist standard, which has been dogmatically implemented for over 40 years.

Analog photography’s Shirley cards

Photographic film stock's failure to capture dark skin tones is not a technical issue, but a choice. Scholar Lorna Roth writes in her 2009 article “Looking at Shirley, the Ultimate Norm” that film emulsion could have been designed with more sensitivity to the continuum of yellow, brown and reddish skin tones. However, this choice needed to be motivated by a recognition of the need for an extended range, and after the development of the color films Kodacolor for cinema (1928) and Kodachrome for still photography (1935), there seemed to be little motivation to acknowledge or cater to a market beyond white consumers.

It was only when chocolate manufacturers and wooden furniture makers complained about the impossibility of reproducing different shades of brown that Kodak’s chemists started changing the sensitivities of their film emulsions (the coating on the film base that reacts with chemicals and light to produce an image), gradually extending the film stock towards a greater dynamic range: the ratio between the maximum and minimum measurable light intensities (white and black, respectively). Progress was made during the 1970s and 1980s, but in 1997 Kodak’s dynamic range made a real leap forward with the introduction of its popular consumer film Gold Max. Roth notes how Kodak executive Richard Wien described this development in the sensitivity of film stock as being able to “photograph the details of the dark horse in low light.” Still, in the real world, true white and black do not exist, only varying degrees of light-source intensity and subject reflectivity. Moreover, the concept of dynamic range is complex and depends on whether one is measuring a capturing device (such as a camera or scanner), a display device (such as a print or computer display), or the subject itself.
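Because dynamic range is a ratio, photographers usually express it on a logarithmic scale. The sketch below assumes linear intensity readings taken from one device or scene (the caveat above stands: what counts as "maximum" and "minimum" depends on what is being measured) and converts the ratio into stops, where each stop doubles the light, and into optical density units, the base-10 logarithmic scale used for film. The function names are illustrative, not taken from any particular library.

```python
import math


def dynamic_range(max_intensity: float, min_intensity: float) -> float:
    # Ratio between the brightest and darkest measurable intensities.
    # Assumption: both values are linear readings from the same device or scene.
    if min_intensity <= 0 or max_intensity < min_intensity:
        raise ValueError("expected 0 < min_intensity <= max_intensity")
    return max_intensity / min_intensity


def in_stops(ratio: float) -> float:
    # One photographic stop is a doubling of light, so stops = log2(ratio).
    return math.log2(ratio)


def in_density_units(ratio: float) -> float:
    # Optical density is a base-10 logarithm, so a density range = log10(ratio).
    return math.log10(ratio)


ratio = dynamic_range(1024.0, 1.0)
stops = in_stops(ratio)
density = in_density_units(ratio)
```

A 1024:1 range thus spans 10 stops, or roughly 3.0 density units; extending an emulsion's range by even one stop means faithfully separating twice as many levels of light.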

This is why, around the same time that these changes in the sensitivity of film emulsions took place, the color test card was also revisited, albeit only slightly. First, in the mid-1990s, Japanese photography companies redesigned their Shirley cards using stock images from their own color preference tests; from then on, their local reference cards featured Japanese women with light yellow skin. Then, in 1995, Kodak designed a multiracial norm reference card. From a single “Caucasian” woman surrounded by the necessary color-balancing information codes, Kodak’s Shirley evolved into an image of three women with different skin colors (Caucasian, Asian, African American), dressed in brightly colored, contrasting clothing.

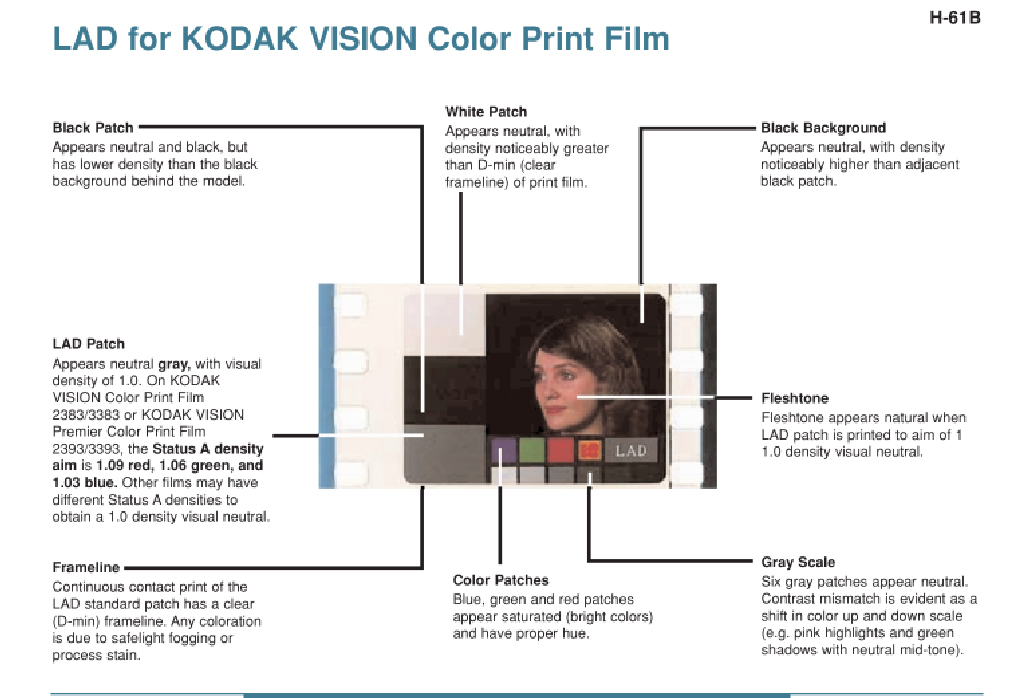

John P. Pytlak posing with his invention: LAD girl

Laboratory Aim Density (LAD) system for Kodak.

LAD color test strip

Have your highlights lost their sparkle?

And the midtones lost their scale?

Are your shadows going smokey?

And the colors turning stale?

Have you lost a little business to labs whose pictures shine?

Because to do it right – takes a lot of time.

Well, here’s a brand new system. It’s simple as can be!

Its name is LAD – an acronym for Laboratory Aim Density.

– John P. Pytlak

Motion picture color correction: China Girls vs. Maureen the LAD Girl

In a similar vein to analog photography, from the 1920s to the early ’90s the analog motion picture industry had its own color test equivalent, tied to the practice of color-timing. The term ‘timing’ hails from the days before automated printers, when the photochemical process used a timer to determine how long a particular film strip had to sit in the developer. During the decades of color-timing, hundreds of female faces, or ‘China Girls’ (a name some have traced to the porcelain mannequins used in early screen tests), appeared in film leaders, typically for only 1 to 4 frames, never intended to be seen by anyone other than the projectionist.

The color-timing practice was not completely reliable: it involved a different China Girl and slightly different lighting each time. This is one of the reasons why, around the 1980s, it was gradually superseded by the Laboratory Aim Density (LAD) system, developed by John Pytlak. Along with color-timing, the anonymous China Girls, whose occupations ranged from studio workers to models, became artifacts of an obsolete film history, and only one “LAD Girl” became the model for the color reference card: Maureen Darby. As Pytlak explains, “It was primarily intended as ‘representative’ footage, and not a standard.” The footage was shot on two 400-foot rolls of 5247 film, and “all film supplied since the introduction of LAD is made from the same original negative, either as a duplicate negative, and now as a digital intermediate.”

Two decades later, after spending a year and a half restoring lost color strip images, Julie Buck and archivist Karin Segal finally found a way to bring the China Girls, or women of color correction, into the spotlight. Rescuing the China Girls from the margins of cinema, they intended to recast them as movie stars in their own right. In their 2005 “Girls on Film” exhibition statement, Buck and Segal write: “Even though these women were idealised, they were only seen by a handful of men. Their images exist on the fringes of film. They were abused and damaged. We wanted to give them their due.” Unable to find a single case of a China Girl who went on to become a film actress, Buck and Segal finally used their collection of images to create the short film Girls on Film (2008), in which they recast the women as its stars.

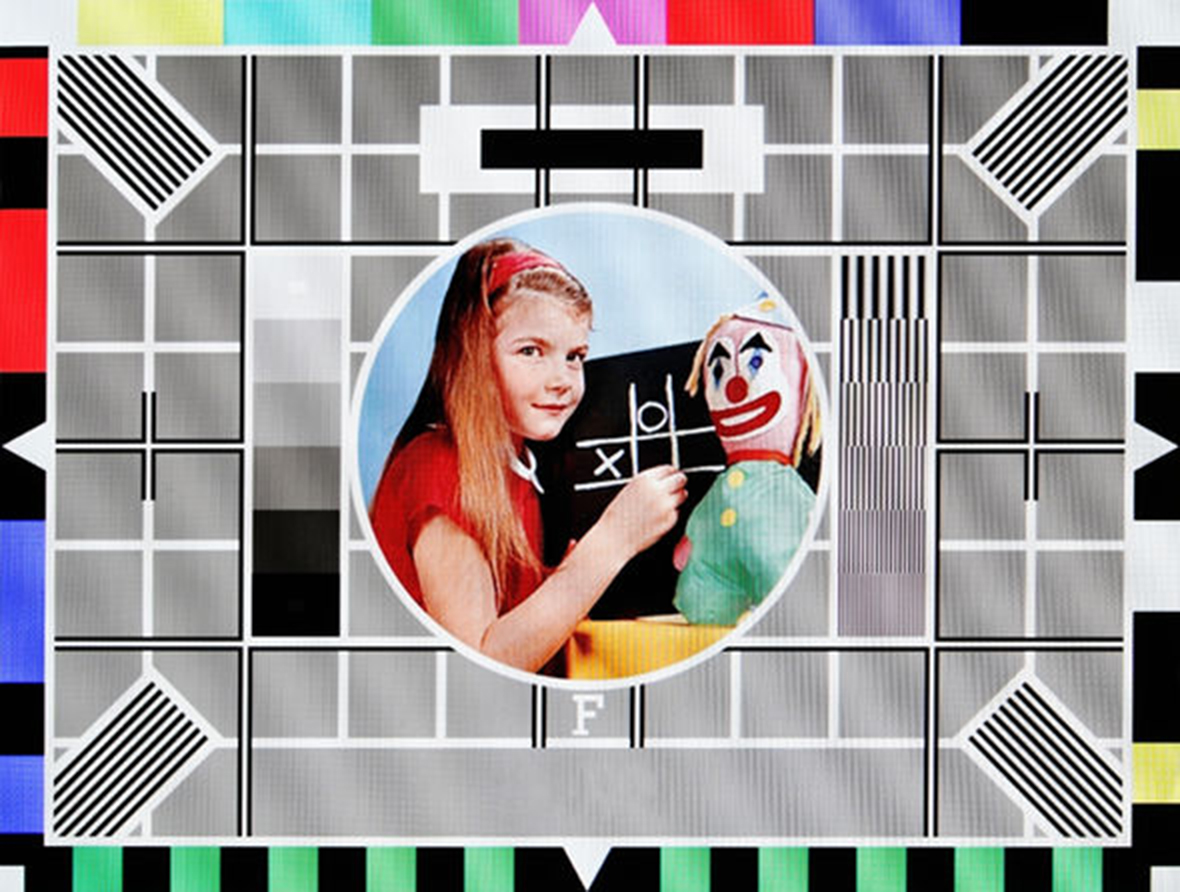

Carole Hersee on Test Card F, which aired on BBC Television from 1967 to 1998.

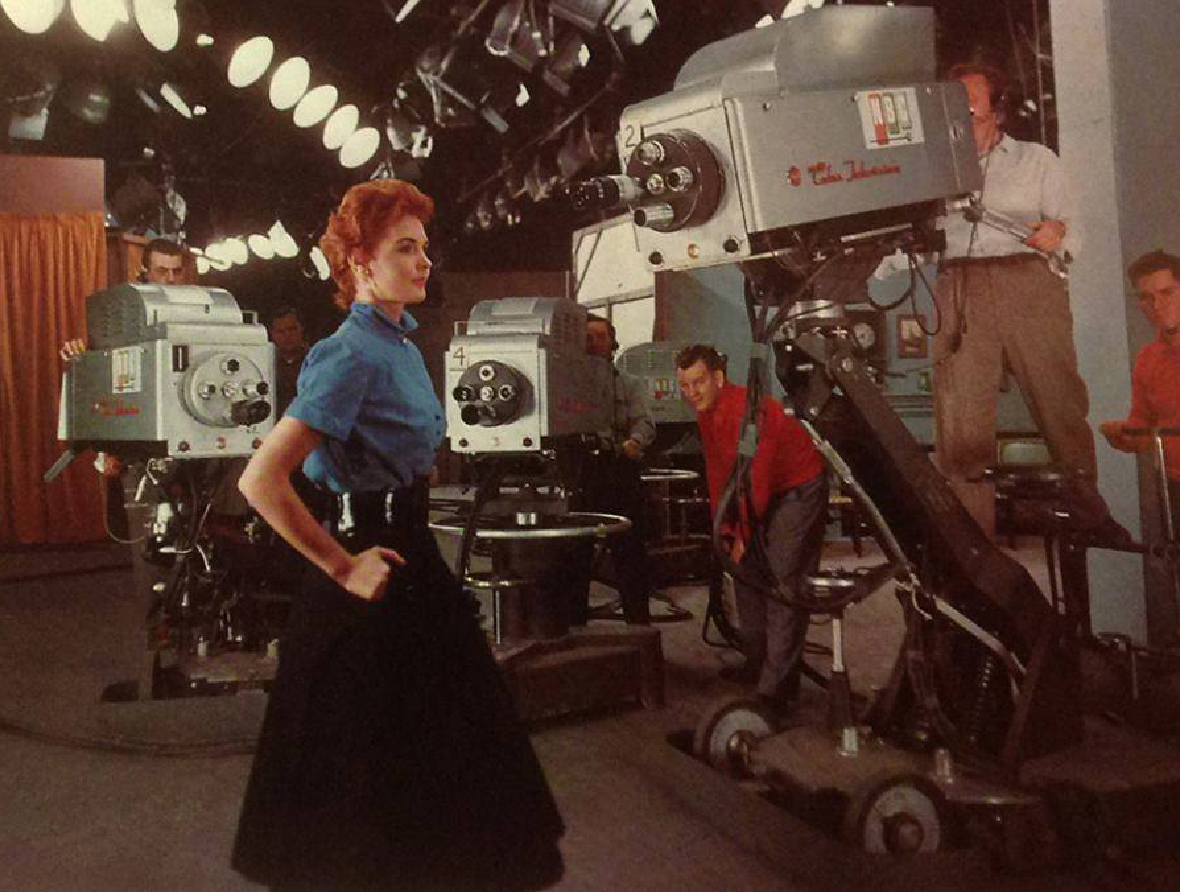

Marie McNamara at the NBC studios.

“You know what a black-and-white test pattern is,” she told The New York Times in 1953.

“Well, I’m it for color. I’m the final check.”

- Marie McNamara

One standard does not fit all (or: physics is not just physics)

The onset of color television brought no big surprise; in this medium too, producers hired Caucasian women as their test models, reinforcing longstanding biases in gender and race. The only difference was that in television, the objectified test model was known by her real name. The red-haired model Marie McNamara, for instance, became known in the 1950s when she modeled to calibrate the NBC television cameras, while Carole Hersee is known as the face of the famous Test Card F (and later J, W, and X), which aired on BBC Television from 1967 to 1998.

Cameramen continued to use Caucasian color girls (either live models or photographs) to test their color settings. If an actor with a different skin color entered the scene, the calibration process was supplemented with special lighting or makeup techniques to ensure that the non-white participants looked good on screen: a task that was not always easy, and one that deferred the development and implementation of adequate, non-biased technologies. Lorna Roth concludes in her seminal article that the habitual racism embedded within color reference cards did more than just influence major standard settings, such as hue, chroma, contrast, quantization, and lightness (luminance) values. To her, it is also responsible for the highly deficient renderings of non-Caucasian skin tones, which have resulted in an ongoing need for compensatory practices. While a ‘one size fits all’ approach (or, as a technician once put it to Roth, “physics is physics”) has become the standard, in reality different complexions reflect light differently. What this reveals is a complex interplay between the different settings involved in capturing the subject. Despite the obvious need to factor in different requirements for different hues and complexions, television technically implemented support for only one: the Caucasian complexion.

Moreover, the history of color bias did not end when old analog standards were superseded by digital ones; digital image (compression) technologies, too, inherited legacy standards. As a result, even contemporary standards are often rooted in these racist, habitual practices, and new digital technologies still feature embedded racial biases. For instance, in 2009 and 2010 respectively, HP webcams and Microsoft’s Xbox Kinect controller had difficulties tracking the faces of African-American users. Consumer Reports later attributed both problems to “low-level lighting”, again moving the conversation away from important questions about skin tone to the determination of a proper lighting level, still echoing a dull, naive “physics is physics” approach.

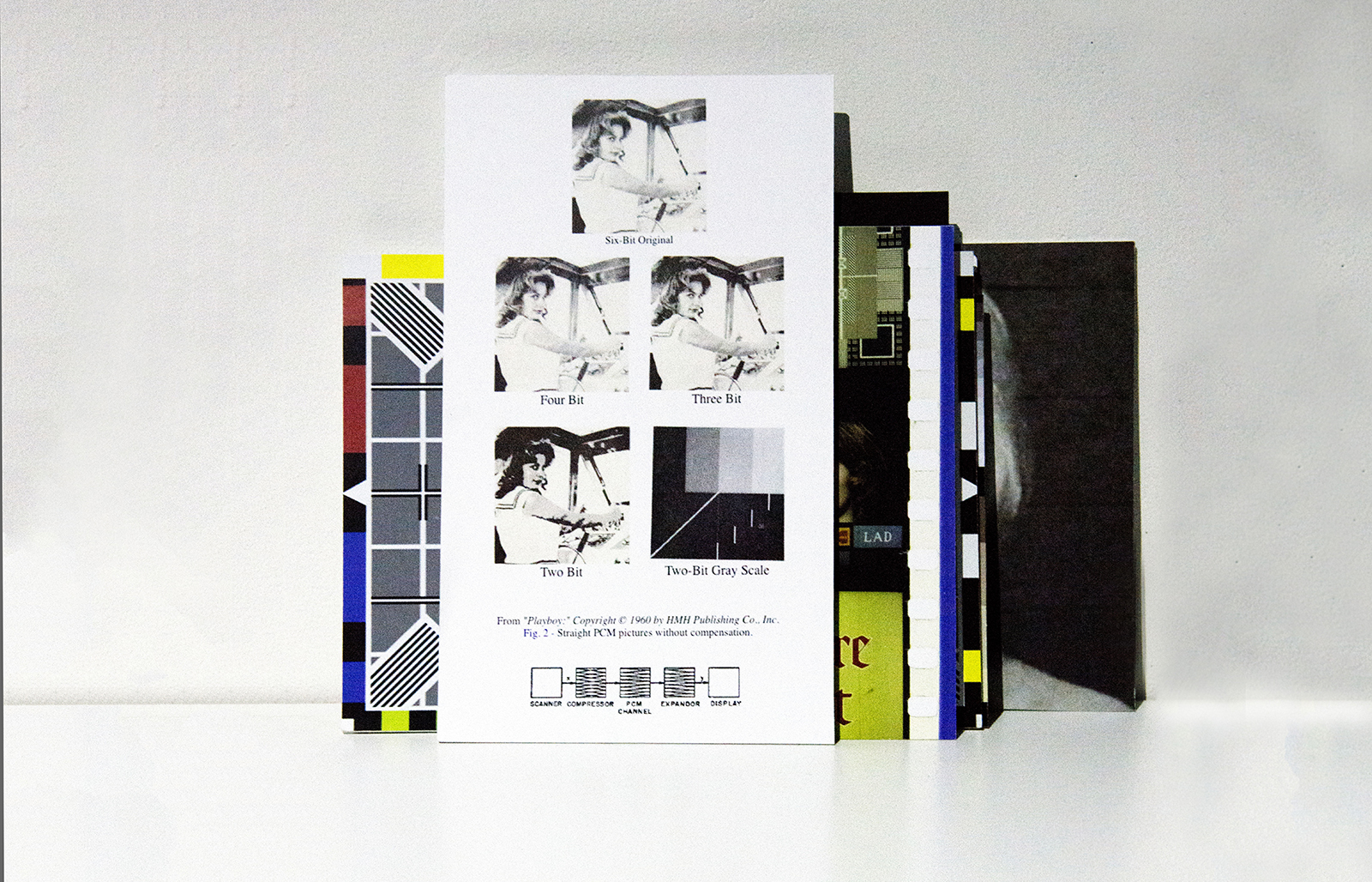

My collection of Caucasian test cards in the Behind White Shadows exhibition.

Lena JPEG

In his retrospective article “How I Came Up with the Discrete Cosine Transform,” Nasir Ahmed describes how he conceived of using a cosine transform in the field of image compression. Ahmed writes that he proposed to the National Science Foundation (NSF) a study of the application of the cosine transform; much to his disappointment, however, the NSF did not support the proposal, because the whole idea seemed “too simple.” Ahmed kept working on the problem, ultimately publishing his results in the January 1974 issue of IEEE Transactions on Computers. Today, more than 40 years after Ahmed’s proposal, the discrete cosine transform (DCT) is widely used in digital image compression. The algorithm has, for instance, become a core component of the JPEG image compression standard, developed by the Joint Photographic Experts Group.

“I remember dedicating the whole summer of 1973 to work on this problem. The results that we got appeared too good to be true, and I therefore decided to consult Harry Andrews later that year at a conference in New Orleans. […] When I sent the results back to Harry Andrews, he suggested that I publish them. As such, I sent them to the IEEE Computer Transactions, and the paper was then published in the January 1974 issue. […] Little did we realize at that time that the resulting “DCT” would be widely used in the future!”
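What the transform does to an image can be shown with a short sketch. The code below computes an orthonormal two-dimensional DCT-II, the variant JPEG applies to 8 x 8 pixel blocks. It is a direct, deliberately slow transcription of the formula, not Ahmed's own formulation and not a production implementation, and the function name is hypothetical. A perfectly flat block ends up with all of its energy in the single top-left (DC) coefficient, the kind of energy compaction that makes the transform useful for compression.

```python
import math


def dct2(block):
    """
    Orthonormal 2-D DCT-II of a square N x N block (JPEG uses N = 8):
        F[u][v] = a(u) * a(v) * sum_x sum_y f[x][y]
                  * cos((2x + 1) u pi / 2N) * cos((2y + 1) v pi / 2N)
    with a(0) = sqrt(1/N) and a(k) = sqrt(2/N) for k > 0.
    A naive O(N^4) loop, written for readability rather than speed.
    """
    n = len(block)

    def a(k):
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = a(u) * a(v) * s
    return out


# A flat 8 x 8 block: every pixel has the same value.
flat = [[10.0] * 8 for _ in range(8)]
coeffs = dct2(flat)
```

In JPEG, the coefficients are then quantized, with high-frequency coefficients typically stored more coarsely than low-frequency ones, which is where most of the data reduction happens.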

Shortly after Ahmed’s initial proposal, in the summer of 1973, the implementation of the DCT in digital image compression also became the subject of experiments at the University of Southern California’s (USC) Signal and Image Processing Institute. In a 2001 newsletter, Jamie Hutchinson offers an insightful retrospective of the testing of the DCT, focusing on the use of, again, a Caucasian, female color test card. In the piece, Hutchinson quotes Alexander Sawchuk, who reminisces about his work on the test image during his time as an assistant professor of electrical engineering. Sawchuk explains how he and his colleagues had grown tired of their normal test images, the “dull stuff”: “They wanted something glossy to ensure good output dynamic range, and they wanted a human face. Just then, somebody happened to walk in with a recent issue of Playboy.” Sawchuk goes on to describe how they ripped out the centerfold and scanned its top third with their Muirhead scanner, which they had customized with analog-to-digital converters to create a 3-channel, 512 x 512 px test image. After the tricky process was finished, Sawchuk realized that they had lost a line during scanning. Moreover, the timing of the analog-to-digital converters was off, making the final test image slightly elongated compared to the original. However, because of time pressure, the engineers settled for the distorted version and simply replicated the top line to arrive at 512. Those three sets of 512 lines (one set for each color, created imperfectly) would become a de facto industry standard.
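The stopgap fix the engineers settled on is simple enough to spell out. In this hypothetical sketch, each color channel is a list of pixel rows, the scan came out one row short (511 rows), and the missing line is restored by repeating the top row, as Hutchinson's account describes. The slight elongation caused by the mistimed converters is not modelled, and the function names are illustrative only.

```python
def pad_by_replicating_top_row(channel, target_rows=512):
    """
    Hypothetical sketch: bring a scan that came out short back to target_rows
    by repeating its first (top) row. channel is a list of rows for ONE color.
    """
    if not channel:
        raise ValueError("channel has no rows to replicate")
    missing = target_rows - len(channel)
    if missing < 0:
        raise ValueError("channel already has more rows than the target")
    top_copies = [list(channel[0]) for _ in range(missing)]
    return top_copies + [list(row) for row in channel]


def pad_rgb(channels, target_rows=512):
    """Apply the same padding to each of the three color channels (R, G, B)."""
    return [pad_by_replicating_top_row(ch, target_rows) for ch in channels]


# Toy 511-row channel in which every pixel of row i holds the value i.
short_scan = [[row_index] * 512 for row_index in range(511)]
rgb = pad_rgb([short_scan, short_scan, short_scan])
```

The result has the expected 512 x 512 shape in all three channels, but its first two rows are identical: a small, permanent trace of the imperfect scan inside the "standard".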

The Miss November 1972 centerfold that the USC employees used for testing the implementation of the DCT featured Caucasian model Lena Söderberg (born Lena Sjööblom). Her image, ‘the Lenna’ (spelled with a double n to encourage the right pronunciation), quickly became the single most used picture in image-processing research, and even one of the first pictures uploaded to ARPANET, the precursor of today’s internet. In A Note on Lena (1996), David Munson, University of Illinois professor and editor-in-chief of IEEE Transactions on Image Processing, explains why he believes the Lena image became an industry standard: “First, the image contains a nice mixture of detail, flat regions, shading, and texture that do a good job of testing various image processing algorithms. It is a good test image! Second, the Lena image is a picture of an attractive woman. It is not surprising that the (mostly male) image-processing research community gravitated toward an image that they found attractive.” Munson goes on to describe why the Lena image has become such an issue: “some members of our community are unhappy with the source of the Lena image. I am sympathetic to their argument, which states that we should not use material from any publication that is seen (by some) as being degrading to women.”

While the use of the Lena image remained a topic of discussion, and its rights were never properly cleared or even checked with Playboy, by 1991, SIPI (USCs Signal and Image Processing Institute) actually started distributing the image of Lena for a fee, to researchers all over the world. While Lena was regularly found on the pages of image-processing journals, books, and conference papers, Playboy finally became aware of these transgressions when the Journal of Optical Engineering featured Lena on its July cover. In August 1991, Optical Engineering received a letter from Playboy Enterprises, Inc. asking them, “as fellow publishers”, to cease any unintentional, unauthorized use of the image and to contact Playboy for permission for any future use of their copyrighted material. The International Society for Optical Engineering (SPIE) responded, arguing that “[t]he image is widely used in the worldwide optics and electronics community. It is digitized and its common use permits comparison of different image processing techniques and algorithms coming out of different research laboratories.” They also pointed out that SPIE is a nonprofit scientific society and that the material published by SPIE is intended for educational and research purposes.

SPIE reached an understanding with Playboy, but in a January 1992 editorial, SPIE editor Brian J. Thompson warns that “it is each author's responsibility to make sure that materials in their articles are either free of copyright or that permission from the copyright holder has been obtained.” On the other side, Eileen Kent, Vice President of new media at Playboy publicly commented on the issue - “We decided we should exploit this, because it is a phenomenon” - and granted SPIE authorization for all further use of the image. According to publications director at SPIE Eric Pepper, “it was almost as if Lena had entered the public domain by that time. Almost, but not quite.”

In his retrospective article “How I Came Up with the Discrete Cosine Transform,” Nasir Ahmed describes how he conceived of applying a cosine transform to image compression, the idea that became the discrete cosine transform (DCT). Ahmed writes that he proposed a study of the cosine transform to the National Science Foundation (NSF). Much to his disappointment, however, the NSF did not support the proposal, because the whole idea seemed “too simple.” Ahmed decided to keep working on the problem and ultimately published his results in the January 1974 issue of IEEE Transactions on Computers. Today, more than 40 years after Ahmed’s proposal, DCT is widely used in digital image compression. For instance, the algorithm has become a core component of the JPEG image compression standard, developed by the Joint Photographic Experts Group.

“I remember dedicating the whole summer of 1973 to work on this problem. The results that we got appeared too good to be true, and I therefore decided to consult Harry Andrews later that year at a conference in New Orleans. […] When I sent the results back to Harry Andrews, he suggested that I publish them. As such, I sent them to the IEEE Computer Transactions, and the paper was then published in the January 1974 issue. […] Little did we realize at that time that the resulting “DCT” would be widely used in the future!”
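The following is a minimal Python sketch that makes the DCT less abstract. It implements the orthonormal two-dimensional DCT-II, which is the variant that baseline JPEG applies to 8×8 pixel blocks. The code favours readability over speed; real encoders use fast factorizations. The function names `dct_1d` and `dct_2d` are illustrative and do not come from Ahmed's paper or any JPEG library. The sketch also leaves out the level shift by 128 that JPEG applies before the transform.

```python
import math

def dct_1d(x):
    """Orthonormal DCT-II of the sequence x (length N)."""
    n = len(x)
    out = []
    for k in range(n):
        # The DC term (k = 0) gets a smaller scale factor so the transform is orthonormal.
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        total = sum(
            x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n)) for i in range(n)
        )
        out.append(scale * total)
    return out

def dct_2d(block):
    """2-D DCT-II: transform every row, then every column (the DCT is separable)."""
    rows = [dct_1d(row) for row in block]
    cols = [dct_1d(list(col)) for col in zip(*rows)]
    # Transpose back so that result[u][v] is vertical frequency u, horizontal frequency v.
    return [list(r) for r in zip(*cols)]

# A perfectly flat 8x8 block, as in a smooth, featureless image region.
flat = [[128] * 8 for _ in range(8)]
coeffs = dct_2d(flat)
ac = [coeffs[u][v] for u in range(8) for v in range(8) if (u, v) != (0, 0)]
print(round(coeffs[0][0], 6))         # 1024.0
print(max(abs(c) for c in ac) < 1e-9)  # True
```

For a flat block, all of the energy lands in the single DC coefficient at `[0][0]`, and every other coefficient is numerically zero. This energy compaction on smooth regions is what allows JPEG to quantize high-frequency coefficients coarsely or drop them altogether.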

Shortly after Ahmed’s initial proposal, in the summer of 1973, the implementation of DCT in digital image compression also became the subject of experiments at the University of Southern California’s (USC) Signal and Image Processing Institute. In a 2001 newsletter, Jamie Hutchinson offers an insightful retrospective on the testing of DCT, focusing on the implementation of, again, a Caucasian, female color test card. In the piece, Hutchinson quotes Alexander Sawchuk, who reminisces about his work on the test card during his time as an assistant professor of electrical engineering. Sawchuk explains how he and his colleagues had grown tired of the usual test images, or “dull stuff”: “They wanted something glossy to ensure good output dynamic range, and they wanted a human face. Just then, somebody happened to walk in with a recent issue of Playboy.” Sawchuk goes on to describe how they tore out the centerfold and scanned its top third with their Muirhead scanner. They had customized the scanner with analog-to-digital converters to create a 3-channel, 512x512px test image. After the tricky process was finished, Sawchuk realized that they had lost a line during scanning. Moreover, the timing of the analog-to-digital converters was off, which made the final test image slightly elongated compared to the original. Because of time pressure, however, the engineers settled for the distorted version and simply replicated the top line to arrive at 512. Those three sets of 512 lines, one set for each color and each created imperfectly, would become a de facto industry standard.
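Sawchuk's fix was to duplicate the top scan line so that each channel went from 511 back to 512 lines. The Python sketch below illustrates that step under some assumptions. The channel data is made up. Each channel is modelled as a list of rows. The copied line is assumed to be prepended at the top. The function name `replicate_top_line` is hypothetical, and the sketch does not model the converter timing error that elongated the image.

```python
WIDTH, TARGET_LINES = 512, 512

def replicate_top_line(channel, target=TARGET_LINES):
    """Prepend copies of the first scan line until the channel has `target` lines."""
    missing = target - len(channel)
    if missing < 0:
        raise ValueError("channel has more lines than the target height")
    top = channel[0]
    return [list(top) for _ in range(missing)] + [list(line) for line in channel]

# Hypothetical stand-in for the scan: each channel came out one line short (511 lines).
scanned = {
    name: [[(y + offset) % 256] * WIDTH for y in range(TARGET_LINES - 1)]
    for name, offset in (("red", 0), ("green", 85), ("blue", 170))
}
image = {name: replicate_top_line(lines) for name, lines in scanned.items()}

for name, lines in image.items():
    print(name, len(lines), lines[0] == lines[1])  # red 512 True, then green and blue likewise
```

The first two lines of every channel are now identical. That duplicated line is a small, permanent trace of the imperfect scan inside the "standard" image.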

The Miss November 1972 centerfold, which the USC researchers used to test the implementation of DCT, featured the Caucasian model Lena Söderberg (born Lena Sjööblom). Her image, ‘the Lenna’ (spelled with a double n to encourage the correct pronunciation), quickly became the single most used picture in image-processing research. It was even one of the first pictures uploaded to ARPANET, the precursor of today’s internet. In A Note on Lena (1996), David Munson, University of Illinois professor and editor-in-chief of IEEE Transactions on Image Processing, explains why he believes the Lena image became an industry standard: “First, the image contains a nice mixture of detail, flat regions, shading, and texture that do a good job of testing various image processing algorithms. It is a good test image! Second, the Lena image is a picture of an attractive woman. It is not surprising that the (mostly male) image-processing research community gravitated toward an image that they found attractive.” Munson goes on to explain why the Lena image has become such an issue: “some members of our community are unhappy with the source of the Lena image. I am sympathetic to their argument, which states that we should not use material from any publication that is seen (by some) as being degrading to women.”

The use of the Lena image remained a topic of discussion, and its rights were never properly cleared or even checked with Playboy. Even so, by 1991 SIPI (USC’s Signal and Image Processing Institute) had started distributing the image of Lena to researchers all over the world for a fee. Lena regularly appeared on the pages of image-processing journals, books, and conference papers, but Playboy only became aware of these transgressions when the journal Optical Engineering featured Lena on its July cover. In August 1991, Optical Engineering received a letter from Playboy Enterprises, Inc. asking them, “as fellow publishers”, to cease any unintentional, unauthorized use of the image and to contact Playboy for permission for any future use of their copyrighted material. The International Society for Optical Engineering (SPIE), which publishes the journal, responded, arguing that “[t]he image is widely used in the worldwide optics and electronics community. It is digitized and its common use permits comparison of different image processing techniques and algorithms coming out of different research laboratories.” SPIE also pointed out that it is a nonprofit scientific society and that the material it publishes is intended for educational and research purposes.

SPIE reached an understanding with Playboy, but in a January 1992 editorial, SPIE editor Brian J. Thompson warned that “it is each author's responsibility to make sure that materials in their articles are either free of copyright or that permission from the copyright holder has been obtained.” On the other side, Eileen Kent, Vice President of new media at Playboy, publicly commented on the issue (“We decided we should exploit this, because it is a phenomenon”) and granted SPIE authorization for all further use of the image. According to Eric Pepper, director of publications at SPIE, “it was almost as if Lena had entered the public domain by that time. Almost, but not quite.”

In May 1997, almost 25 years after being Miss November, Lena Söderberg attended the 50th-anniversary Imaging Science and Technology (IS&T) conference in Boston. Jeff Seideman, the president of the Boston chapter of IS&T, had arranged for Lena to appear. After the event, Seideman started working with Playboy's archivist to re-scan Lena's image and to compile the missing information. This included the type of photo emulsion used to make the print featured in the magazine and the technical specifications of the scanner. Seideman hoped that, as a result, the image of Lena would remain a standard reference image for compression technologies throughout the 21st century. Today, the standard Lena test image is still downloadable from several laboratory websites.

But the controversy around the Lena image did not end in the 1990s. In 2001, David Munson, editor of the IEEE’s image processing journal, recalled: “It was clear that some people wanted me to ban Lena from the journal […] People didn’t object to the image itself, but to the fact that it came from Playboy, which they feel exploits women.” Rather than ban Lena, Munson wrote an editorial in which he encouraged authors to use other images. “We could be fine-tuning our algorithms, our approaches, to this one image,” he says. “They will do great on that one image, but will they do well on anything else?” In 2016, Scott Acton, editor of IEEE Transactions on Image Processing, proposed to the journal’s editorial board a ban on the use of Lena in any published research: “In 2016, demonstrating that something works on Lena isn’t really demonstrating that the technology works.” Acton believed that the Lena image “doesn’t send the right message” to female researchers about their inclusion in the field. But Acton’s strongest objections were technical in nature: “Lena contains about 250,000 pixels, some 32 times smaller than a picture snapped with an iPhone 6. And then there’s a quality problem: The most commonly used version of the image is a scan of a printed page. The printing process doesn’t produce a continuous image, but rather a series of dots that trick your eye into seeing continuous tones and colors. Those dots, Acton says, mean that the scanned Lena image isn’t comparable to photos produced by modern digital cameras. Short of an all-out ban in the journal, he says, making authors aware of the image’s technical and ethical issues might be a way to usher Lena gracefully into retirement.”
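The "some 32 times" comparison can be reproduced with rounded figures. The exact resolution in the last line (3264 × 2448, the iPhone 6's 8-megapixel rear camera) is an assumption that the source does not state. With that figure the ratio comes out closer to 30, so the 32 appears to come from rounding to 8 million and 250,000 pixels.

```latex
\begin{aligned}
512 \times 512 &= 262{,}144 \approx 2.5 \times 10^{5} \text{ pixels} \\
\frac{8 \times 10^{6}}{2.5 \times 10^{5}} &= 32 \\
\frac{3264 \times 2448}{512 \times 512} &= \frac{7{,}990{,}272}{262{,}144} \approx 30.5
\end{aligned}
```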

The use of the Lena image clearly opened a discussion about embedded bias and the role of gender in test card usage, but many questions remain unanswered. How much are the performance, texture, and materiality of digital photography actually influenced by the use of the image of a Caucasian Lena? What would it have meant for the standardization of digital image compression if the test card had shown Jennifer Jackson (March 1965), the first African-American Playboy centerfold? What if the 512x512px image had instead featured Grace Murray Hopper? She was one of the first pioneers of computer programming, was responsible for some of the first compiler-related tools and, coincidentally, popularized the computer slang term “bug”. Or what if it had featured Christine Darden, an African-American NASA researcher who pioneered work on supersonic aircraft? How much do the compression standards we use on a day-to-day basis reflect the complexities of the ‘good’ 512x512px Lena image, and how well do these standard settings function when capturing another kind of color complexity?

Christine Darden in the control room of NASA Langley's Unitary Plan Wind Tunnel in 1975. Credit: NASA

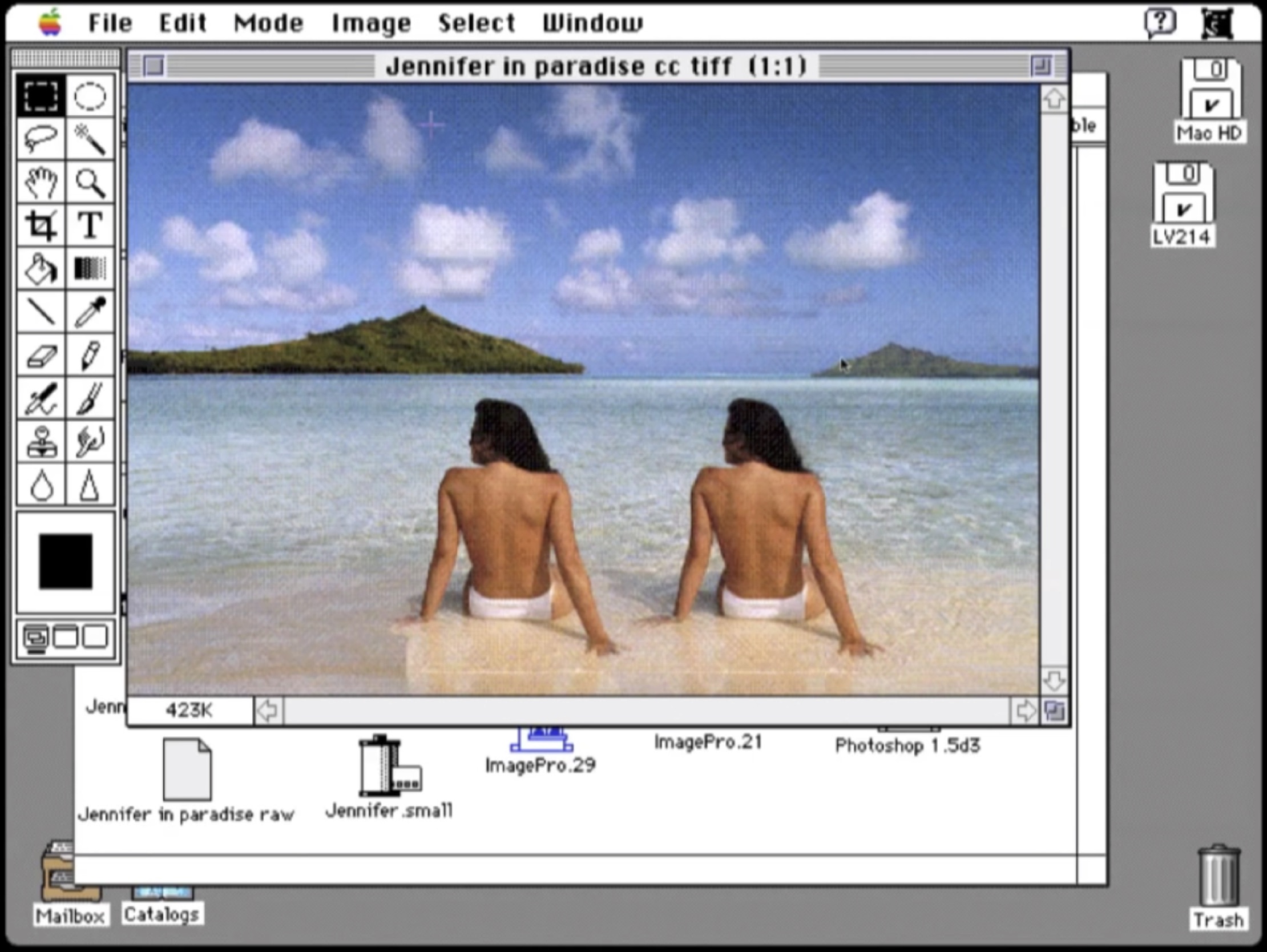

“Dear Jennifer,

Sometime in 1987, you were sitting on a beach in Bora Bora, looking at To’opua island, enjoying a holiday with a very serious boyfriend. […] This photograph of a beautiful moment in your personal history has also become a part of my history, and that of many other people; it has even shaped our outlooks on the world at large. John’s image of you became the first image to be publicly altered by the most influential image manipulation program ever. […] In essence, it was the very first photoshop meme—but now the image is nowhere to be found online.

Did John ask you if he could use the image? Did you enjoy seeing yourself on the screen as much as he did? Did you think you would be the muse that would inspire so much contemporary image making? Did you ever print out the image? Would you be willing to share it with me, and so, the other people for whom it took on such an unexpected significance? Shouldn’t the Smithsonian have the negative of that image, not to mention digital backups of its endless variations?

All these questions have made me decide to redistribute the image ‘jennifer in paradise’ as well as I can, somewhat as an artist, somewhat as a digital archeologist, restoring what few traces of it I could find. It was sad to realize this blurry screen grab was the closest I could get to the image, but beautiful at the same time. How often do you find an important image that is not online in several different sizes already?”

Constant Dullaart: Jennifer in Paradise – the correspondence (2013).

Jennifer in Paradise

A woman is sitting with her back towards us, topless, on a beach. Silver sand, blue water, a green island in the distance. We can’t see her face, but we know her name: Jennifer. This photo was taken in 1987 by John Knoll, one of the two original creators of Photoshop. It became the standard test image for the development and implementation of Photoshop and its suite of creative effects. Twirling, deleting, and copying Jennifer were just some of the processes tested on the image. In those early days of digital imaging, few digital images were available. That is why this 24-bit scan of a holiday photo of Jennifer, John’s soon-to-be wife, became the standard test image throughout the development of Photoshop. It is also one of the reasons why the image did not disappear when Photoshop moved out of its development phase. Once Photoshop was finally ready for public demonstrations, John and his brother Thomas used the image again and again in public and online demos. “It was a good image to do demos with,” John Knoll recalls. “It was pleasing to look at and there were a whole bunch of things you could do with that image technically.”

As Dutch artist Constant Dullaart explains in his Chaos Computer Club presentation The Possibility of an Army, John Knoll reaffirmed an age-old motif: a man objectifying a female body. Beyond this critique, Dullaart also underlined the special cultural-historical value of the artifact, which became a key inspiration for his 2013 Future Gallery solo show Jennifer in Paradise. In this show, Dullaart focused on the excavation and exhibition of a reconstruction of the Jennifer image. In an open letter accompanying the show, Dullaart describes the image of Jennifer as an important artifact in the history of software development and as an anecdote in Adobe history. He also asks Jennifer to share the original image file with the world. Gordon Comstock later echoed this sentiment in a 2014 piece for the Guardian, in which he argues that the image is as “central to the modern visual vernacular as Eadweard Muybridge’s shots of galloping horses or the first use of perspective.” In a way, just like the Lena image, Jennifer has become ‘a phenomenon’.

Dullaart never obtained any rights or permissions for the use of the Jennifer image. He did, however, digitally reconstruct the original image and create an image series of Photoshopped versions, materialized as wallpapers and a series of prints featuring enthusiastically filtered Jennifers (twirled, blurred, etc.). Dullaart also spread the digitally reconstructed version of the original image with an added payload: he steganographically hid messages in the reconstructed JPEG image file. By doing so, he intended to treat the JPEG not just as an image but as a unique container format for content, and to open a debate on the value of the digital file (format). The reconstructed Jennifer JPEG is not just a file that carries the reconstructed image information. Through steganography it has become a unique container and placeholder for discussing the materiality of digital photography. In terms of monetization, Dullaart only sells the password to the encrypted payload added to the reconstructed version of the original JPEG, that is, access to his secret message. Finally, to translate the work to the context of the gallery, Dullaart organized a performance. He had written his secret message in phosphorescent paint on top of the wallpaper and briefly revealed it by shining a blacklight on its surface. He then destroyed the blacklight as a metaphor for encryption (and inaccessibility).
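The source does not say which steganographic technique Dullaart used. What follows is only a minimal Python sketch of one widely known trick. Many JPEG decoders stop reading at the end-of-image (EOI) marker `0xFFD9`, so bytes appended after it travel with the file without changing the displayed picture. Several parts of the sketch are hypothetical conventions for this example:

- the 4-byte length trailer;
- the function names `embed_payload` and `extract_payload`;
- the "JPEG" itself, which is a dummy byte string.

The encryption step (the part Dullaart sells the password to) is not shown.

```python
import struct

EOI = b"\xff\xd9"  # JPEG end-of-image marker

def embed_payload(jpeg: bytes, payload: bytes) -> bytes:
    """Append payload after the JPEG data, followed by a 4-byte big-endian length."""
    if not jpeg.endswith(EOI):
        raise ValueError("expected data ending with the JPEG EOI marker")
    return jpeg + payload + struct.pack(">I", len(payload))

def extract_payload(container: bytes) -> bytes:
    """Read the 4-byte length trailer and return the bytes stored just before it."""
    (length,) = struct.unpack(">I", container[-4:])
    return container[-4 - length:-4]

# Hypothetical stand-in for a JPEG file: SOI marker, filler bytes, EOI marker.
fake_jpeg = b"\xff\xd8" + b"\x00" * 16 + EOI
carrier = embed_payload(fake_jpeg, b"a secret message")
print(extract_payload(carrier))       # b'a secret message'
print(carrier.startswith(fake_jpeg))  # True
```

The payload sits outside the image data, so re-saving the picture in an image editor would likely discard it. In this sense the file, rather than the picture, is the container.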

Dullaart never received a direct response from Jennifer or John Knoll to his request to release the original image into the public domain or to gift it to a (media) archeological institution such as the Smithsonian. Remarkably, for his Guardian article, Comstock did manage to get a short response from both.

John Knoll seems unconvinced: “I don't even understand what he's doing,” he says, bristling at the idea of the image being reconstructed without permission (ironically, using Photoshop). Jennifer is more sanguine: “The beauty of the internet is that people can take things, and do what they want with them, to project what they want or feel,” she says.

Perhaps even more remarkable is the fact that the Guardian article features just one image: the original Jennifer in Paradise photo taken by John Knoll, embedded on the newspaper’s website (and thus finally entering the digital domain). Albeit indirectly, Dullaart had thereby fulfilled one of the main goals of his solo show.

The stories of standardisation belong in high-school textbooks, while the violence of standardisation should be studied in every university curriculum. By illuminating these stories, we will reveal (and possibly undo) the white shadows.

Similar to Constant Dullaart’s call for Photoshop’s “Jennifer” to enter the Smithsonian archive, one way to expose the habitual whiteness of color-test cards is to insist that these standard images, so often obfuscated by technological histories, enter the public domain; they need to lose their elusiveness and become common knowledge.

----

References / a great deal of inspiration for this text came from the amazing research undertaken by:

Lorna Roth: Looking at Shirley, the Ultimate Norm: Colour Balance, Image Technologies, and Cognitive Equity (2009)

Video: Color film was built for white people. Here's what it did to dark skin (2009).

Project: The Colour Balance Project.

Hito Steyerl: White Shadows (2012).

Constant Dullaart: Jennifer in Paradise – the correspondence (2013).

James Bridle: The Render Ghosts (2013), in which he first connected the stories of Lena and Jennifer Knoll.

David C. Munson: A note on Lena, in: IEEE Transactions on Image Processing 5.1 (1996): p. 3.

Scott Acton in: Corinne Iozzio: The Playboy Centerfold That Helped Create the JPEG, in: The Atlantic (02/09/2016).